Earlier this month, I hosted my “Testing Without Mocks” course for the first time. It’s about a novel way of testing code. I’ve delivered part of this course at conferences before, but this was the first time I had delivered it online, and I added a ton of new material. At the risk of navel-gazing, this is what I learned.

tl;dr? Skip to the improvements.

The Numbers

Attendees: 17 people from 13 organizations. (One organization sent four people; another sent two people.)

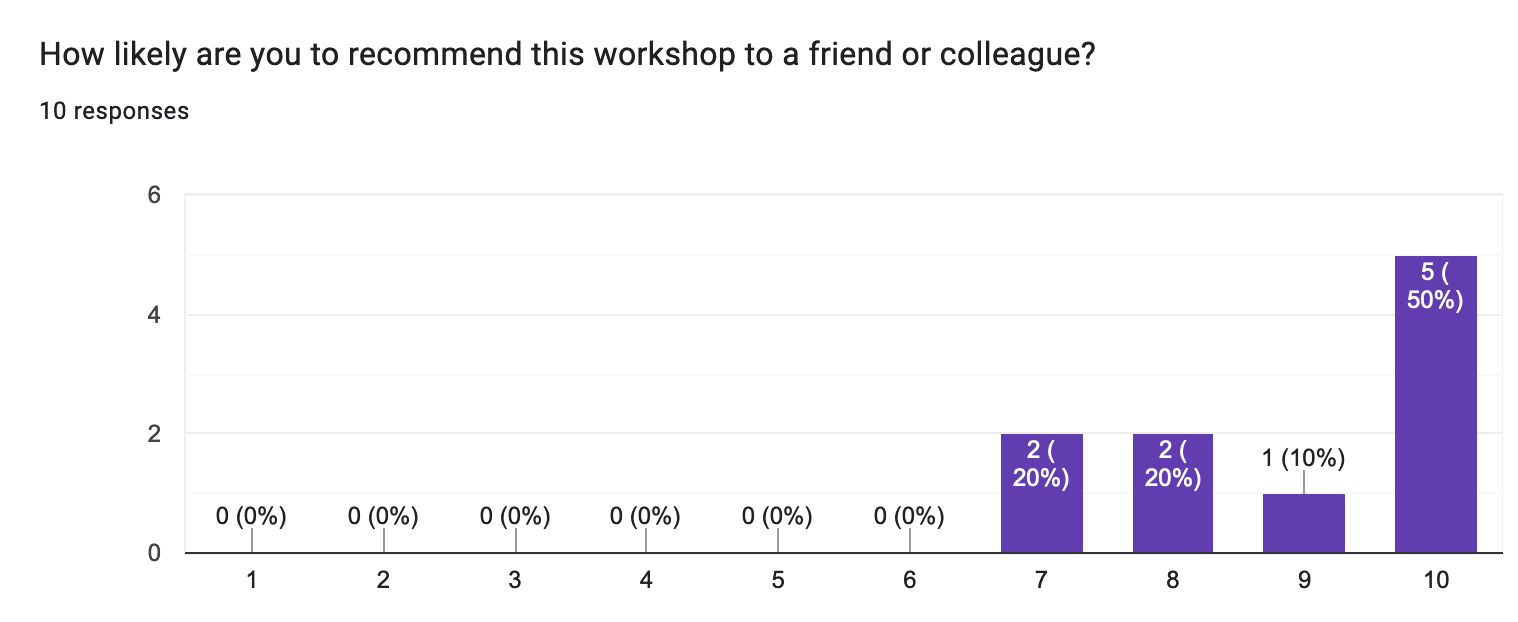

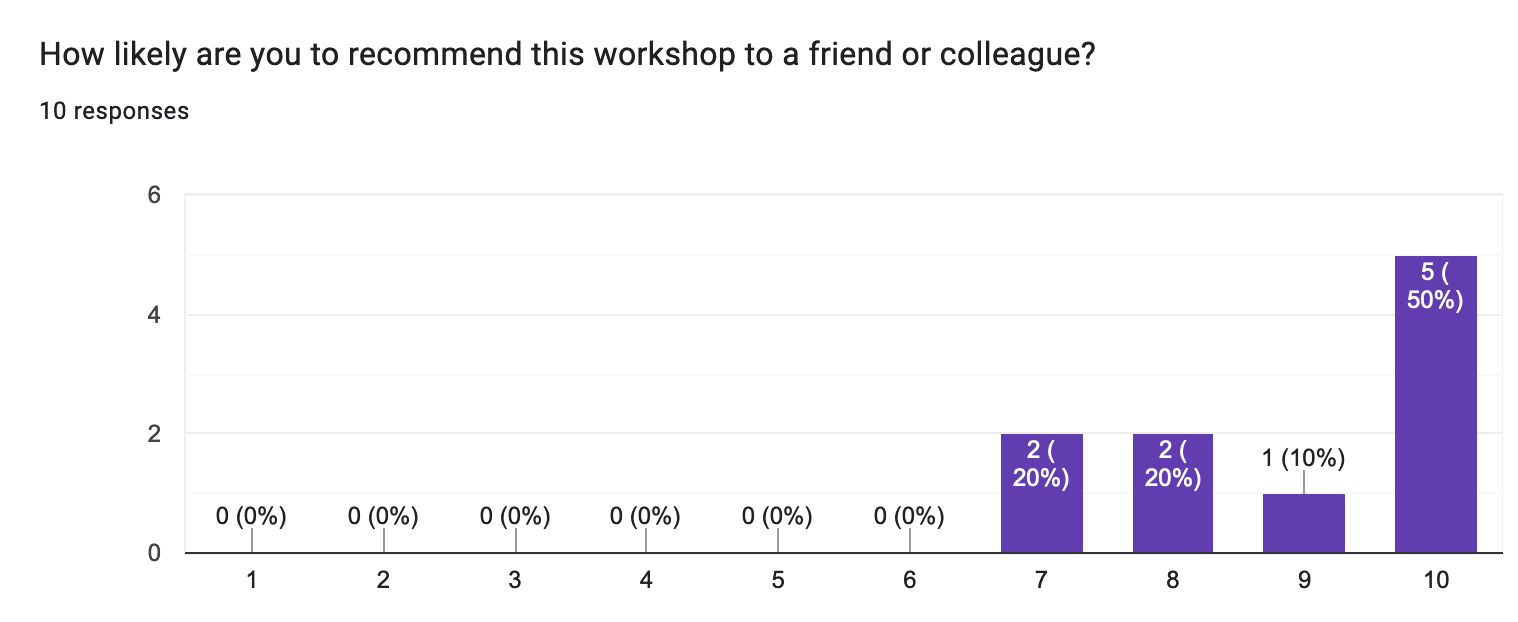

Evaluations: 10 out of 17 people (59%) filled out the evaluation form. That’s a much lower ratio than in my in-person courses, but unsurprising for an online course.

Net Promoter Score: 60. That’s an excellent score, and in line with my typical ratings.

Attendees had the choice of working solo, in a pair, or in a team. Going into the course:

- Solo: 1 person

- Pairing: 10 people in 5 pairs; six people paired with colleagues they already knew

- Team: 6 people in two 3-person teams

During the course, two people chose to switch to solo work.

Qualitative Feedback

Seven people (70%) commented that we should keep the exercise-focused format of the course. It was a free-form question, so this is an unusually high degree of alignment. Two people (20%) specifically called out the structure of the code as a positive, and two others liked the clear explanations.

The most common request was for more time: three people (30%) said the course would be improved by it. One person suggested having a longer break between sessions, and another would have liked it to be a better fit for their time zone.

There were several suggestions about programming languages. Two people wanted the course to be available in Java, C#, or Go. Two others suggested providing more information about Node.js in advance.

The course allowed you to work alone, in a pair, or in a team. Two people said it was a highlight. Another called out the friendly environment and knowledgeable colleagues. One person said their pairing partner didn’t work out, but appreciated my quick action to move them to solo work.

Accolades

I gave people the opportunity to provide a testimonial. Six people (60%) did so:

I recommend this workshop because it will give you very effective tools to improve how you build software. It has certainly done it for me.

Cristóbal G., Senior Staff Engineer

[We recommend this workshop because] it shows there is a better way to test than using mocks.

Dave M. and Jasper H., .NET Developers

I recommend this workshop because it gives you a new perspective on how to deal with side effects in your unit tests.

Martin G., Senior IT Consultant

I recommend this workshop because it presents a new approach to dealing with the problems usually addressed by using mocks. It's the first such approach I've found that actually improves on mocks.

John M., Software Engineer II

I recommend this workshop because it taught me how to effectively test infrastructure without resorting to tedious, slow, and flaky integration tests or test doubles. Testing Without Mocks will allow me to fully utilize TDD in my work and solve many of the pains my clients and I experience. The course was efficient, realistic, and thorough, with generous resources and time with James.

David L., Independent Consultant

I would recommend this workshop for any developer looking to improve their tests and add additional design tools to their tool belt. James gives out a lot of instruments and approaches that help you deal with tricky testing situations, make your test more robust and help you isolate your system from outside world in a way that is simple and straightforward to add to an existing system without having to do a massive redesign.

anonymous

Analysis

The exercise-focused structure of the course was a hit, and the specific exercises worked well. Nothing’s perfect, though, and there were a few rough spots that could be cleaned up. I’ll definitely keep the exercises and continue to refine the exercise materials.

On the other hand, I felt that the course was rushed, a feeling that was supported by the feedback. The first module was particularly squeezed. I also wasn’t able to spend as much time as I wanted on debriefing each module. I need more time.

A lot of attendees were located in central Europe. The course started at 5pm their time and lasted for 4½ hours, and then had optional office hours for another few hours after that. That meant very late nights for those attendees. I would like to create a schedule that’s more CEST-friendly.

Nearly everybody chose to work collaboratively. I put a lot of effort into matching people’s preferences and skills, but even then, two people ended up deciding to switch to independent work. It went smoothly overall, and received positive feedback, but putting strangers together feels risky. I plan to support pairing and teaming again, but I’ll evaluate it with a critical eye.

I spent the week before the course communicating about logistics and providing access to course materials. During the course, everybody’s build and tooling worked the first time, even for the pairs and teams. That’s unusually smooth. I’ll keep the same approach to logistics.

I put a lot of effort into the course materials, which have collapsible API documentation and progressive hints, but people largely ignored the materials, especially at the beginning of the course. This resulted in people getting stuck on questions that were answered in the materials. I need to provide more guidance on how to use the course materials.

I had each group work in a separate Zoom breakout room and share their screen. This allowed me to observe people’s progress without interrupting them. It worked well, so I’ll keep the same approach to breakout rooms.

For the most part, the use of JavaScript (or TypeScript) and Node.js wasn’t a problem, but a few people new to JavaScript struggled with it. I want to provide more support for people without JavaScript and Node.js experience.

Improvements

I’m very happy with how the course turned out, and I see opportunities to make it better. This is what I plan to change for the next course:

Improved schedule. I’ll switch to four 3-hour sessions spread over two weeks. I expect this to make the biggest difference: I’ll be able to spend more time on introducing and debriefing the material without sacrificing the exercises; people will have time between sessions to consolidate their learning and review upcoming material; and the course will end earlier for people in Europe.

Course material walkthrough. Before the first set of exercises, I’ll walk through the course materials and highlight the exercise setup, API and JavaScript documentation, and hints. I’ll demonstrate how to use the hints to get guidance without spoiling the answer. I’ll also show attendees where to find the exercise solutions, and tell them to double-check their solutions against the official answers as they complete each exercise.

Exercise expectations. The course materials have more exercises than can be finished in a single sitting. I’ll modify the materials to be clear about the minimum that needs to be completed, and remind people to use the hints if they’re falling behind schedule.

Exercise improvements. I took notes about common questions and sources of confusion. I’ll update the source code and exercise guides to smooth out the rough spots.

JavaScript and Node.js preparation. For people new to JavaScript and Node.js, I’ll provide an introductory guide they can read in advance. It will explain key concepts such as concurrency, promises, and events. I’ll also provide a reference for each module so people have the option to familiarize themselves with upcoming material.

Thanks for reading! If you’re interested in the course, you can sign up here.
