AoAD2 Practice: Test-Driven Development

This is an excerpt from The Art of Agile Development, Second Edition.

This excerpt is copyright 2007, 2021 by James Shore and Shane Warden. Although you are welcome to share this link, do not distribute or republish the content without James Shore’s express written permission.

Test-Driven Development

- Audience

- Programmers

We produce high-quality code in small, verifiable steps.

“What programming languages really need is a ‘DWIM’ instruction,” the joke goes. “Do what I mean, not what I say.”

Programming is demanding. It requires perfection, consistently, for months and years of effort. At best, mistakes lead to code that won’t compile. At worst, they lead to bugs that lie in wait and pounce at the moment that does the most damage.

Wouldn’t it be wonderful if there were a way to make computers do what you mean? A technique so powerful, it virtually eliminates the need for debugging?

There is such a technique. It’s test-driven development, and it really works.

Test-driven development, or TDD, is a rapid cycle of testing, coding, and refactoring. When adding a feature, you’ll perform dozens of these cycles, implementing and refining the software in tiny steps until there is nothing left to add and nothing left to take away. Done well, TDD ensures that the code does exactly what you mean, not just what you say.

When used properly, TDD also helps you improve your design, documents your code for future programmers, enables refactoring, and guards against future mistakes. Better yet, it’s fun. You’re always in control and you get this constant reinforcement that you’re on the right track.

TDD isn’t perfect, of course. TDD helps programmers code what they intended to code, but it doesn’t stop programmers from misunderstanding what they need to do. It helps improve documentation, refactoring, and design, but only if programmers work hard to do so. It also has a learning curve: it’s difficult to add to legacy codebases, and it takes extra effort to apply to code that involves the outside world, such as user interfaces, networking, and databases.

Try it anyway. Although TDD benefits from other Agile practices, it doesn’t require them. You can use it with almost any code.

Why TDD Works

Back in the days of punch cards, programmers laboriously hand-checked their code to make sure it would compile. A compile error could lead to failed batch jobs and intense debugging sessions.

Getting code to compile isn’t such a big deal anymore. Most IDEs check your syntax as you type, and some even compile every time you save. The feedback loop is so fast, errors are easy to find and fix. If something doesn’t compile, there isn’t much code to check.

Test-driven development applies the same principle to programmers’ intention. Just as modern environments provide feedback on the syntax of your code, TDD cranks up the feedback on the semantics of your code. Every few minutes—as often as every 20 to 30 seconds—TDD verifies that the code does what you think it should do. If something goes wrong, there are only a few lines of code to check. Mistakes become obvious.


TDD accomplishes this trick through a series of validated hypotheses. You work in very small steps, and at every step, you make a mental prediction about what’s going to happen next. First you write a bit of test code and predict it will fail in a particular way. Then a bit of production code and predict the test will now pass. Then a small refactoring and predict the test will pass again. If a prediction is ever wrong, you stop and figure it out—or just back up and try again.

As you go, the tests and production code mesh together to check each other’s correctness, and your successful predictions confirm that you’re in control of your work. The result is code that does exactly what you thought it should. You can still forget something, or misunderstand what needs to be done. But you can have confidence that the code does what you intended.

When you’re done, the tests remain. They’re committed with the rest of the code, and they act as living documentation of how you intended the code to behave. More importantly, your team runs the tests with every build, providing safety for refactoring and ensuring the code continues to work as originally intended. If someone accidentally changes the code’s behavior—for example, with a misguided refactoring—the tests fail, signaling the mistake.

Key Idea: Fast Feedback

Feedback and iteration is a key Agile idea, as discussed in the “Key Idea: Feedback and Iteration” sidebar. An important aspect of that feedback loop is the speed of feedback. The more quickly you can get feedback, the more quickly you can adjust course and correct mistakes, and the easier it is to understand what to do differently next time.

Agile teams try to speed up their feedback loops. The faster the feedback, the better. This applies at every level, from releases (“Were our ideas about value correct?”) to minute-to-minute coding (“Does the line of code I just wrote do what I think it should?”). Test-driven development, zero-friction development, continuous deployment, and adaptive planning’s “smallest valuable increments” are all examples of speeding up feedback.

How to Use TDD

You’ll need a programmers’ testing framework to use TDD. For historical reasons, they’re called “unit testing frameworks,” although they’re used for all sorts of tests. Every popular language has one, or even multiple—just do a web search for “<language> unit test framework.” Popular examples include JUnit for Java, xUnit.net for .NET, Mocha for JavaScript, and CppUTest for C++.


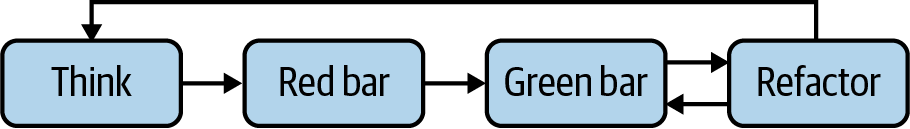

TDD follows the “red, green, refactor” cycle illustrated in the “The TDD Cycle” figure. Other than time spent thinking, each step should be incredibly small, providing you with feedback within a minute or two. Counterintuitively, the better at TDD someone is, the more likely they are to take small steps, and the faster they go. This is because TDD doesn’t prevent mistakes; it reveals them. Small steps mean fast feedback, and fast feedback means mistakes are easier and faster to fix.

Figure 1. The TDD cycle

Step 1: Think

TDD is “test-driven” because you start with a test, and then write only enough code to make the test pass. The saying is, “Don’t write any production code unless you have a failing test.”

Your first step, therefore, is to engage in a rather odd thought process. Imagine what behavior you want your code to have, then think of the very first piece to implement. It should be small. Very small. Fewer than five lines of code small.

Next, think of a test—also just a few lines of code—that will fail until exactly that behavior is present. Think of something that checks the code’s behavior, not its implementation. As long as the interface doesn’t change, you should be able to change the implementation at any time, without having to change the test.

- Allies

- Pair Programming

- Mob Programming

- Spike Solutions

This is the hardest part of TDD, because it requires thinking two steps ahead: first, what you want to do; second, which test will require you to do it. Pairing and mobbing help. While the driver works on making the current test pass, the navigator thinks ahead, figuring out which increment and test should come next.

Sometimes, thinking ahead will be too difficult. When that happens, use a spike solution to figure out how to approach the problem, then rebuild it using TDD.

Step 2: Red bar

When you know your next step, write the test. Write just enough test code for the current increment of behavior—hopefully fewer than five lines of code. If it takes more, that’s okay; just try for a smaller increment next time.

Write the test in terms of the code’s public interface, not how you plan to implement its internals. Respect encapsulation. This means your first test will use names that don’t exist yet. This is intentional: it forces you to design your interface from the perspective of a user of that interface, not as its implementer.

After the test is coded, predict what will happen. Typically, the test should fail, resulting in a red progress bar in most test runners. Don’t just predict that it will fail, though; predict how it will fail. Remember, TDD is a series of validated hypotheses. This is your first hypothesis.

- Ally

- Zero Friction

Then use your watch script or IDE to run the tests. You should get feedback within a few seconds. Compare the result to your prediction. Did they match?

If the test doesn’t fail, or if it fails in a different way than you expected, you’re no longer in control of your code. Perhaps your test is broken, or it doesn’t test what you thought it did. Troubleshoot the problem. You should always be able to predict what’s going to happen.


It’s just as important to troubleshoot unexpected successes as it is to troubleshoot unexpected failures. Your goal isn’t merely to have tests that pass; it’s to remain in control of your code—to always know what the code is doing and why.

Step 3: Green bar

Next, write just enough production code to get the test to pass. Again, you should usually need fewer than five lines of code. Don’t worry about design purity or conceptual elegance; just do what you need to do to make the test pass. You’ll clean it up in a moment.

Make another prediction and run the tests. This is your second hypothesis.

The tests should pass, resulting in a green progress bar. If the test fails, get back to known-good code as quickly as you can. Often, the mistake will be obvious. You’ve only written a few new lines.

If the mistake isn’t obvious, consider undoing your change and trying again. Sometimes it’s best to delete or comment out the new test and start over with a smaller increment. Remaining in control is key.

It’s always tempting to beat your head against the problem rather than backing up and trying again. I do it too. And yet, hard-won experience has taught me that trying again with a smaller increment is almost always faster and easier.

That doesn’t stop me from beating my head against walls—it always feels like the solution is just around the corner—but I have finally learned to set a timer so the damage is contained. If you can’t bring yourself to undo right away, set a 5- or 10-minute timer, and promise yourself that you’ll back up and try again, with a smaller increment, when the timer goes off.

Step 4: Refactor

- Ally

- Refactoring

When your tests are passing again, you can refactor without worrying about breaking anything. Review the code you have so far and look for possible improvements. If you’re pairing or mobbing, ask your navigator if they have any suggestions.

Incrementally refactor to make each improvement. Use very small refactorings—less than a minute or two each, certainly not longer than five minutes—and run the tests after each one. They should always pass. As before, if the test doesn’t pass and the mistake isn’t immediately obvious, undo the refactoring and get back to known-good code.

- Ally

- Simple Design

Refactor as much as you like. Make the code you’re touching as clean as you know how, without worrying about making it perfect. Be sure to keep the design focused on the software’s current needs, not what might happen in the future.

While you refactor, don’t add any functionality. Refactoring isn’t supposed to change behavior. New behavior requires a failing test.

Step 5: Repeat

When you’re ready to add new behavior, start the cycle over again.


If things are going smoothly, with every hypothesis matching reality, you can “upshift” and take bigger steps. (But generally not more than five lines of code at a time.) If you’re running into problems, “downshift” and take smaller steps.

The key to successful TDD is small increments and fast feedback. Every minute or two, you should get a confirmation that you’re on the right track and your changes did what you expected them to do. Typically, you’ll run through several cycles very quickly, then spend more time thinking and refactoring for a few cycles, then speed up again.

Cargo Cult: Test-Driven Debaclement

“Oh yeah, TDD.” Alisa scowls. “I tried that once. What a disaster.”

“What happened?” you ask. TDD has worked well for you, but some things have been a challenge to figure out. Maybe you can share some tips.

“Okay, so TDD is about codifying your spec with tests, right?” Alisa explains, with a hint of condescension. “You start out by figuring out what you want your code to do, then write all the tests so that the code is fully specified. Then you write code until the tests pass.”

“But that’s just stupid!” she rants. “Forcing a spec up front requires you to make decisions before you fully understand the problem. Now you have all these tests that are hard to change, so you’re invested in the solution and won’t look for better options. Even if you do decide to change, all those tests lock in your implementation so it’s nearly impossible to change without redoing all your work. It’s ridiculous!”

“I...I don’t think that’s TDD,” you stammer. “That sounds awful. You’re supposed to work in small steps, not write all the tests up front. And you’re supposed to test behavior, not implementation.”

“No, you’re wrong,” Alisa says firmly. “TDD is test-first development. Write the tests, then write the code. And it sucks. I know, I’ve tried it.”

Eat the Onion from the Inside Out

The hardest part of TDD is figuring out how to take small steps. Luckily, coding problems are like ogres, and onions: they have layers. The trick with TDD is to start with the sweet, juicy core, and then work your way out from there. You can use any strategy you like, but this is the approach I use:

Core interface. Start by defining the core interface you want to call, then write a test that calls that interface in the simplest possible way. Use this as an opportunity to see how the interface works in practice. Is it comfortable? Does it make sense? To make the test pass, you can just hardcode the answer.

Calculations and branches. Your hardcoded answer isn’t enough. What calculations and logic are at the core of your new code? Start adding them, one branch and calculation at a time. Focus on the happy path: how the code will be used when everything’s working properly.

Loops and generalization. Your code will often involve loops or alternative ways of being used. Once you’ve implemented the core logic, add support for those alternatives, one at a time. You’ll often need to refactor the logic you’ve built into a more generic form to keep the code clean.

Special cases and error handling. After you’ve handled all the happy-path cases, think about everything that can go wrong. Do you call any code that could throw an exception? Do you make any assumptions that need to be validated? Write tests for each one.

Runtime assertions. As you work, you might identify situations that can arise only as the result of a programming error, such as an array index that’s out of bounds, or a variable that should never be null. Add runtime assertions for these cases so they fail fast. (See the “Fail Fast” section.) They don’t need to be tested, since they’re just an added safety net.

James Grenning’s ZOMBIES mnemonic might help: Test Zero, then One, then Many. While you test, pay attention to Boundaries, Interfaces, and Exceptions, all while keeping the code Simple. [Grenning2016]

A TDD Example

TDD is best understood by watching somebody do it. I have several video series online demonstrating real-world TDD. At the time of this writing, my free “TDD Lunch & Learn” series is the most recent. It has 21 episodes covering everything from TDD basics all the way up to thorny problems such as networking and timeouts. [Shore2020b]

The first of these examples uses TDD to create a ROT-13 encoding function. (ROT-13 is a simple Caesar cipher where “abc” becomes “nop” and vice versa.) It’s a very simple problem, but it’s a good example of how even small problems can be broken down into very small steps.

In this example, notice the techniques I use to work in small increments. The increments may even seem ridiculously small, but that makes finding mistakes easy, and that helps me go faster. As I said, the more experience you have with TDD, the smaller the steps you’re able to take, and the faster that allows you to go.

Start with the core interface

Think. First, I needed to decide how to start. As usual, the core interface is a good starting point. What did I want it to look like?

This example was written in JavaScript—specifically, Node.js—so I had the choice between creating a class or just exporting a function from a module. There didn’t seem to be much value in making a full-blown class, so I decided to just make a rot13 module that exported a transform function.

Red bar. Now that I knew what I wanted to do, I was able to write a test that exercised that interface in the simplest possible way:

```javascript
it("runs tests", function() { // ⓵
  assert.equal(rot13.transform(""), ""); // ⓶
});
```

Line 1 defines the test, and line 2 asserts that the actual value, rot13.transform(""), matches the expected value, "". (Some assertion libraries put the expected value first, but this example uses Chai, which puts the actual value first.)

Before running the test, I made a hypothesis. Specifically, I predicted the test would fail because rot13 didn’t exist, and that’s what happened.

Green bar. To make the test pass, I created the interface and hardcoded just enough to satisfy the test:

```javascript
export function transform() {
  return "";
}
```

Hardcoding the return value is kind of a party trick, and I’ll often write a bit of real code during this first step, but in this case, there wasn’t anything else the code needed to do.


Refactor. Check for opportunities to refactor every time through the loop. In this case, I renamed the test from “runs tests,” which was leftover from my initial setup, to “does nothing when input is empty.” That’s obviously more helpful for future readers. Good tests document how the code is intended to work, and good test names allow the reader to get a high-level understanding by skimming through the names. Note how the name talks about what the production code does, not what the test does:

```javascript
it("does nothing when input is empty", function() {
  assert.equal(rot13.transform(""), "");
});
```

Calculations and branches

Think. Now I needed to code the core logic of the ROT-13 transform. Eventually, I knew I wanted to loop through the string and convert one character at a time, but that was too big of a step. I needed to think of something smaller.

A smaller step is to “convert one character,” but even that was too big. Remember, the smaller the steps, the faster you’re able to go. I needed to break it down even smaller. Ultimately, I decided to just transform one lower-case letter forward 13 letters. Upper-case letters and looping around after “z” would wait for later.

Red bar. With such a small step, the test was easy to write:

```javascript
it("transforms lower-case letters", function() {
  assert.equal(rot13.transform("a"), "n");
});
```

My hypothesis was that the test would fail, expecting "n" but getting "", and that’s what happened.

Green bar. Making the test pass was just as easy:

```javascript
export function transform(input) {
  if (input === "") return "";

  let charCode = input.charCodeAt(0);
  charCode += 13;
  return String.fromCharCode(charCode);
}
```

Even though this was a small step, it forced me to work out the critical question of converting letters to character codes and back, something I had to look up. Taking a small step allowed me to solve this problem in isolation, which made it easier to tell when I got it right.

Refactor. I didn’t see any opportunities to refactor, so it was time to go around the loop again.

Repeat. I continued in this way, step by small step, until the core letter transformation algorithm was complete.

1. Lower-case letter forward: `a` → `n` (as I just showed)
2. Lower-case letter backward: `n` → `a`
3. First character before `a` doesn’t rotate: `` ` `` → `` ` ``
4. First character after `z` doesn’t rotate: `{` → `{`
5. Upper-case letters forward: `A` → `N`
6. Upper-case letters backward: `N` → `A`
7. More boundary cases: `@` → `@` and `[` → `[`

After each step, I considered the code and refactored when appropriate. Here are the resulting tests. The numbers correspond to each step. Note how some steps resulted in new tests, and others just enhanced an existing test:

```javascript
it("does nothing when input is empty", function() {
  assert.equal(rot13.transform(""), "");
});

it("transforms lower-case letters", function() {
  assert.equal(rot13.transform("a"), "n"); // ⓵
  assert.equal(rot13.transform("n"), "a"); // ⓶
});

it("transforms upper-case letters", function() {
  assert.equal(rot13.transform("A"), "N"); // ⓹
  assert.equal(rot13.transform("N"), "A"); // ⓺
});

it("doesn't transform symbols", function() {
  assert.equal(rot13.transform("`"), "`"); // ⓷
  assert.equal(rot13.transform("{"), "{"); // ⓸
  assert.equal(rot13.transform("@"), "@"); // ⓻
  assert.equal(rot13.transform("["), "["); // ⓻
});
```

Here’s the production code. It’s harder to match each step to the code because there was so much refactoring (see episode 1 of [Shore2020b] for details), but you can see how TDD is an iterative process that gradually causes the code to grow:

```javascript
export function transform(input) {
  if (input === "") return "";

  let charCode = input.charCodeAt(0); // ⓵
  if (isBetween(charCode, "a", "m") || isBetween(charCode, "A", "M")) { // ⓷⓸⓹
    charCode += 13; // ⓵
  } else if (isBetween(charCode, "n", "z") || isBetween(charCode, "N", "Z")) { // ⓶ ⓸ ⓺
    charCode -= 13; // ⓶
  }
  return String.fromCharCode(charCode); // ⓵
}

function isBetween(charCode, firstLetter, lastLetter) { // ⓸
  return charCode >= codeFor(firstLetter) && charCode <= codeFor(lastLetter); // ⓸
} // ⓸

function codeFor(letter) { // ⓷
  return letter.charCodeAt(0); // ⓷
} // ⓷
```

Step 7 (tests for more boundary cases) didn’t result in new production code, but I included it just to make sure I hadn’t made any mistakes.

Loops and generalization

Think. So far, the code handled only strings with one letter. Now it was time to generalize it to support full strings.

Refactor. I realized that this would be easier to implement if I factored out the core logic, so I jumped back to the “Refactoring” step to do so:

```javascript
export function transform(input) {
  if (input === "") return "";

  let charCode = input.charCodeAt(0);
  return transformLetter(charCode);
}

function transformLetter(charCode) {
  if (isBetween(charCode, "a", "m") || isBetween(charCode, "A", "M")) {
    charCode += 13;
  } else if (isBetween(charCode, "n", "z") || isBetween(charCode, "N", "Z")) {
    charCode -= 13;
  }
  return String.fromCharCode(charCode);
}

function isBetween...

function codeFor...
```

Refactoring to make the next step easier is a technique I use all the time. Sometimes, during the “Red bar” step, I realize that I should have refactored first. When that happens, I comment out the test temporarily so I can refactor while my tests are passing. This makes it faster and easier for me to detect refactoring errors.

Red bar. Now I was ready to generalize the code. I updated one of my tests to prove a loop was needed:

```javascript
it("transforms lower-case letters", function() {
  assert.equal(rot13.transform("abc"), "nop");
  assert.equal(rot13.transform("n"), "a");
});
```

I expected it to fail, expecting "nop" and getting "n", because it was looking at only the first letter, and that’s exactly what happened.

Green bar. I modified the production code to add the loop:

```javascript
export function transform(input) {
  let result = "";
  for (let i = 0; i < input.length; i++) {
    let charCode = input.charCodeAt(i);
    result += transformLetter(charCode);
  }
  return result;
}

function transformLetter...

function isBetween...

function codeFor...
```

- Ally

- Zero Friction

Refactor. I decided to flesh out the tests so they’d work better as documentation for future readers of this code. This wasn’t strictly necessary, but I thought it would make the ROT-13 logic more obvious. I changed one assertion at a time, of course. The feedback was so fast and frictionless, executing automatically every time I saved, there was no reason not to.

In this case, everything worked as expected, but if something had failed, changing one assertion at a time would have made debugging just a little bit easier. Those benefits add up:

```javascript
it("does nothing when input is empty", function() {
  assert.equal(rot13.transform(""), "");
});

it("transforms lower-case letters", function() {
  assert.equal(
    rot13.transform("abcdefghijklmnopqrstuvwxyz"), "nopqrstuvwxyzabcdefghijklm" // ⓵
  );
  assert.equal(rot13.transform("n"), "a"); // ⓶
});

it("transforms upper-case letters", function() {
  assert.equal(
    rot13.transform("ABCDEFGHIJKLMNOPQRSTUVWXYZ"), "NOPQRSTUVWXYZABCDEFGHIJKLM" // ⓷
  );
  assert.equal(rot13.transform("N"), "A"); // ⓸
});

it("doesn't transform symbols", function() {
  assert.equal(rot13.transform("`{@["), "`{@["); // ⓹
  assert.equal(rot13.transform("{"), "{"); // ⓺
  assert.equal(rot13.transform("@"), "@"); // ⓺
  assert.equal(rot13.transform("["), "["); // ⓺
});
```

Special cases, error handling, and runtime assertions

Finally, I wanted to look at everything that could go wrong. I started with runtime assertions. How could the code be used incorrectly? Usually, I don’t test my runtime assertions because they’re just a safety net, but I did so this time for the purpose of demonstration:

```javascript
it("fails fast when no parameter provided", function() { // ⓵
  assert.throws( // ⓵
    () => rot13.transform(), // ⓵
    "Expected string parameter" // ⓵
  ); // ⓵
}); // ⓵

it("fails fast when wrong parameter type provided", function() { // ⓶
  assert.throws( // ⓶
    () => rot13.transform(123), // ⓶
    "Expected string parameter" // ⓶
  ); // ⓶
}); // ⓶
```

Of course, I followed the TDD loop and added the tests one at a time. Implementing them meant adding a guard clause, which I also implemented incrementally:

```javascript
export function transform(input) {
  if (input === undefined /* ⓵ */ || typeof input !== "string" /* ⓶ */) {
    throw new Error("Expected string parameter"); // ⓵
  } // ⓵
  ...
```

Good tests also act as documentation, so my last step is always to review the tests and think about how well they communicate to future readers. Typically, I’ll start with the general “happy path” case, then go into specifics and special cases. Sometimes I’ll add a few tests just to clarify behavior, even if I don’t have to change the production code. That was the case with this code. These are the tests I ended up with:

```javascript
it("does nothing when input is empty", ...);
it("transforms lower-case letters", ...);
it("transforms upper-case letters", ...);
it("doesn’t transform symbols", ...);
it("doesn’t transform numbers", ...);
it("doesn’t transform non-English letters", ...);
it("doesn’t break when given emojis", ...);
it("fails fast when no parameter provided", ...);
it("fails fast when wrong parameter type provided", ...);
```

And the final production code:

```javascript
export function transform(input) {
  if (input === undefined || typeof input !== "string") {
    throw new Error("Expected string parameter");
  }

  let result = "";
  for (let i = 0; i < input.length; i++) {
    let charCode = input.charCodeAt(i);
    result += transformLetter(charCode);
  }
  return result;
}

function transformLetter(charCode) {
  if (isBetween(charCode, "a", "m") || isBetween(charCode, "A", "M")) {
    charCode += 13;
  } else if (isBetween(charCode, "n", "z") || isBetween(charCode, "N", "Z")) {
    charCode -= 13;
  }
  return String.fromCharCode(charCode);
}

function isBetween(charCode, firstLetter, lastLetter) {
  return charCode >= codeFor(firstLetter) && charCode <= codeFor(lastLetter);
}

function codeFor(letter) {
  return letter.charCodeAt(0);
}
```

At this point, the code did everything it needed to. Readers familiar with JavaScript, however, will notice that the code can be further refactored and improved. I continue the example in the “Refactoring in Action” section.

Questions

Isn’t TDD wasteful?

I go faster with TDD than without it. With enough practice, I think you will too.

TDD is faster because programming doesn’t just involve typing at the keyboard. It also involves debugging, manually running the code, checking that a change worked, and so forth. Michael “GeePaw” Hill calls this activity GAK, for “geek at keyboard.” With TDD, you spend much less time GAKking around and more time doing fun programming work. You also spend less time studying code, because the tests act as documentation and inform you when you make mistakes. Even though tests take time to write, the net result is that you have more time for development, not less. GeePaw Hill’s video “TDD & The Lump of Coding Fallacy” [Hill2018] is an excellent and entertaining explanation of this phenomenon.

What do I need to test when using TDD?

The saying is, “Test everything that can possibly break.” To determine if something could possibly break, I think, “Do I have confidence that I’m doing this correctly, and that nobody in the future will inadvertently break this code?”

I’ve learned through painful experience that I can break nearly everything, so I test nearly everything. The only exception is code without any logic, such as simple getters and setters, or a function that calls only another function.

You don’t need to test third-party code unless you have some reason to distrust it. But it is a good idea to wrap third-party code in code that you control, and test that the wrapper works the way you want it to. The “Third-Party Components” section has more about wrapping third-party code.

How do I test private methods?

Start by testing public methods. As you refactor, some of that code will move into private methods, but it will still be covered by existing tests.

If your code is so complex that you need to test a private method directly, this is a good indication that you should refactor. You can move the private function into a separate module or method object, where it will be public, and test it directly.

How can I use TDD when developing a UI?

TDD is particularly difficult with user interfaces because most UI frameworks weren’t designed with testability in mind. Many people compromise by writing a very thin, untested translation layer that only forwards UI calls to a presentation layer. They keep all their UI logic in the presentation layer and use TDD on that layer as normal.

There are tools that allow you to test a UI directly by making HTTP calls (for web-based software) or by pressing buttons and simulating window events (for client-side software). That’s what I prefer to use. Although they’re usually used for broad tests, I use them to write narrow integration tests of my UI translation layer. (See the “Test Outside Interactions with Narrow Integration Tests” section.)

Should we refactor our test code?

Absolutely. Tests have to be maintained, too. I’ve seen otherwise-fine codebases go off the rails because of brittle and fragile test suites.

That said, tests are a form of documentation and should generally read like a step-by-step recipe. Loops and logic should be moved into helper functions that make the underlying intent of the test easier to understand. Between tests, though, it’s okay to have some duplication if it makes the intent of each test clearer. Unlike production code, tests are read much more often than they’re modified.

Arlo Belshee uses the acronym “WET,” for “Write Explicit Tests,” as a guiding principle for test design. It’s in contrast with the DRY (Don’t Repeat Yourself) principle used for production code. His article on test design, “WET: When DRY Doesn’t Apply,” is superb. [Belshee2016a]

How much code coverage should we have?

Measuring code coverage is often a mistake. Rather than focusing on code coverage, focus on taking small steps and using your tests to drive your code. If you do this, everything you want to test will be tested. The “Code Coverage” sidebar discusses this topic further.

Prerequisites

Although TDD is a very valuable tool, it does have a two- or three-month learning curve. It’s easy to apply to toy problems such as the ROT-13 example, but translating that experience to larger systems takes time. Legacy code, proper test isolation, and narrow integration tests are particularly difficult to master. On the other hand, the sooner you start using TDD, the sooner you’ll figure it out, so don’t let these challenges stop you.

Because TDD has a learning curve, be careful about adopting it without permission. Your organization could see the initial slowdown and reject TDD without proper consideration. Similarly, be cautious about being the only one to use TDD on your team. It’s best if everyone agrees to use it together; otherwise, you’re likely to end up with other members of the team inadvertently breaking your tests and creating test-unfriendly code.

Once you do adopt TDD, don’t continue to ask permission to write tests. They’re a normal part of development. When sizing stories, include the time required for testing in your size considerations.

- Allies

- Zero Friction

- Fast Reliable Tests

Fast feedback is crucial for TDD to be successful. Make sure you can get feedback within one to five seconds, at least for the subset of tests you’re currently working on.
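One way to keep an eye on that budget is sketched below with Python’s standard unittest module. It runs only the test case you’re working on and reports how long the run took. The five-second ceiling comes from the text; the run_focused helper and the sample CurrentWorkTest class are hypothetical.

```python
import io
import time
import unittest

FEEDBACK_BUDGET_SECONDS = 5.0  # upper end of the one-to-five-second target


def run_focused(case: type[unittest.TestCase]) -> tuple[bool, float]:
    """Run a single TestCase class; return (all passed?, elapsed seconds)."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(case)
    runner = unittest.TextTestRunner(stream=io.StringIO(), verbosity=0)  # quiet output
    start = time.perf_counter()
    result = runner.run(suite)
    elapsed = time.perf_counter() - start
    if elapsed > FEEDBACK_BUDGET_SECONDS:
        print(f"warning: focused tests took {elapsed:.1f}s; feedback loop is too slow")
    return result.wasSuccessful(), elapsed


class CurrentWorkTest(unittest.TestCase):
    """Stand-in for whatever tests you're focused on right now."""

    def test_addition(self) -> None:
        self.assertEqual(2 + 2, 4)


if __name__ == "__main__":
    passed, seconds = run_focused(CurrentWorkTest)
    print(f"{'PASS' if passed else 'FAIL'} in {seconds:.2f}s")
```

Most test runners can do the same filtering from the command line. For example, python -m unittest accepts a dotted test name, and pytest’s -k option selects tests by keyword.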

Finally, don’t let your tests become a straitjacket. If you can’t refactor your code without breaking a lot of tests, something is wrong. Often, it’s a result of overzealous use of test doubles. Similarly, overuse of broad tests can lead to tests that fail randomly. Fast, reliable tests are judicious in their use of both.
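The following minimal illustration shows how overusing test doubles makes tests brittle, using hypothetical Receipt and PriceFormatter classes. The first test uses unittest.mock.Mock to pin down how Receipt talks to its collaborator, so an internal refactoring breaks it. The second test checks the observable result with the real, fast formatter, so it survives such refactorings.

```python
from unittest.mock import Mock


class PriceFormatter:
    def format(self, cents: int) -> str:
        """Format a non-negative amount of cents as dollars, e.g. 350 -> "$3.50"."""
        return f"${cents // 100}.{cents % 100:02d}"


class Receipt:
    def __init__(self, formatter: PriceFormatter) -> None:
        self._formatter = formatter

    def total_line(self, item_cents: list[int]) -> str:
        return "Total: " + self._formatter.format(sum(item_cents))


# Brittle: asserts on the interaction, not the outcome. If Receipt later
# formats the total some other way, this fails even though the output
# is still correct.
def test_total_line_delegates_to_formatter() -> None:
    formatter = Mock()
    formatter.format.return_value = "$3.50"
    Receipt(formatter).total_line([150, 200])
    formatter.format.assert_called_once_with(350)


# Sturdier: uses the real, fast collaborator and checks what users see.
def test_total_line_shows_formatted_sum() -> None:
    assert Receipt(PriceFormatter()).total_line([150, 200]) == "Total: $3.50"
```

Test doubles still earn their place when a real collaborator is slow or talks to the outside world. The point is to reach for them deliberately, not by default.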

Indicators

When you use TDD well:

You spend little time debugging.

You continue to make programming mistakes, but you find them in a matter of minutes and can fix them easily.

You have total confidence that the whole codebase does what programmers intended it to do.

You aggressively refactor at every opportunity, confident in the knowledge that the tests will catch any mistakes.

Alternatives and Experiments

TDD is at the heart of the Delivering practices. Without it, Delivering fluency will be difficult or even impossible to achieve.

A common misinterpretation of TDD, as the “Test-Driven Debaclement” sidebar illustrates, is to design your code first, write all the tests, and then write the production code. This approach is frustrating and slow, and it doesn’t allow you to learn as you go.

Another approach is to write tests after writing the production code. This is very difficult to do well: the code has to be designed for testability, and it’s hard to do so unless you write the tests first. It’s also tedious, with a constant temptation to wrap up and move on. In practice, I’ve yet to see after-the-fact tests come close to the detail and quality of tests created with TDD.

Even if these approaches do work for you, TDD isn’t just about testing. It’s really about using very small, continuously validated hypotheses to confirm that you’re on the right track and producing high-quality code. With the exception of Kent Beck’s TCR, which I’ll discuss in a moment, I’m not aware of any alternatives to TDD that allow you to do so while also providing the documentation and safety of a good test suite.

Under the TDD banner, though, there are many, many experiments that you can conduct. TDD is one of those “moments to learn, lifetime to master” skills. Look for ways to apply TDD to more and more technologies, and experiment with making your feedback loops smaller.

Kent Beck has been experimenting with an idea he calls TCR: test && commit || revert. [Beck2018] It refers to a small script that automatically commits your code if the tests pass and reverts it if the tests fail. This gives you the same series of validated hypotheses that TDD does, and arguably makes them even smaller and more frequent. Taking such small steps is one of the hardest and most important TDD skills to learn. TCR is worth trying as an exercise, if nothing else.
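The idea fits in one line of shell. As a hedged sketch, here is the same loop in Python. It is an illustration of the idea, not Beck’s script, and it assumes a git repository and a placeholder test command (python -m pytest; substitute your own). The command runner is injectable, so the logic can be tested without touching a real repository.

```python
import subprocess
from typing import Callable, Sequence

Runner = Callable[[Sequence[str]], int]

# Assumption: adjust to whatever runs your test suite.
TEST_COMMAND = ["python", "-m", "pytest", "--quiet"]


def run(command: Sequence[str]) -> int:
    """Run a command, returning its exit code (0 means success)."""
    return subprocess.run(list(command)).returncode


def tcr(test_command: Sequence[str] = TEST_COMMAND, runner: Runner = run) -> str:
    """test && commit || revert."""
    if runner(test_command) == 0:
        # Tests pass: keep this step by committing everything.
        # (Unlike the shell one-liner, a failed commit here does not trigger a revert.)
        runner(["git", "add", "--all"])
        runner(["git", "commit", "--message", "tcr"])
        return "committed"
    # Tests fail: discard changes to tracked files and return to the last commit.
    # Untracked new files survive `git reset --hard`.
    runner(["git", "reset", "--hard"])
    return "reverted"


if __name__ == "__main__":
    print(tcr())
```

Run it after every tiny change. If it reverts more than you’d like, the step was too big.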

Further Reading

Test-Driven Development: By Example [Beck2002] is an excellent introduction to TDD by the person who invented it. If you liked the ROT-13 example, you’ll like the extended examples in this book. The TDD patterns in Part III are particularly good.
