The Accountability Problem

October 18, 2025

This is a transcript of my keynote presentation at the Agile Cambridge conference in England on October 2nd, 2025. The topic was “The Accountability Problem.” How do we define software department accountability so our business partners don’t do it for us?

Thanks for having me. I’m very happy to be here in Cambridge. This is my first time visiting, so I spent the afternoon Tuesday doing some sightseeing, including a lovely ride down the River Cam. I was delighted to learn yesterday that I had Simon Wardley to thank for chauffered punt rides, including the completely fictional story I was told about the mathematical bridge.

One of the things I love about Cambridge is its rich history. Of course, lots of history is important when you have...

...this monster eating up every second.

That’s the Chronophage outside of Corpus Christi college, if you aren’t familiar with it, and much more impressive in person than in my terrible vertical picture with window glare.

Before we get going, I should explain my context. You’ll hear a lot of advice at this conference, and how much that advice is relevant to you has a lot to do with how much their context matches yours.

I’m currently VP of Engineering at OpenSesame, and for the 23 years prior to that, I was a consultant. As VP, and as a consultant, I specialize in late-stage startups: entrepreneurial organizations that were successful enough that they were able to grow. These are companies with a product mindset that value entrepreneurial thinking, but they’re also trying to grow up and be “real companies,” and they’re trying to figure out how to do that without losing their entrepreneurial edge.

So that’s the context of my material: entrepreneurial companies building software products that they sell. If you’re not in that situation, I encourage you to mine my talk for ideas, but don’t try to apply it blindly. And if you are in that situation... well, mine my talk for ideas, and don’t try to apply it blindly!

A few more disclaimers. All the substantive content of this talk—the words, diagrams, examples, and so forth—were created with my actual meat brain, without any AI. Large images have been sourced from various locations, and are credited in the bottom left corner.

I’ve also dressed up some of the slides with decorative AI-generated images from ChatGPT 5, like that rapper holding a stop sign. If there’s one thing GenAI is good at, it’s embellishment.

I should also mention that, although I work for OpenSesame, I’m not speaking for OpenSesame. I created this talk on my own time, and I’m technically on vacation right now. The opinions I express are my own.

Anyway, as I was saying, one of the things I love about Cambridge is its rich history. I’m sure you’ve all heard several times by now that the university was founded back in 1209, by people fleeing [waves hand dismissively] the other university. In comparison, my home town of Astoria, Oregon, which is the oldest permanent settlement on the west coast of the US, was founded in 1811. I think that’s last Tuesday by British standards.

Part of the history surrounding Cambridge is this man: LP Hartley. He was born in Cambridgeshire in 1895, although he never went to Cambridge University. He went to... the other one. But, despite that choice, he went on to become a successful novelist.

His most famous novel is “The Go-Between.” It begins with a wonderful opening line:

“The past is a foreign country: they do things differently there.”

And that connects us back to Cambridge. The University of Cambridge Press published this book in 1985. It’s by David Lowenthal, and it created an entire sub-genre of history called Heritage Studies. It’s still in print today, in a revised edition.

The concept of this idea is that, although the past informs the present, the present also informs the past. Our thoughts and actions today extend from events that occurred in the past. But, at the same time, our understanding of the past is colored by our thoughts and actions today.

The past is a foreign country. They do things different there. But we can’t visit the past. We can’t see what they did differently. We can only interpret what they’ve left behind.

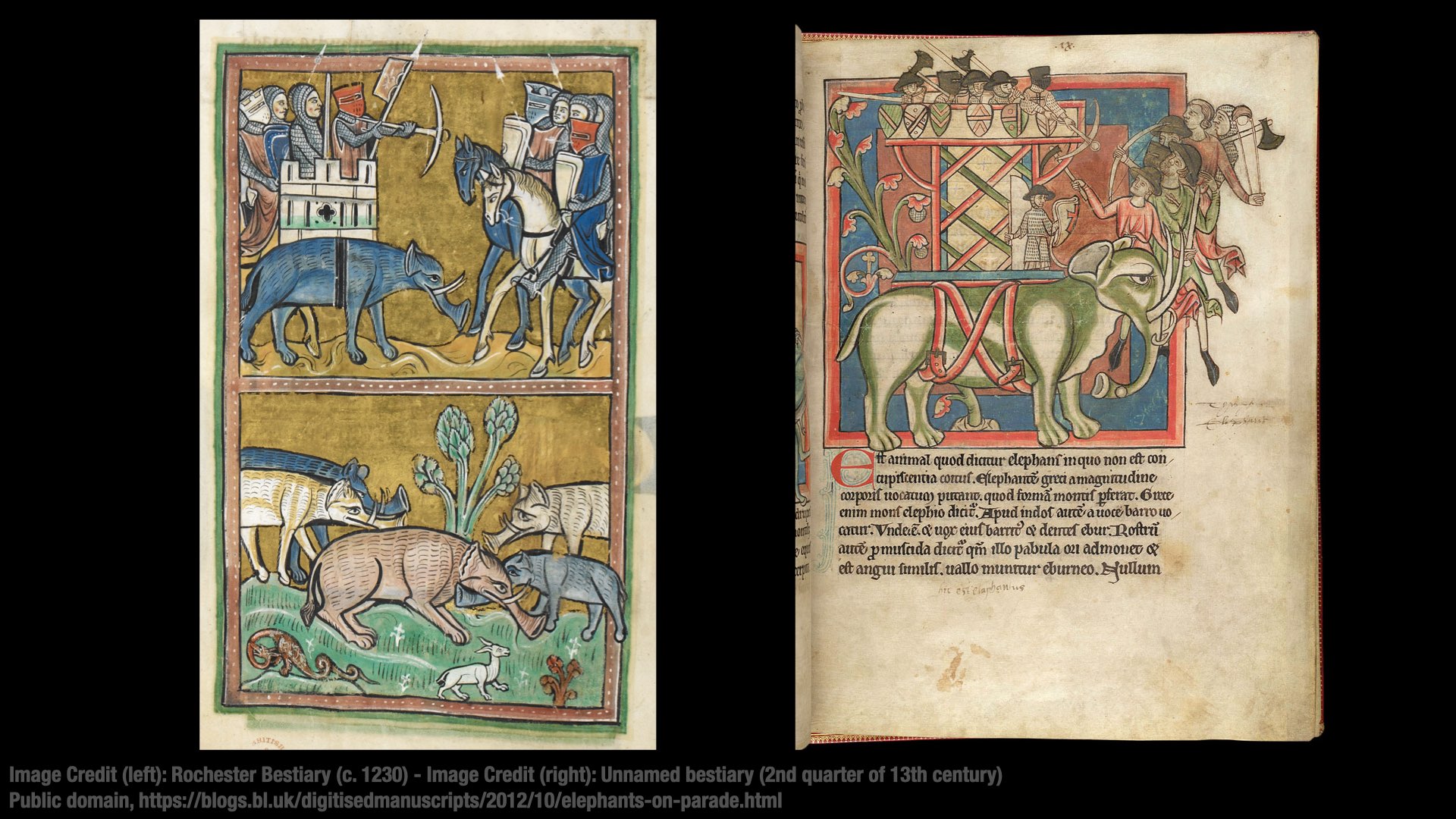

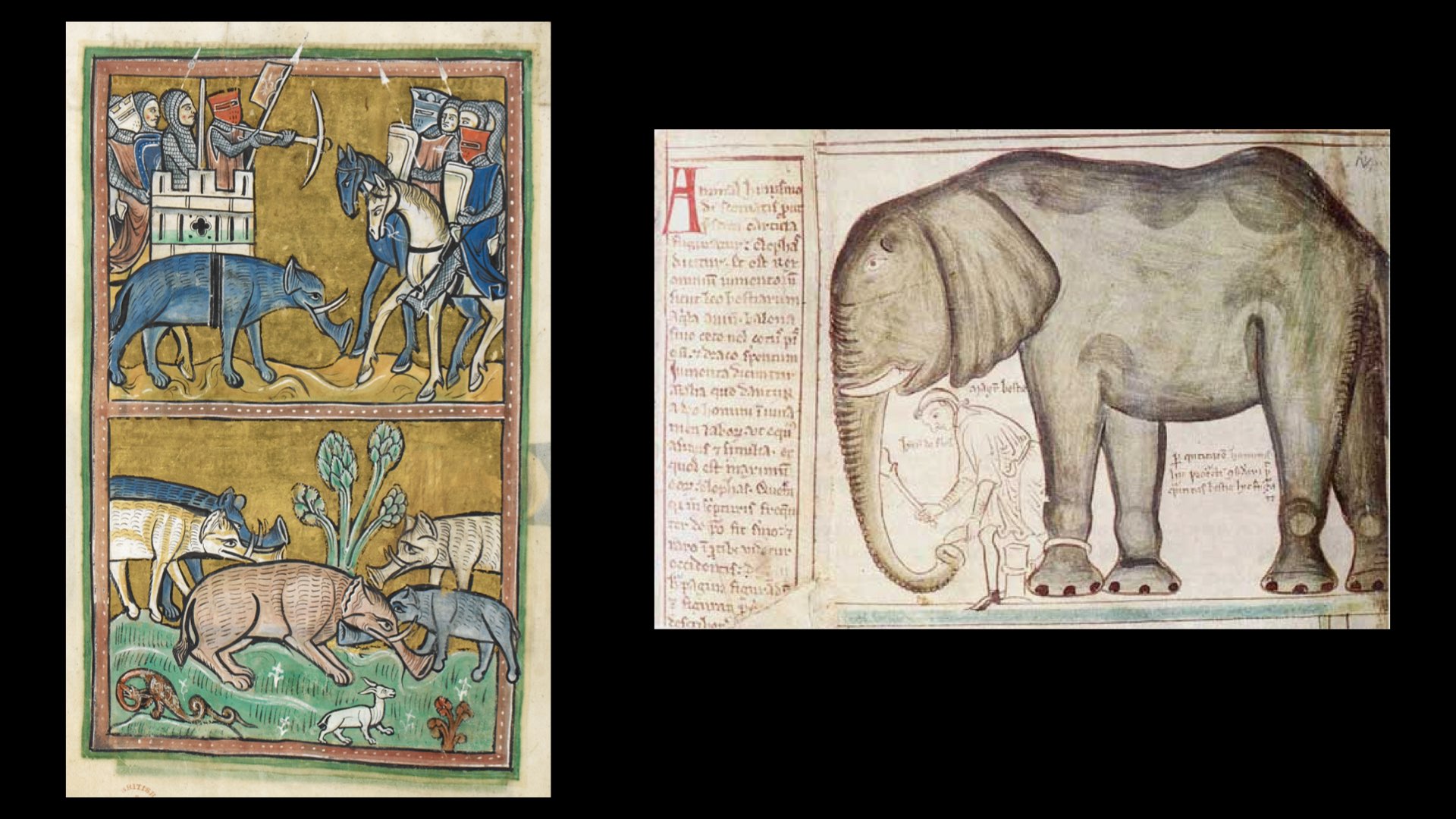

And like medieval scholars drawing elephants they’ve never seen, we make those interpretations through the lens of our own biases.

I love these medieval drawings of elephants. They’re so delightfully strange.

But I’m not showing you these pictures to make a point about how difficult it is to draw an elephant when you haven’t seen one.

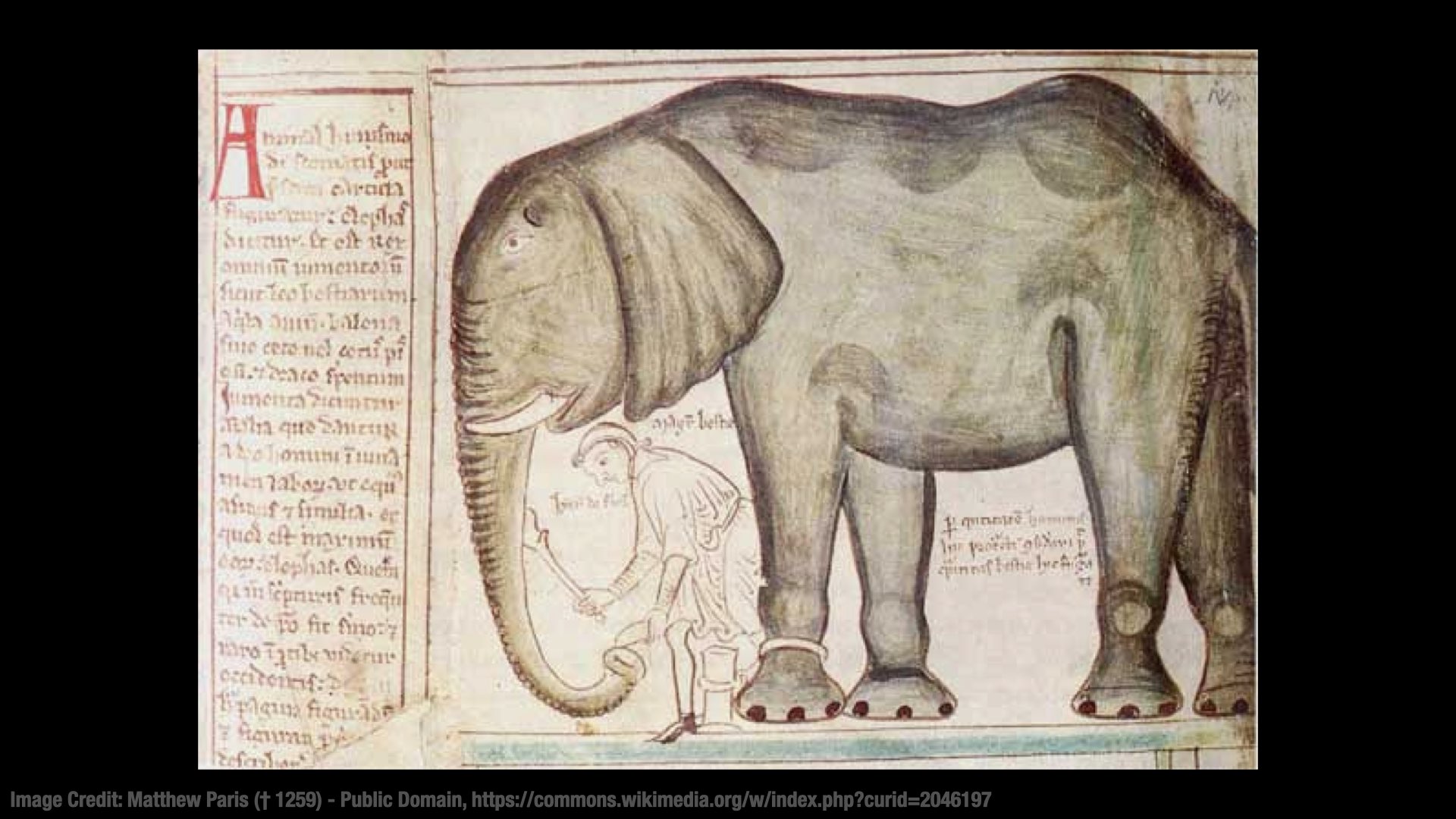

If you go back to Corpus Christi, where they have the Chronophage—not right now! I’ll start talking about software soon, promise. Anyway, at Corpus Christi, they have Matthew Paris’ Chronica Majora. It contains this drawing of an elephant. You might assume that it came much later, because it’s so much more accurate. But all of these drawings were created around the same time, in the 13th century.

It’s quite the difference, isn’t it?

I’m not showing you these images to make a point about medieval monks. I’m actually showing them to make a point about your biases. In the modern era, we expect images to be true to life. We have cameras that give us nearly perfect representations of the world. But realism isn’t what medieval monks were always trying to accomplish. Religion and metaphor were a central part of their lives, to a degree that I think we in the modern world have trouble understanding.

The elephants on the left aren’t really elephants. They’re a way of presenting a moral lesson about your place in the world. The image serves that story. It’s not there to teach you about elephants. It’s there to teach you about God.

So if your first reaction to these elephants was to laugh at those ignorant medieval monks... then perhaps you’ve fallen prey to your biases. The elephant doesn’t look like an elephant because the metaphor was more important than the reality.

The past is a foreign country. They do things differently there.

[beat]

The past informs the present, but the present informs the past. We can’t help but to interpret it through the lens of our own experience, and those biases distort the reality of what it was actually like to live there.

This idea fascinates me, because it’s not only true of the past; it’s true of everything. Our biases and experiences influence so much of how we interpret the world.

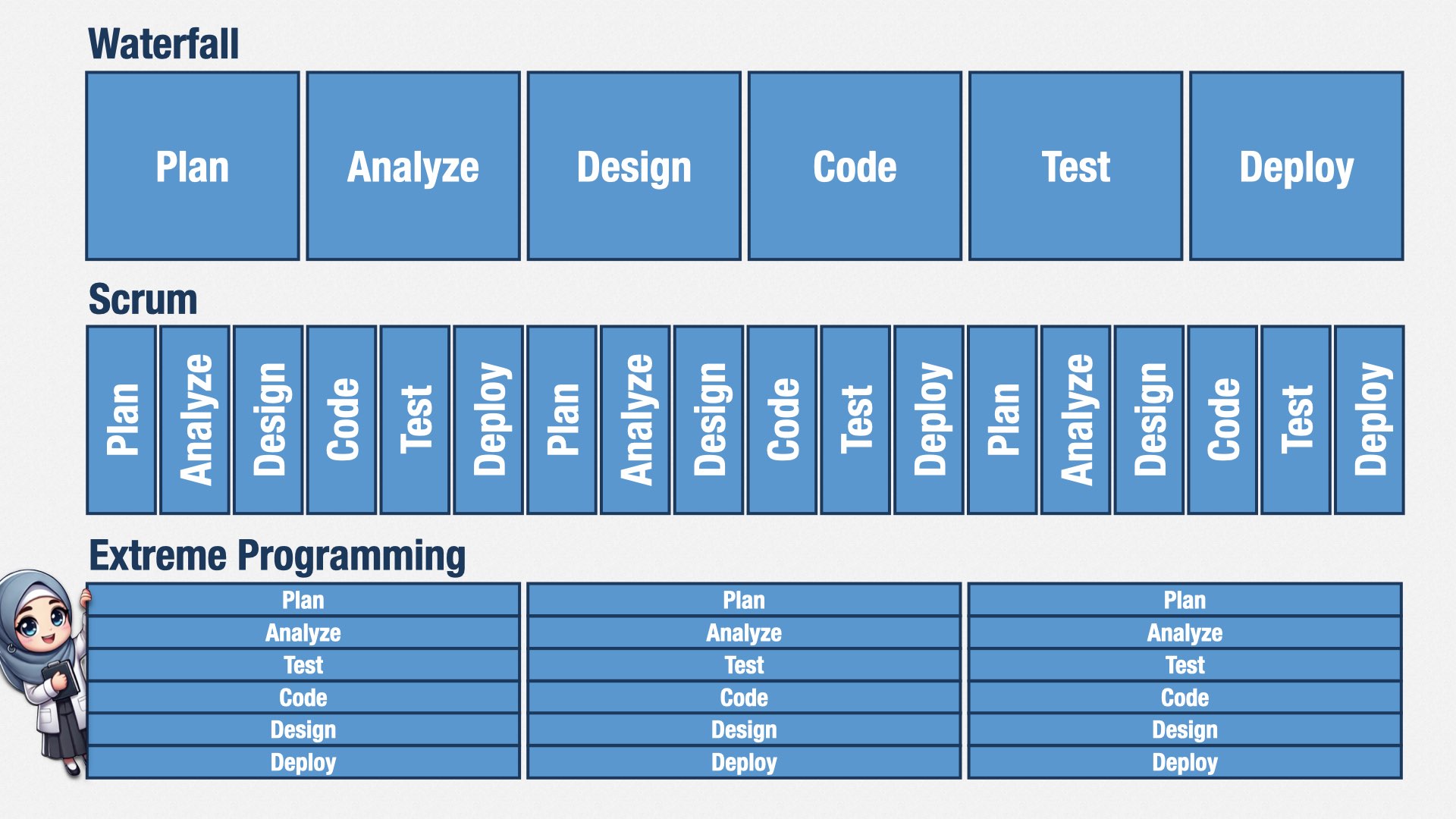

I taught teams Extreme Programming for a few decades, as a consultant. Now that I’m VP of Engineering, I’m still teaching it, in a way. One thing that’s stood out to me over the years is that the people who struggle the most to learn XP are the ones who are more senior.

Junior developers have no problem! It’s the senior developers who struggle. They have too much baggage from their preconceptions.

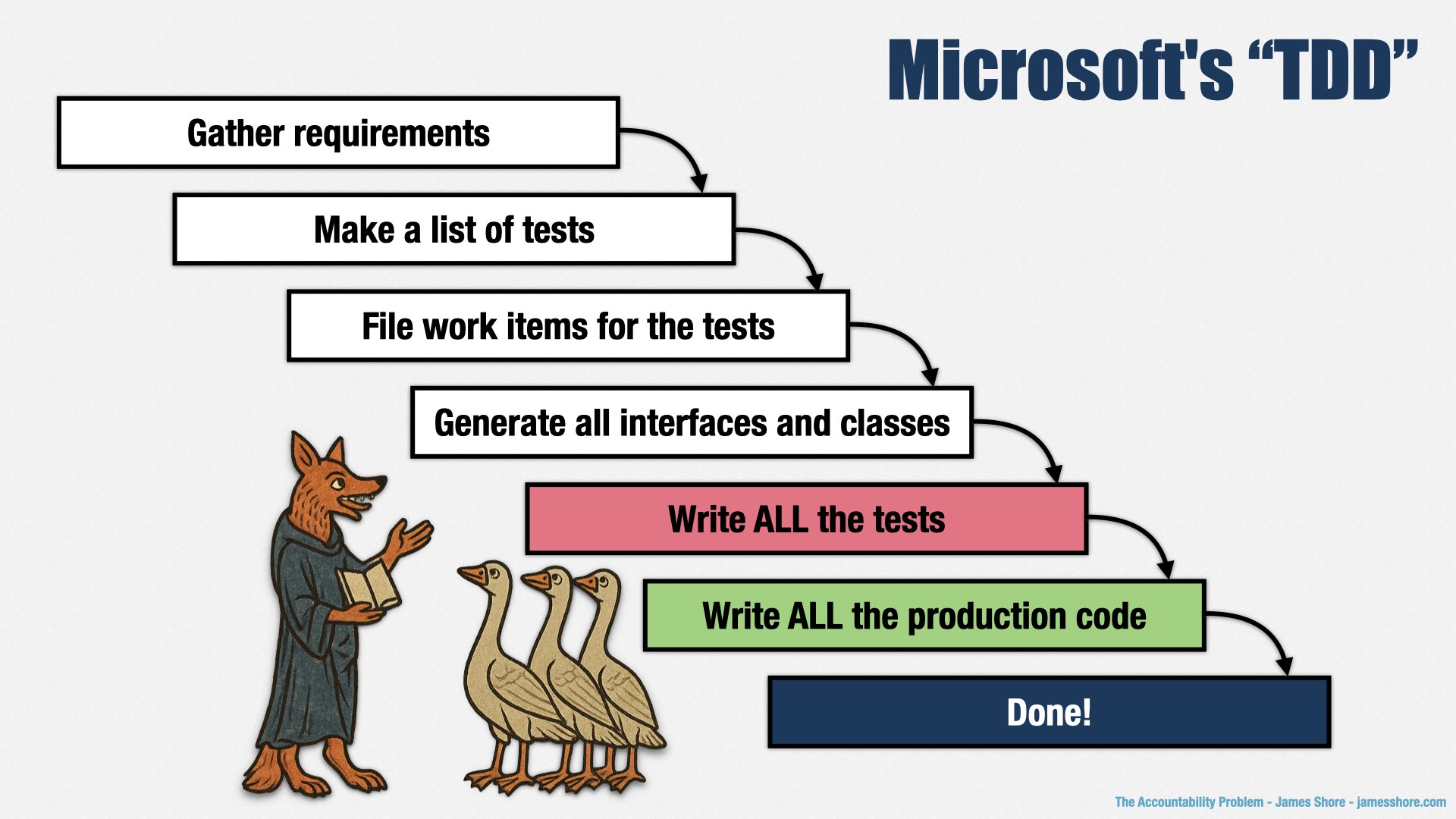

A good example of this comes from Microsoft. XP was popular in the early 2000s, and practices like test-driven development, which come from XP, were entering the mainstream. So Microsoft published a set of “Guidelines for Test-Driven Development.”

There was a big backlash, and Microsoft took their guidelines down pretty quickly, because they got them terribly, ridiculously, horribly wrong. Microsoft didn’t actually practice XP, as far as I can tell, so they didn’t know that XP is a way of keeping software design simple and evolving it in response to customer needs. In XP, you don’t create your design in advance; you discover it as you go, and you focus on keeping it as simple as you can.

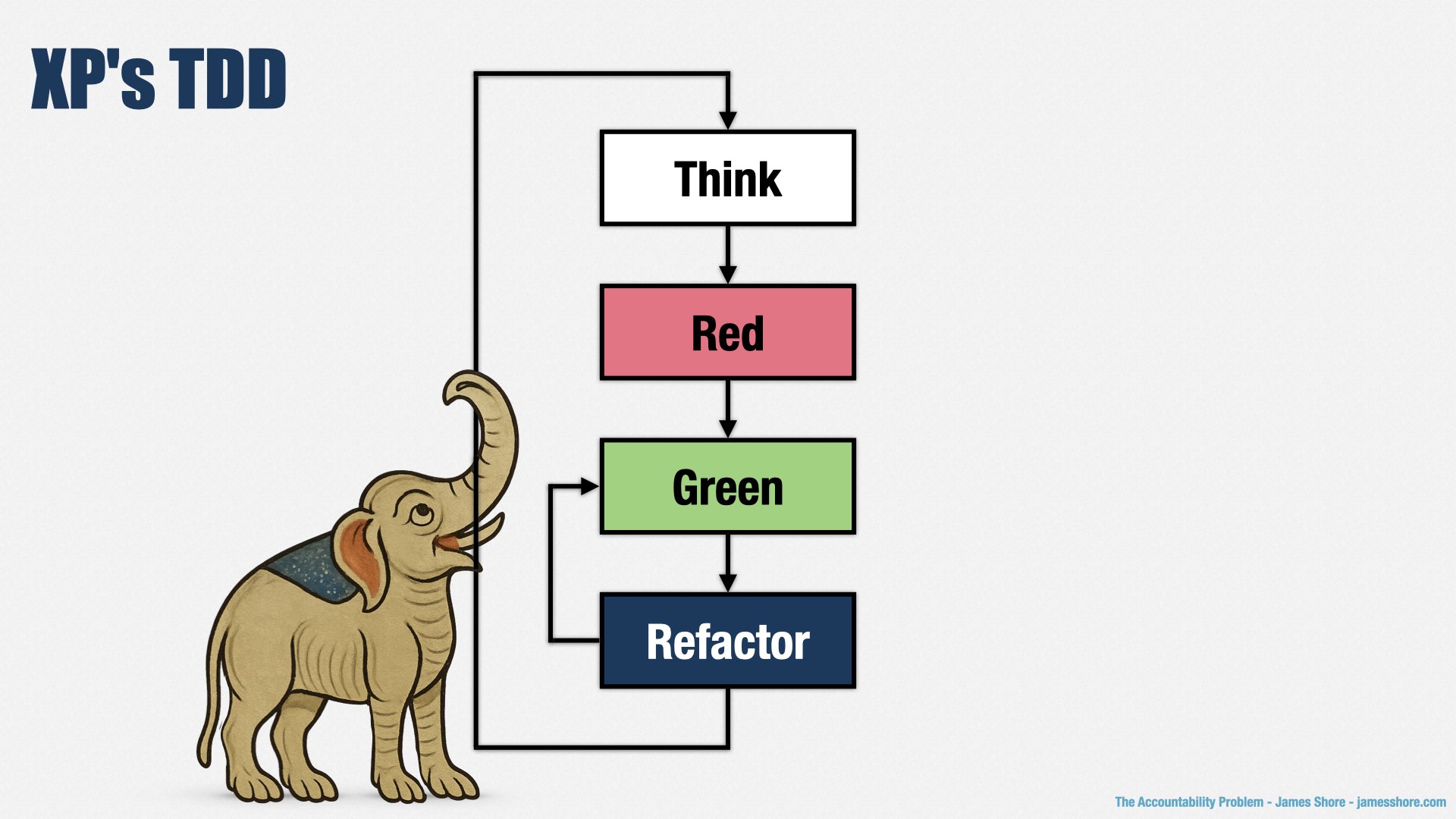

People who have practiced XP know that TDD is about tests and code evolving in step with each other, so that you learn as you go. A few lines of test code. See the tests fail. A few lines of production code. See the tests pass. A few improvements to the design. See the tests pass. A few more lines of test code. See the tests fail. And so on, and so forth, until the software is done, without following a preconceived path.

As with many companies past and present, the Microsoft way wasn’t to evolve their design; it was to come up with a software design in advance, then build to that preconceived design. And so they saw what Kent Beck and others had said about TDD and interpreted it in the only way they knew how: as a way of coming up with a software design in advance, and then building to that design. Their guidelines for TDD were to:

Gather the requirements for your new feature

Make a list of tests that will satisfy the requirements

File work items for the tests that need to be written

Generate all the interfaces and classes you’ll need—using Visual Studio, of course!

Write all the tests

Write all the production code

I’m not exaggerating! This is what they actually said. Refactoring, iteration, learning as you go—key ideas of XP and TDD—nowhere to be found.

Microsoft’s approach to TDD was the exact opposite of what TDD was about. But they were only able to interpret TDD through the lens of their corporate approach to software development. And to this day, you see this same misunderstanding about TDD repeated by people who are steeped in up-front thinking.

XP is a foreign country. We do things differently here.

[beat]

As people, we can’t help but to interpret the world through lens of our own biases. But that means that we make assumptions about the world that aren’t true, and we can’t even recognize that we’re doing it. It’s not just the past that’s a foreign country... almost everything is.

That leads to problems. And in software, one of the biggest, is...

...the Accountability Problem.

[beat]

People who aren’t software developers have probably seen more “programming” in movies and TV shows than in real life. Those shows are filled with magical people who can “hack” anything off-camera and in moments.

“The ship’s going to ram us, captain!” “Quick, hack into their retro-encabulator and reverse the polarity of their thrusters!” (frantic typing, dramatic music, camera zoooooooom) “It just barely missed us! Hoorah!”

I only wish software development was that cool.

Of course, people do know that’s fiction. Some of them might have even written code in school. But in school, people write small programs that fulfill an assignment and don’t have to be maintained.

Or maybe they’ve vibe-coded an app using GenAI.

None of these experiences bear any relationship to the modern world of software development.

TV show hackers are just another deus ex machina... quite literally. It’s lazy writing.

School projects don’t require long-term maintenance or large-scale coordination.

Unsupervised AI coding assistants feel magical, but they break down once you get past the prototype stage.

All of these things trick people into thinking that software development is about code. About hands on keyboard. But that’s not what it’s about at all.

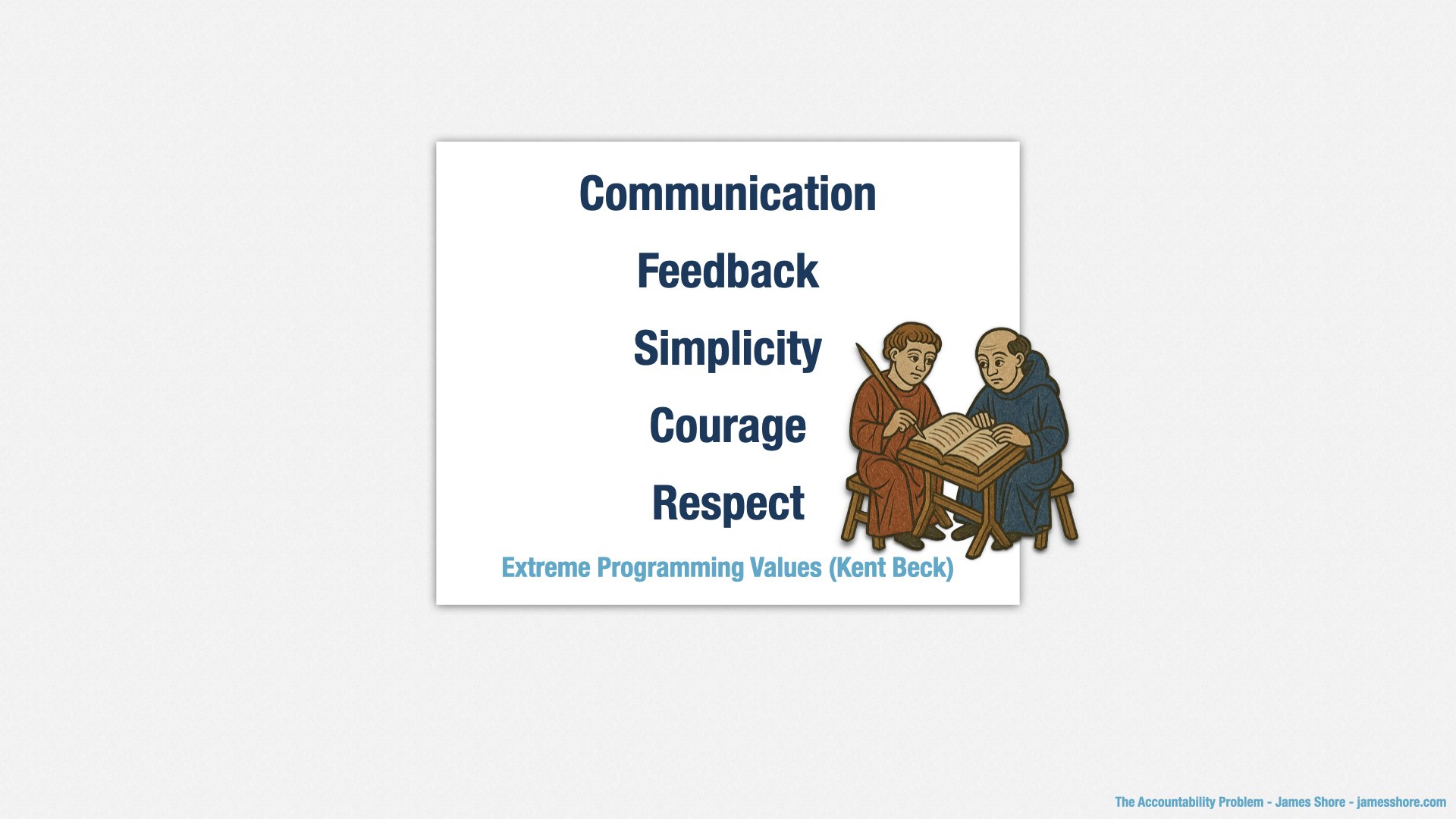

To paraphrase Kent Beck, professional software development is about...

Communication and collaboration between large numbers of people with different perspectives.

Feedback loops that enable us to tell when we’re building the right thing, and the thing right... and when we’re not.

Simplicity, because it’s our ability to understand and change software that determines timelines and cost.

Courage to do the right thing even when it’s hard, and it’s often hard.

Respect for the people doing the work and the people affected by the work.

We know that software development is a matter of discovery and coordination. But to our business partners, we’re a foreign country. They can only see us through the lens of their experience.

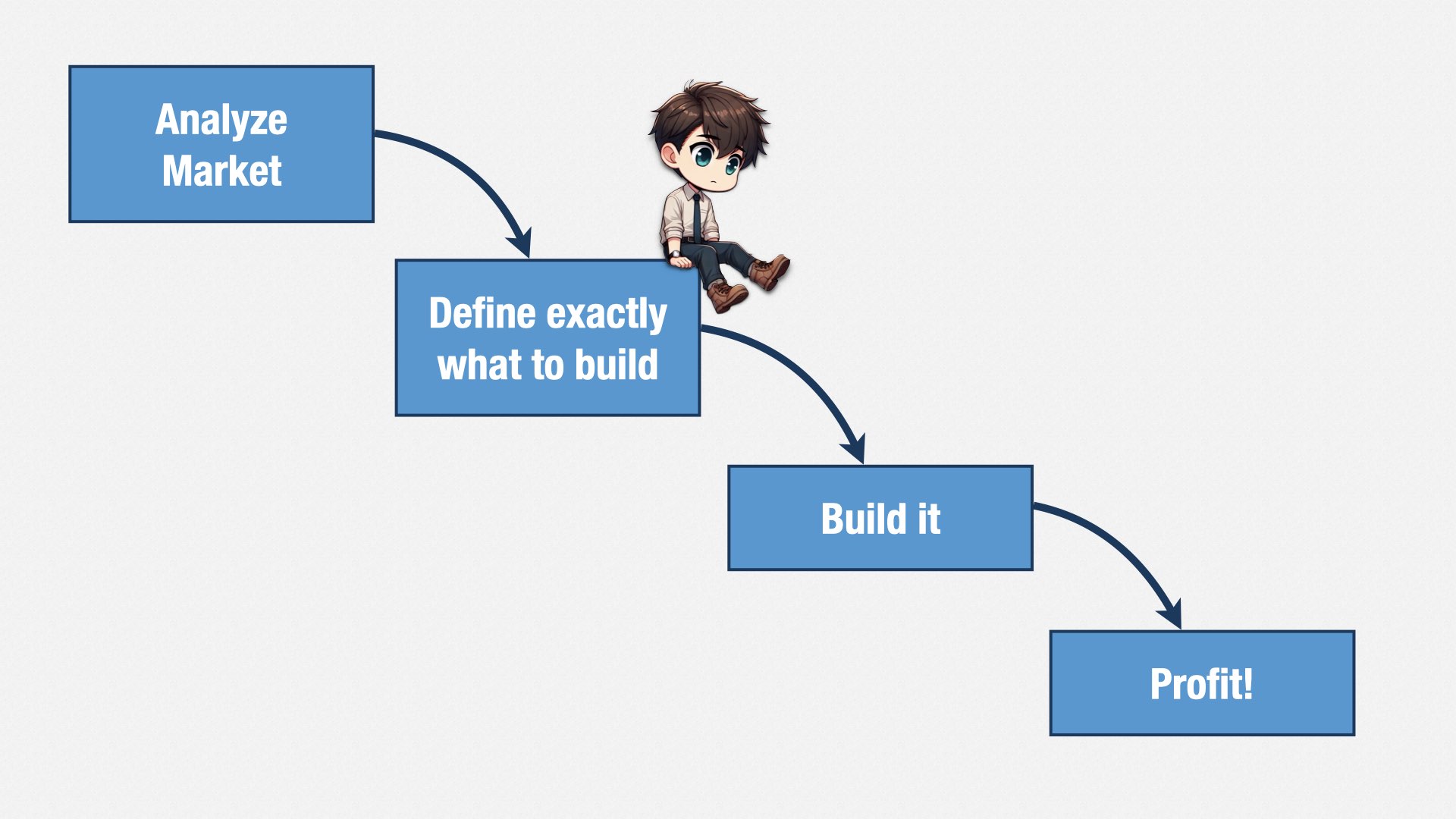

Their experience is that software development is about writing code, in the same manner that someone might do a homework assignment. It’s tedious, perhaps; time-consuming, maybe; but ultimately, a matter of buckling down and doing the assignment... following a straight path from here to there.

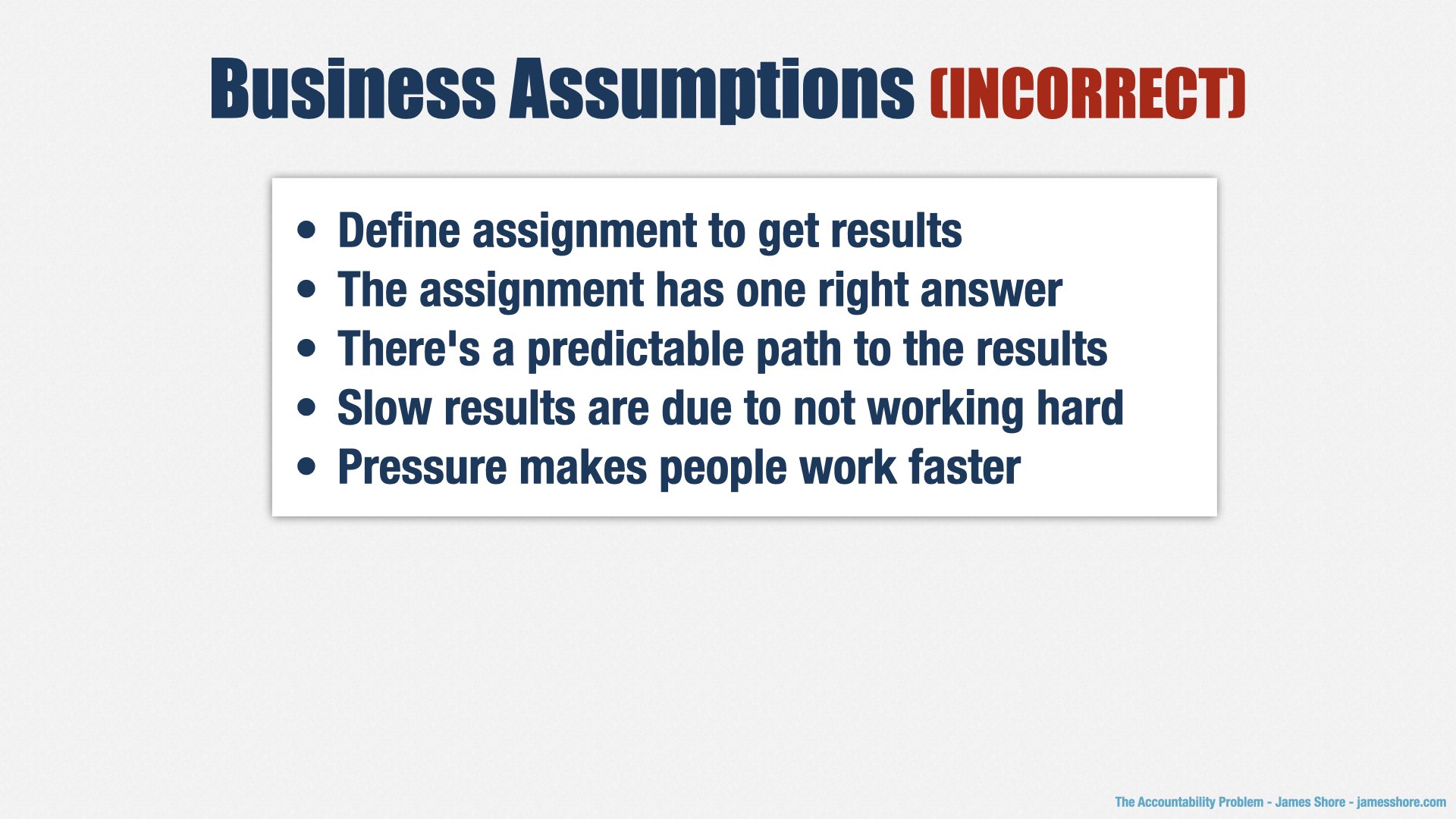

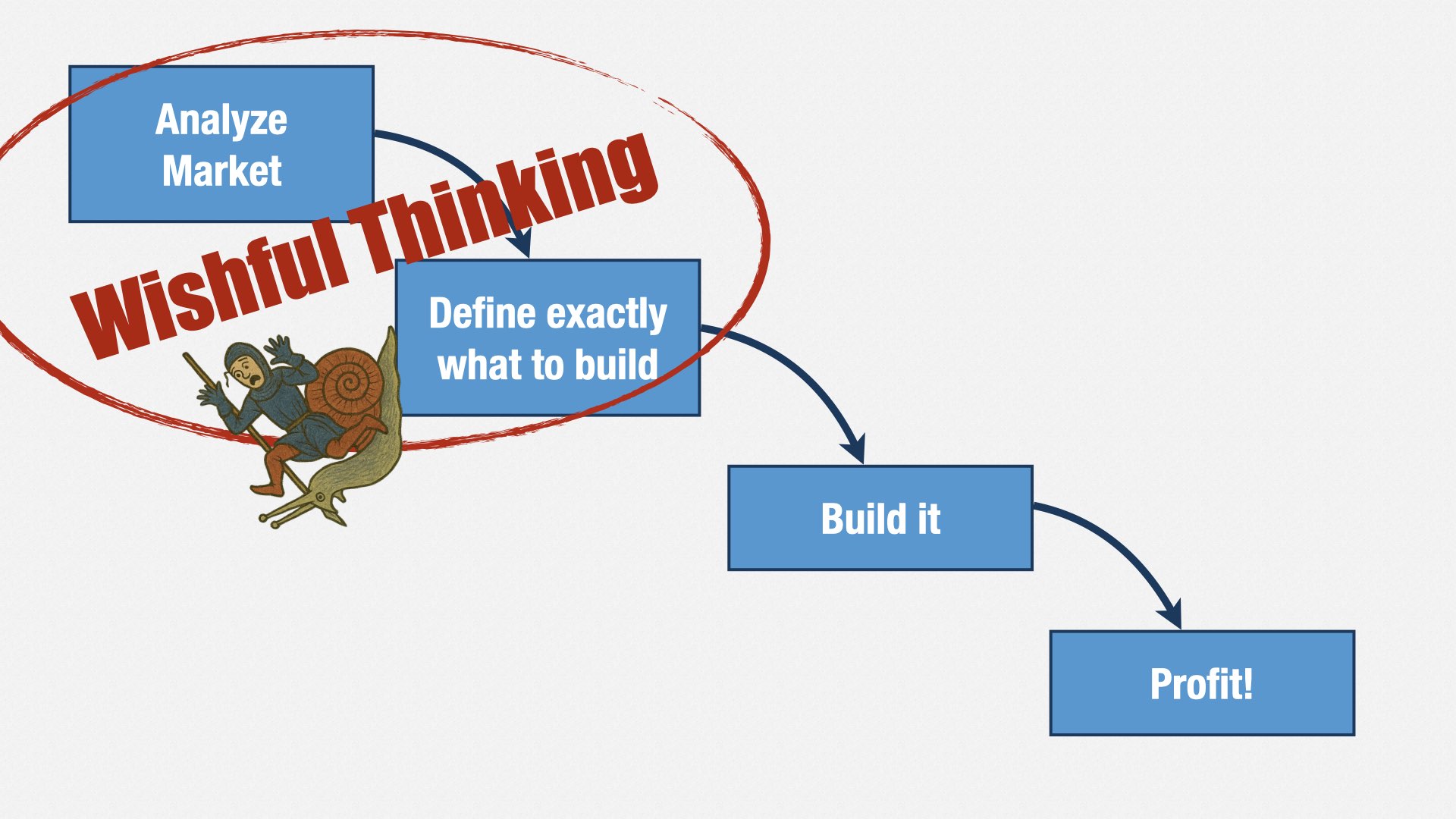

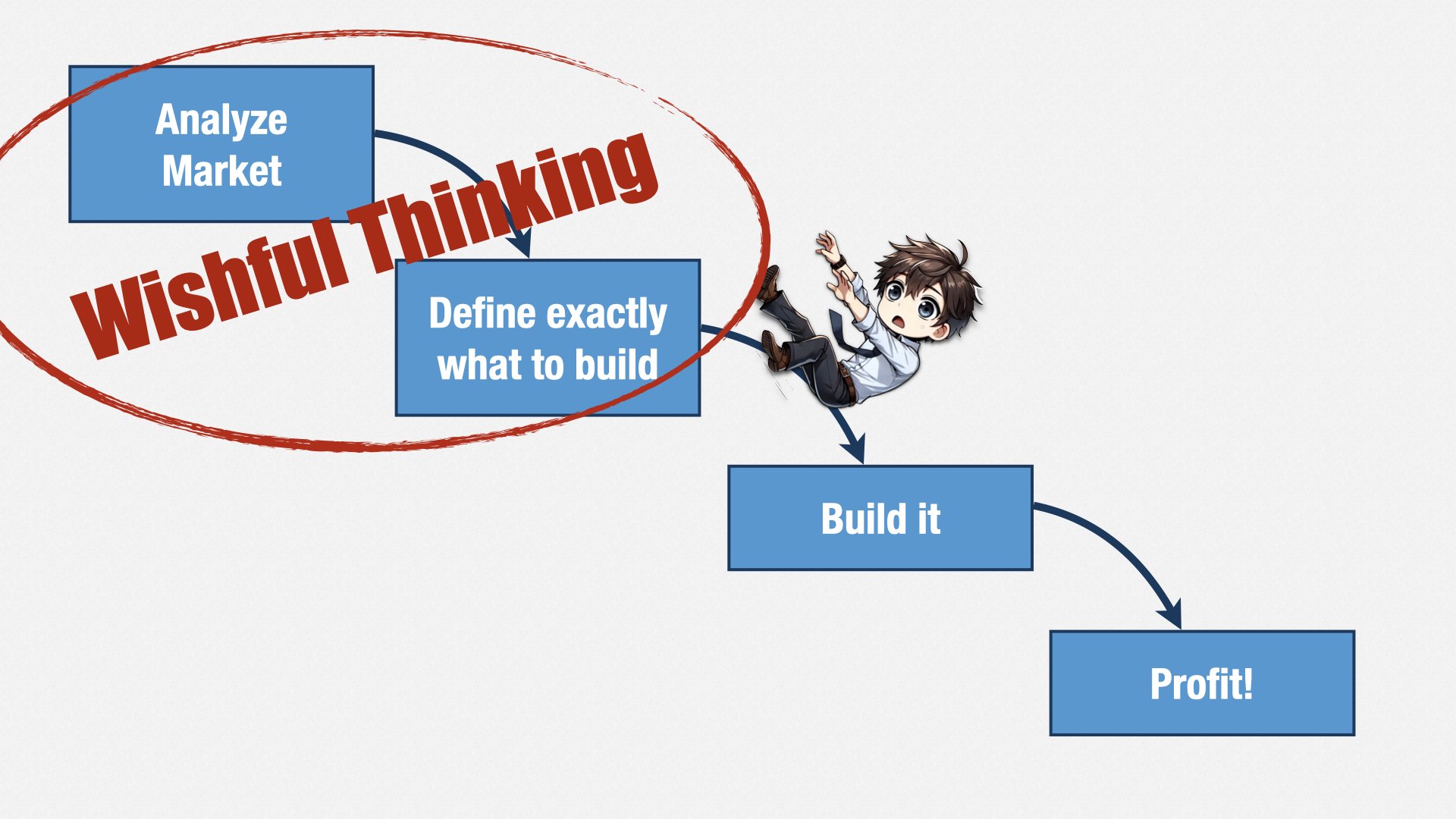

If you think this way—if you think that software development is like a big homework assignment—then you start making a bunch of assumptions.

You assume that you only need to define the assignment correctly to get the right answer.

You assume that the assignment has one right answer, and there’s a clear path to that answer.

You assume that people can tell you what that path is and how long it will take.

You assume that, when work isn’t getting done according to that schedule, it’s because people aren’t working hard enough.

And you assume that, when work’s behind, putting pressure on people will make them work harder and get it done on time.

Ultimately, you think software development looks like this [play animation]: a trip from point A to point B.

When in reality, it’s more like this [play animation]: a process of exploration and discovery, where the outcome isn’t known until you get there.

Software development is a foreign country. We do things differently here.

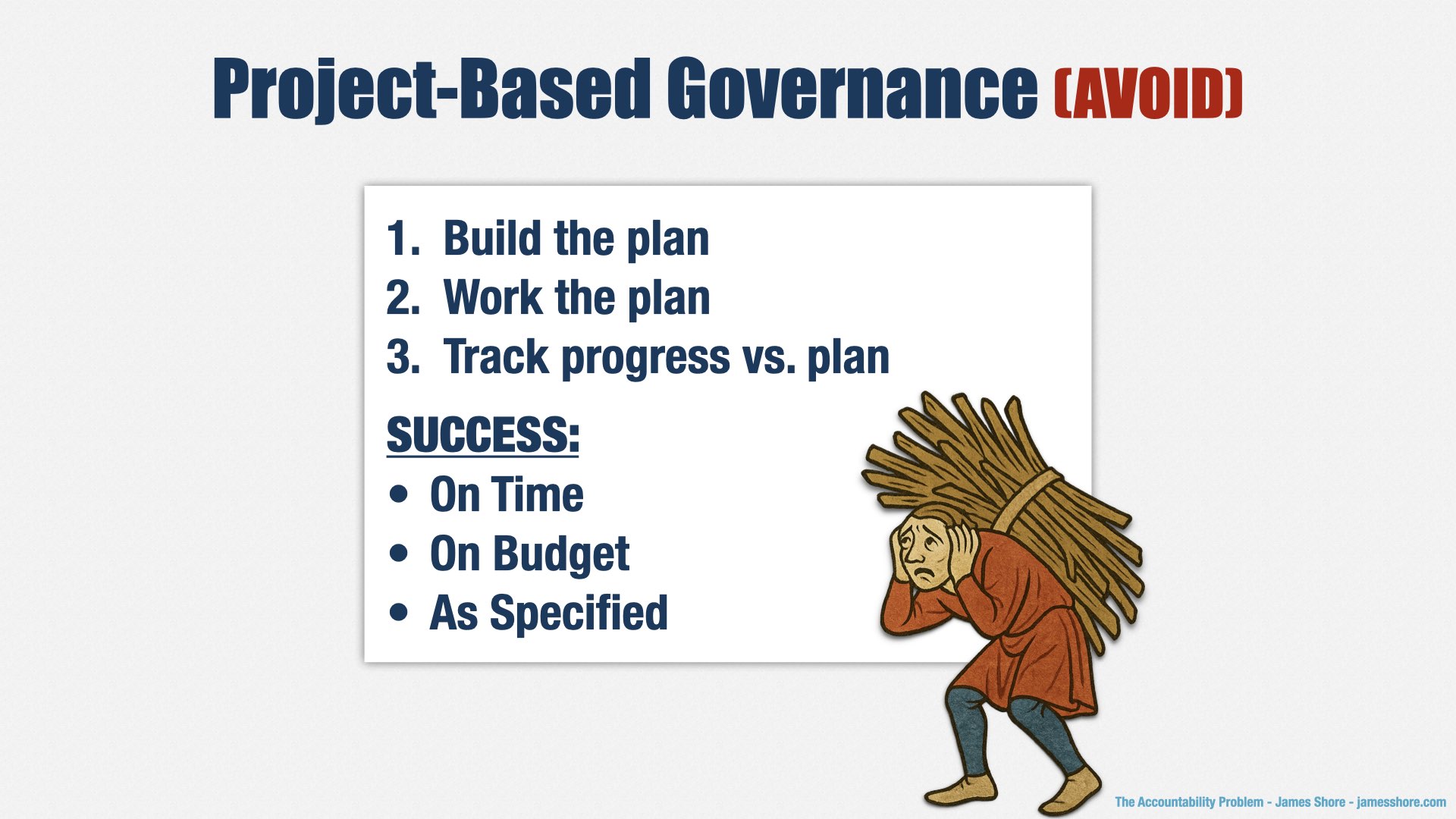

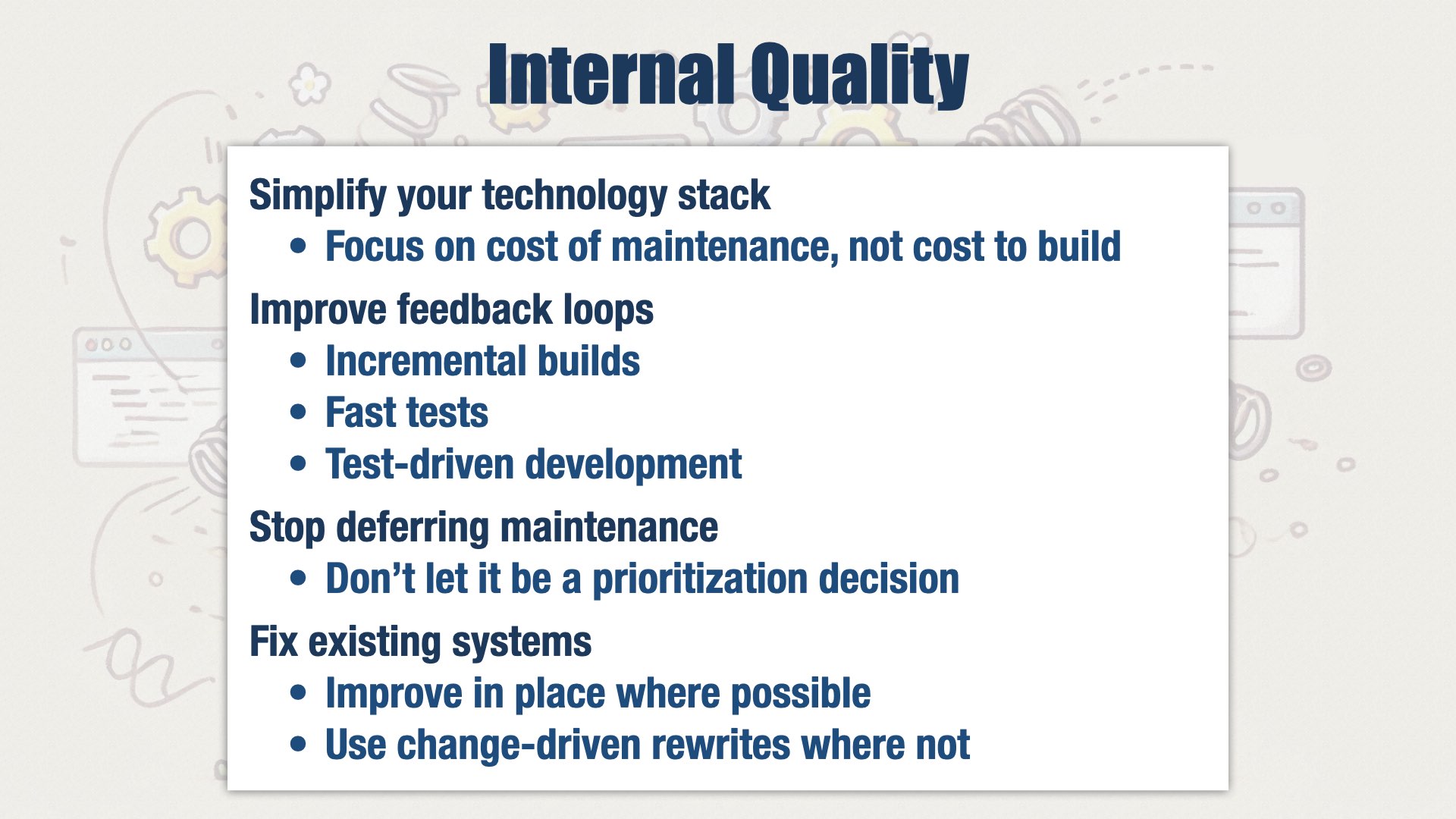

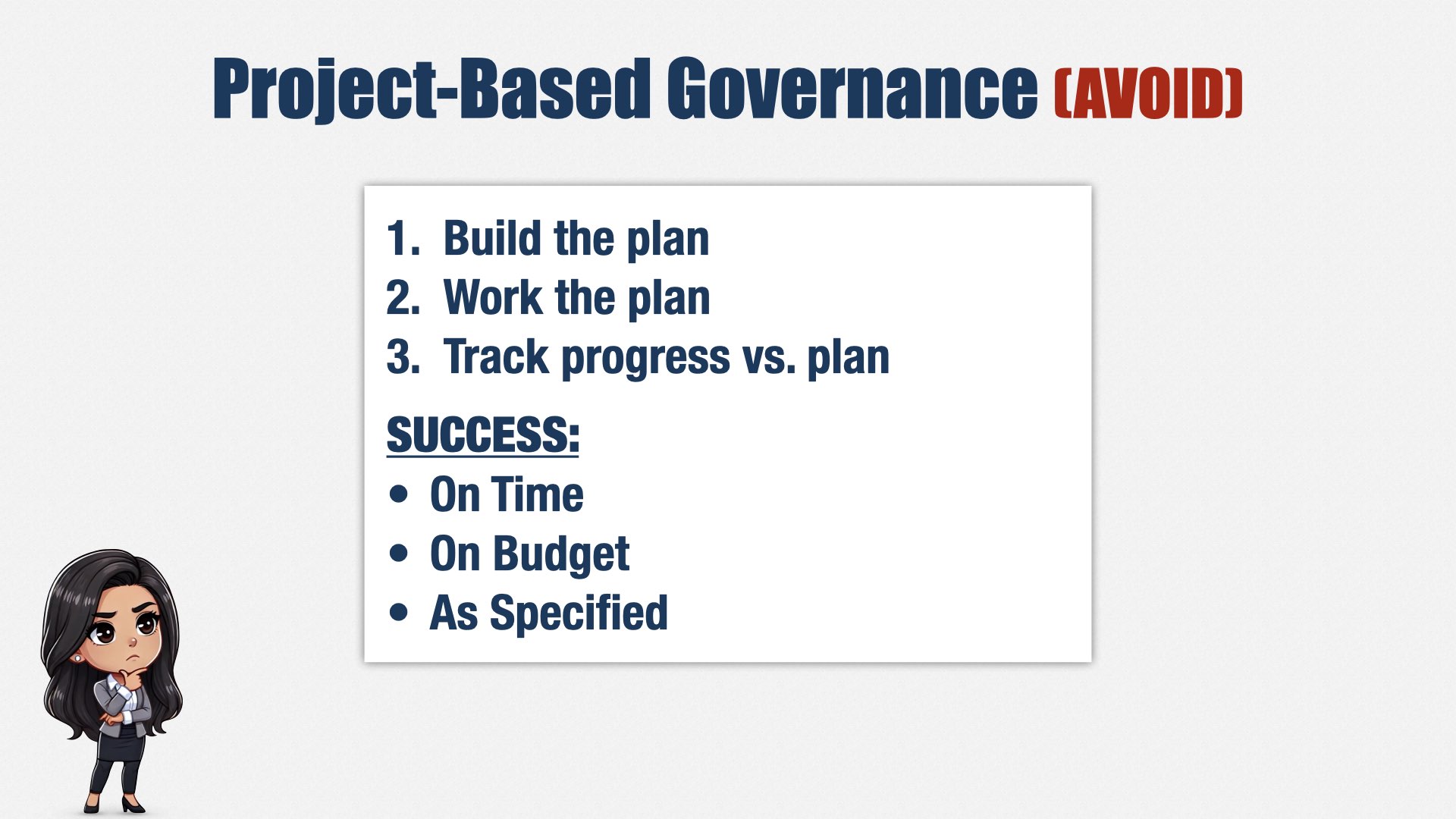

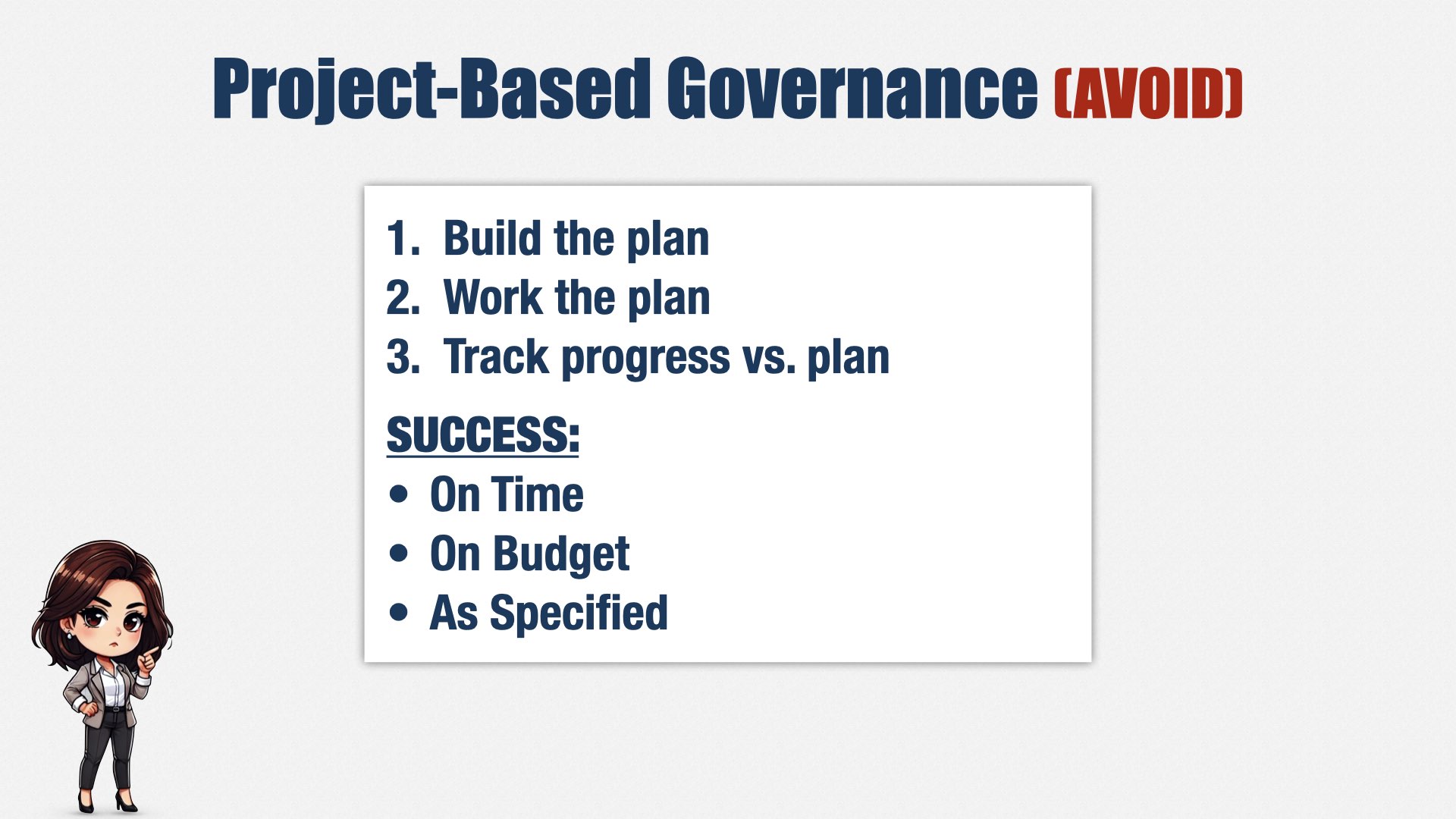

These misconceptions aren’t harmless. They extend deep into organizational structures. The biggest impact is how software development is run in most organizations. Most organizations use project-based governance. You create a plan, then you work the plan. If you execute the plan properly, you’ll be successful, and you’ll finish on time.

In this environment, it’s management’s job to make sure that the plan is created correctly, worked correctly, and that people don’t slack off.

How do you know management is doing their job? What are they accountable for?

Delivering software on time and on budget.

It’s clean, it’s neat, it’s easy to understand, and it matches people’s misconceptions about software development.

And it results in bad software.

The whole premise that we can define the assignment in advance is incorrect. Software development is a process of discovery—of iteration and refinement. We learn as we go, and that changes our plans.

This is an Agile development conference. You’ve heard it all before. I’m not going to belabor the point.

But our business partners haven’t heard it before, or if they have, it’s counter to their experiences. Like us seeing medieval pictures of elephants, like Microsoft with TDD, they can’t help but interpret the world through their own biases. And those biases lead to project-based governance.

In their minds, anything less... is a lack of accountability.

So what can we do about this?

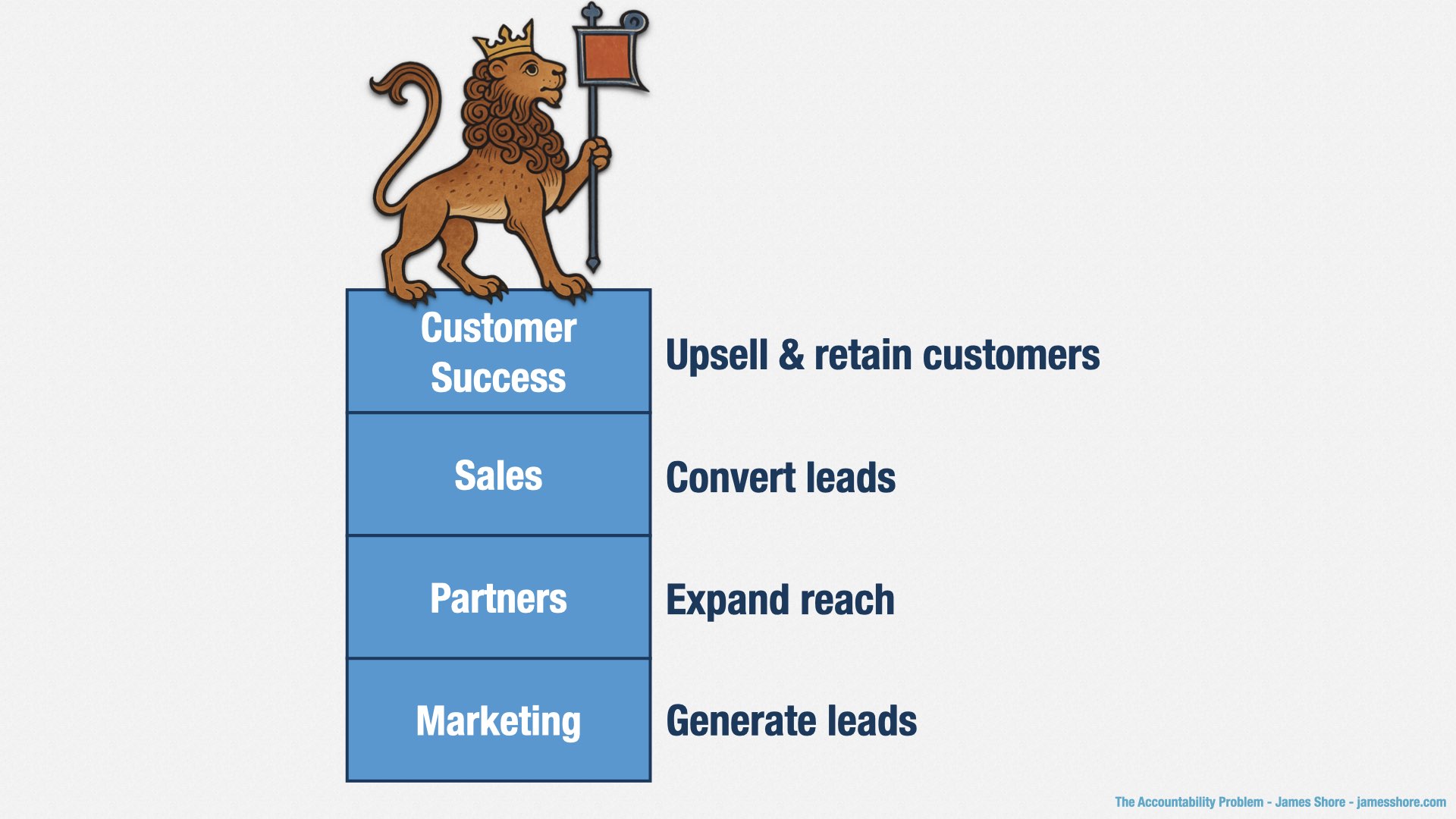

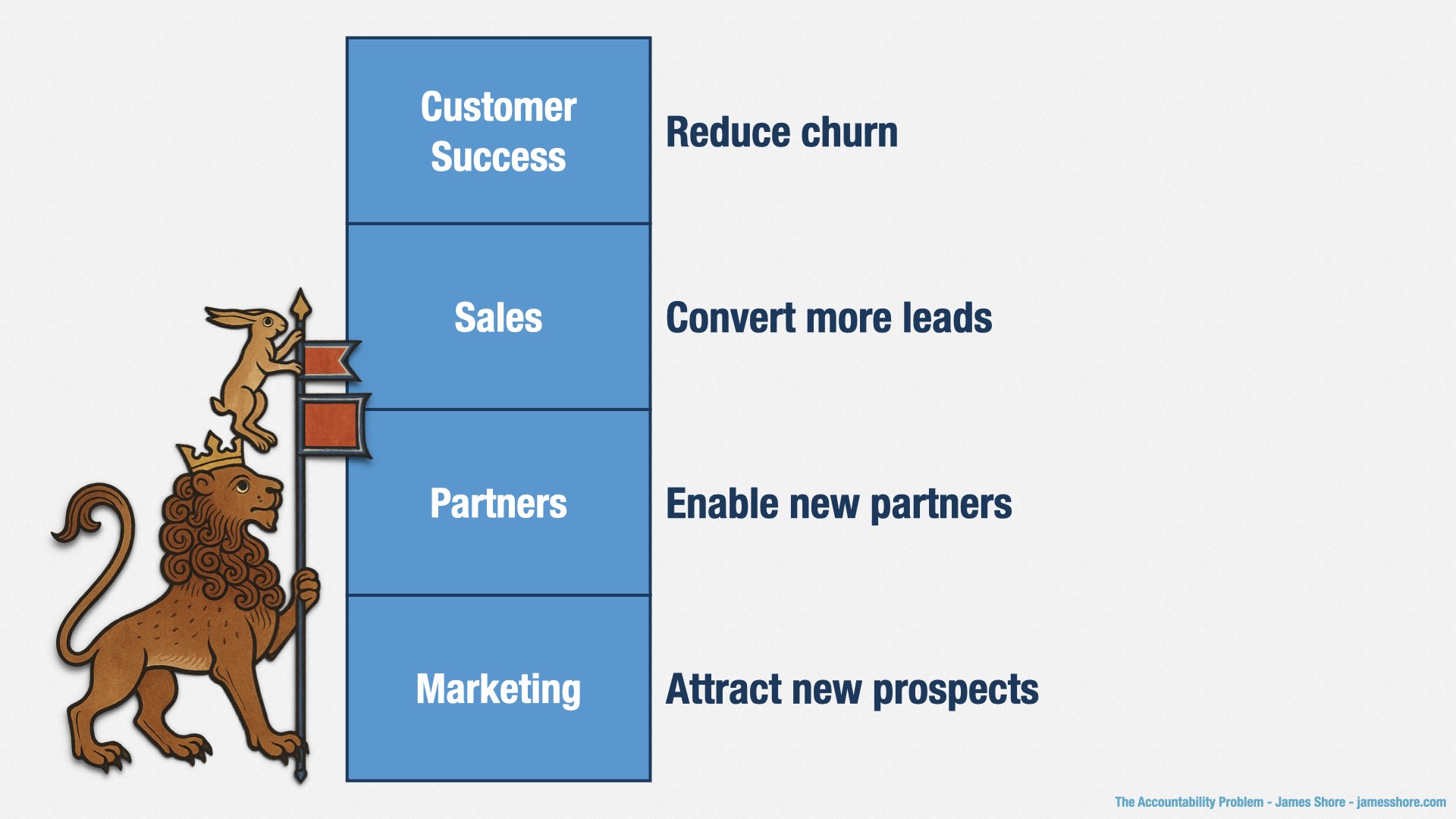

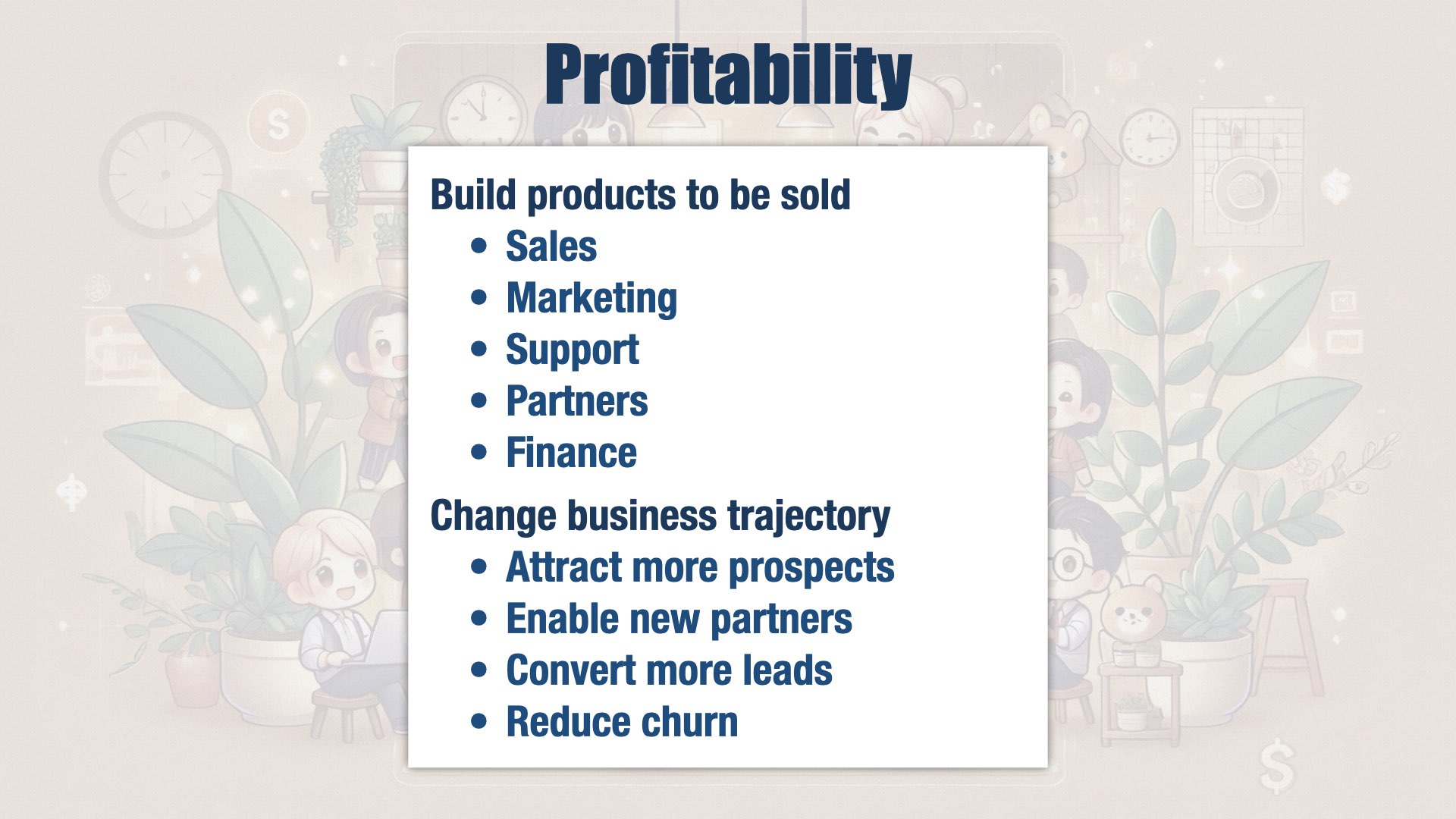

Ultimately, accountability is about being responsible for a set of results. At the executive level, everybody has to be accountable.

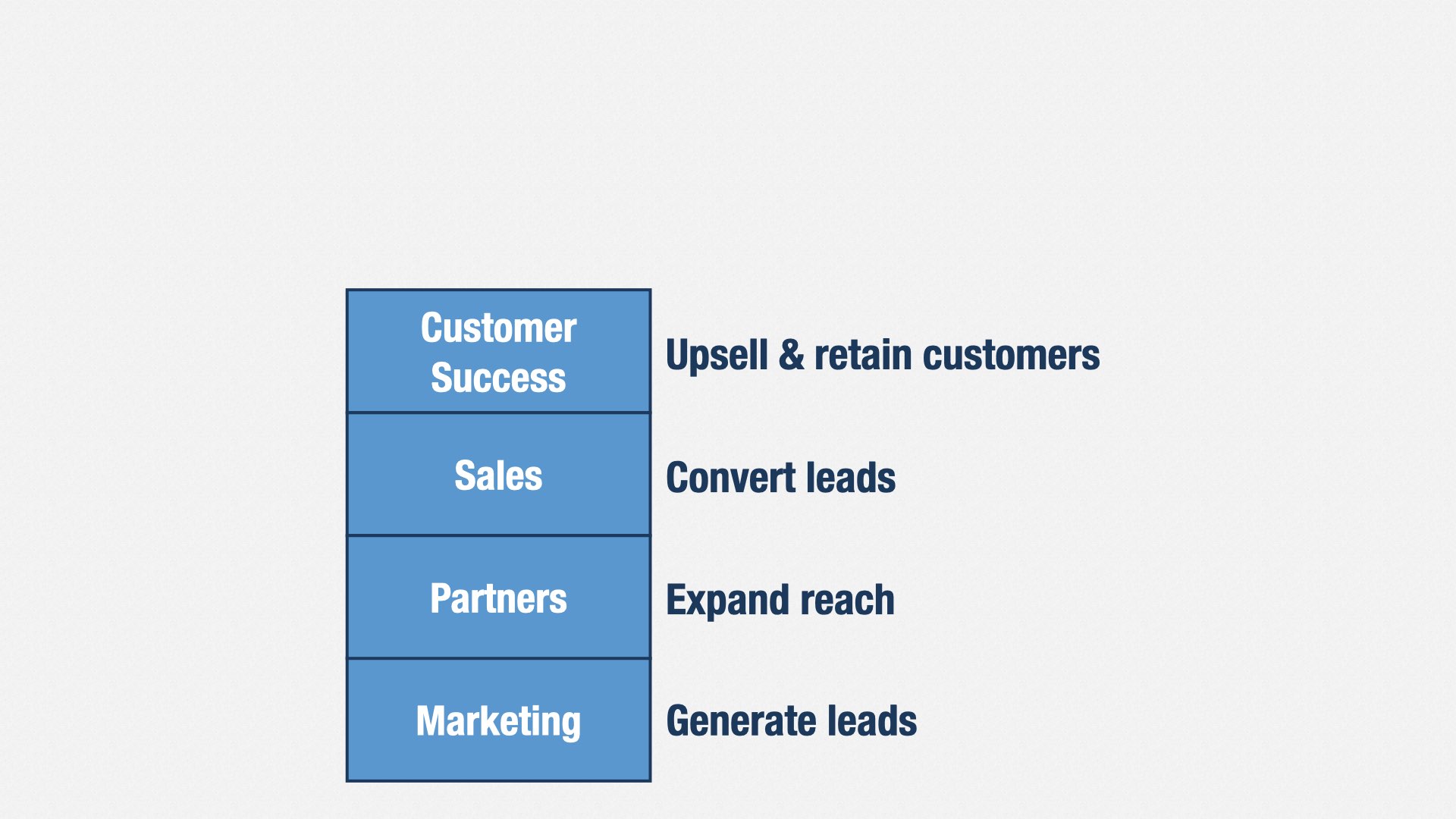

Marketing is responsible for generating leads for your Sales department. They say how many qualifying leads they’re going to create, and they’re accountable for having done so.

Partners also generates leads, or even sales, from people who are using complementary products and services. They’re accountable for bringing in partners, and for the revenue those partners generate.

Sales converts leads into paying customers. They’re accountable for the revenue generated by those customers.

Customer Success takes care of your customers. They’re accountable for retention, and for generating additional revenue from upsells.

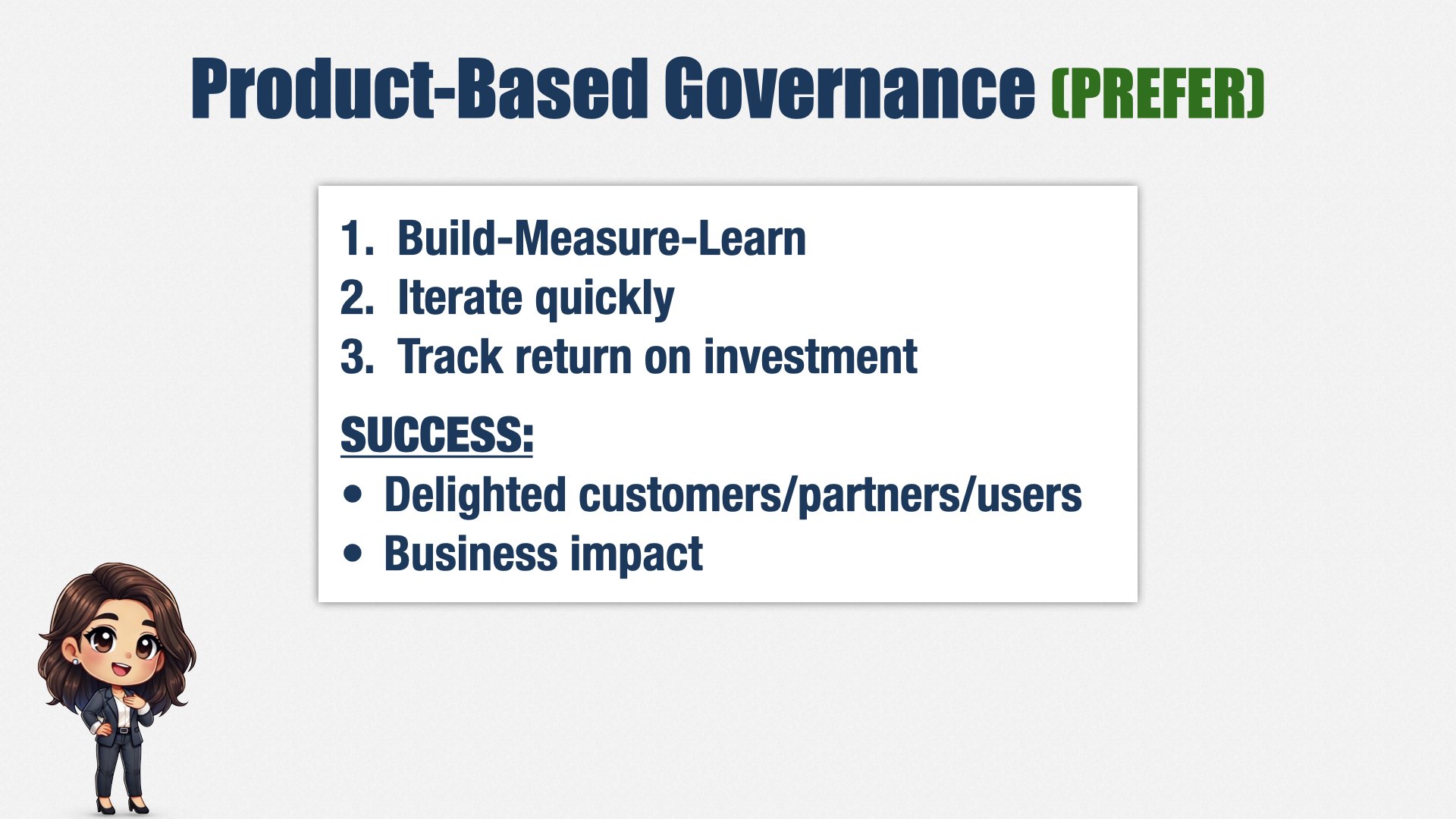

Everyone is accountable for doing what they say they’ll do, including us in software development. But there’s something different about how everyone else is accountable. Did you notice?

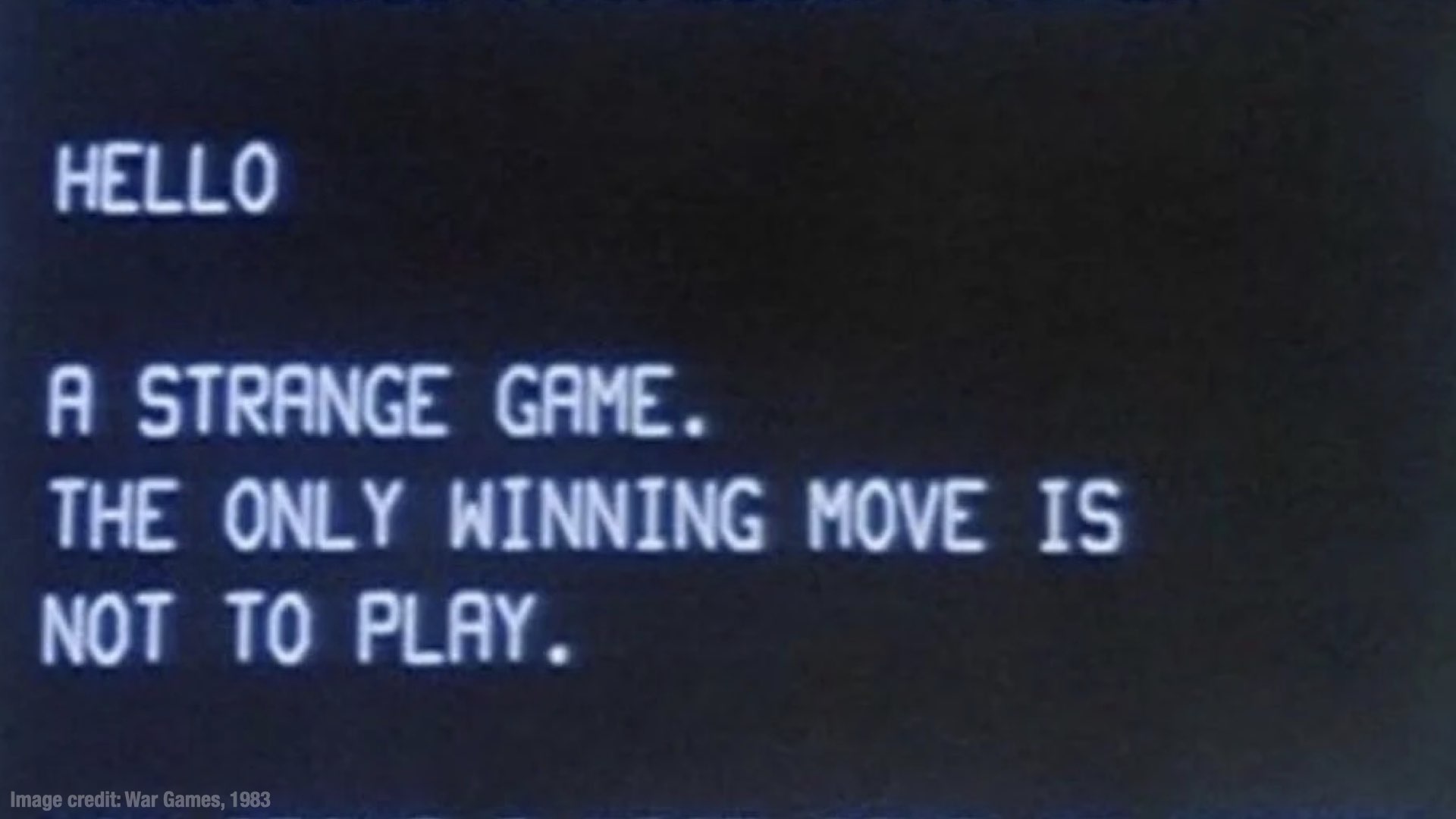

For other departments, accountability is about the results they’re bringing to the organization, not the work they’re putting in. Sales isn’t saying, “we’re going to land customer X on date Y.” Everybody knows that sales take time, and things go sideways. So Sales says “we don’t know exactly which customers we’re going to land, or when, but overall, we’re going to generate X dollars of revenue.” Same for Marketing, and Partners, and Customer Success. We in software are the only ones who have to predict exactly what and when.

Our business colleagues aren’t unreasonable. They understand that things go wrong. But they also believe, deep in their hearts, that if you aren’t accountable, you won’t put forth your full effort.

And if we don’t define how we’re going to be accountable, they’ll do it for us, in the only way they know how. Which features are you going to deliver? When? If you don’t deliver them on time, you aren’t being accountable.

We have to change the script.

So what should we be accountable for instead? What, exactly, do we do? What results do we create?

[beat]

We create new opportunities. Let’s say that the trajectory of your company is to grow its annual revenue by $10mm per year. Our job is to increase that rate of growth, to $12, $15, $20mm per year. Every time we ship a new feature, we should be increasing that rate of growth.

Our features should open up new markets, allowing Marketing to generate more leads.

We should provide useful APIs, allowing Partners to build new relationships.

We should respond to market trends, allowing Sales to convert more leads.

And we should fix the problems that get in customers’ way, reducing churn and increasing upsell.

What are we accountable for? We’re accountable for improving our companies’ trajectories. Every dollar invested into software development, other than keeping the lights on, should be reflected in permanent improvements to the value your company creates. That value may not be literal dollars or pounds; it may be helping to cure malaria or fighting climate change. But however you define value, the purpose of our work is to change that trajectory for the better.

It’s easy to say that we’ll be accountable for improving our companies’ trajectories. But how do we actually demonstrate that we’re doing so?

It’s nearly impossible to quantify the impact of any individual feature. It takes months to see an impact from a new feature, and even then, we can’t say that feature X resulted in change in behavior Y. Let’s say churn went down by half a percent. That’s great! Did it go down because of the feature we just released? Or because of a different one? Or is it more that interest rates just dropped and we hired an amazing new director for our customer success department?

This is why it’s tempting to look at when you’ll deliver a feature. It’s easy to measure.

But ultimately, features are a means to an end, not the end itself. There’s an old cliché that people don’t want a shovel, they want a hole in the ground. And they don’t want a hole in the ground, they want a building foundation. And they don’t want a building foundation, they want a nice big stable. And they don’t want a stable, they want war elephants that make their enemies say things like, “Carthago Delende Est!”

When we talk about delivering features, we’re talking about shovels when we should be talking about striking fear into the hearts of Roman soldiers.

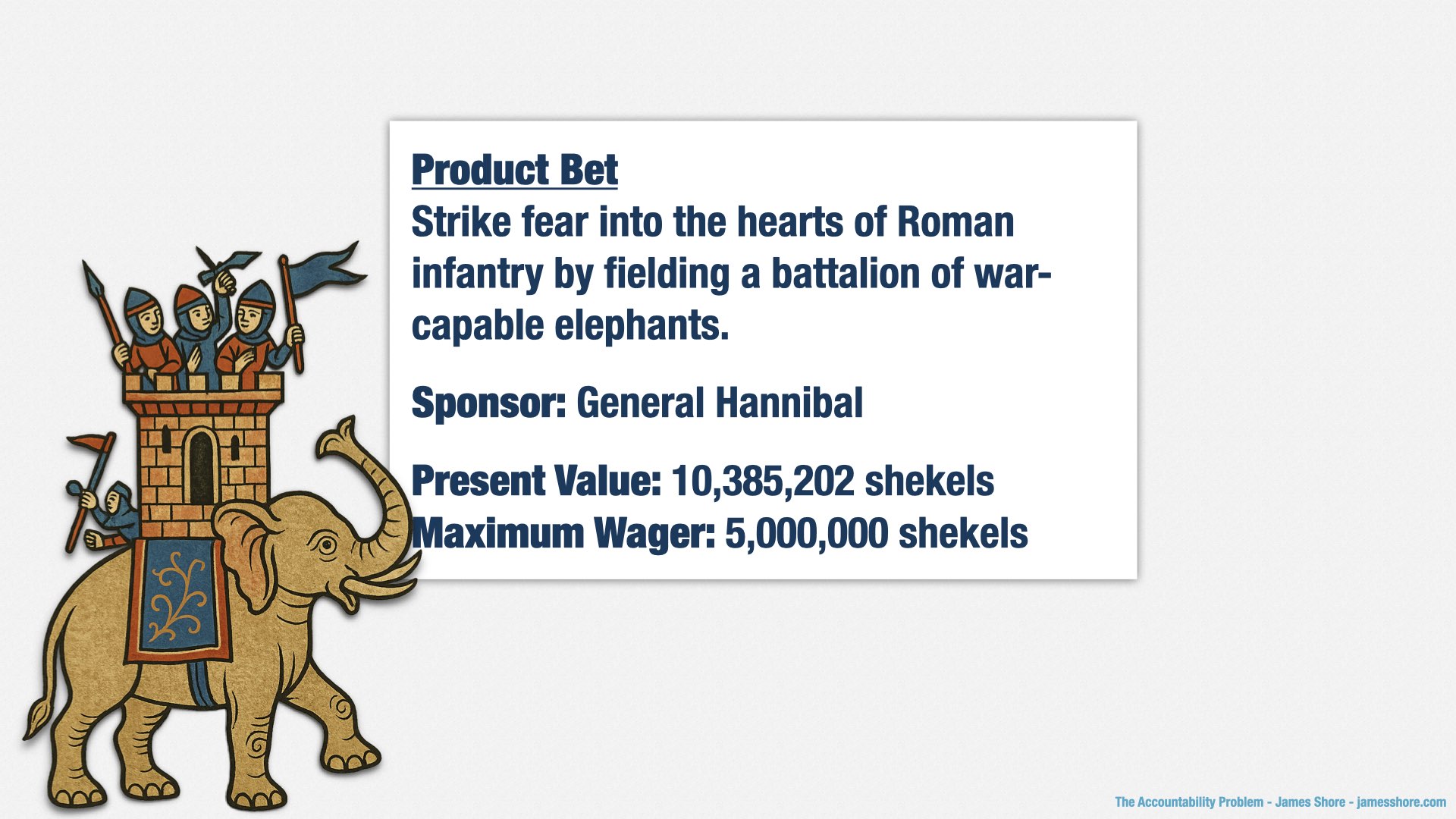

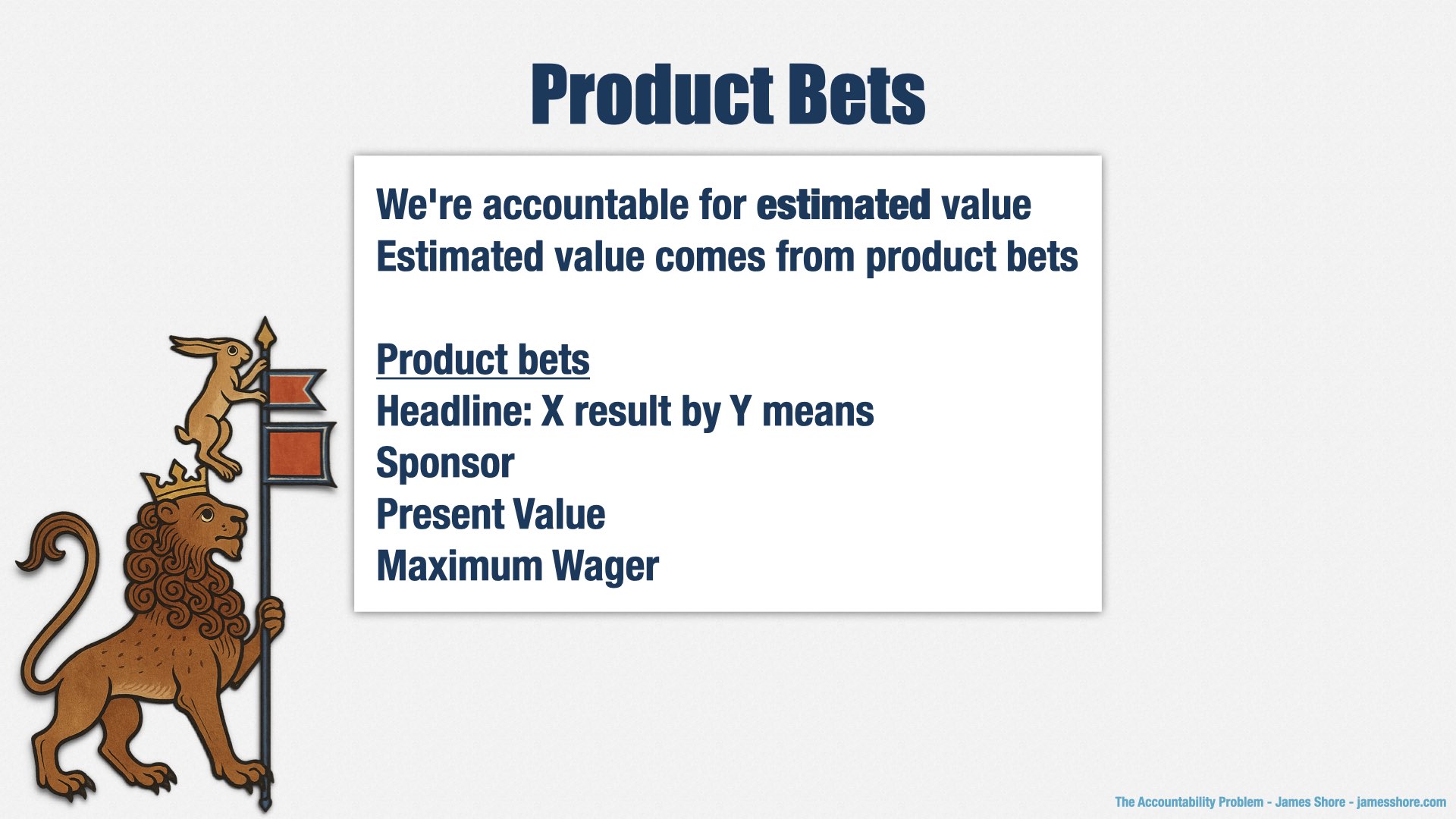

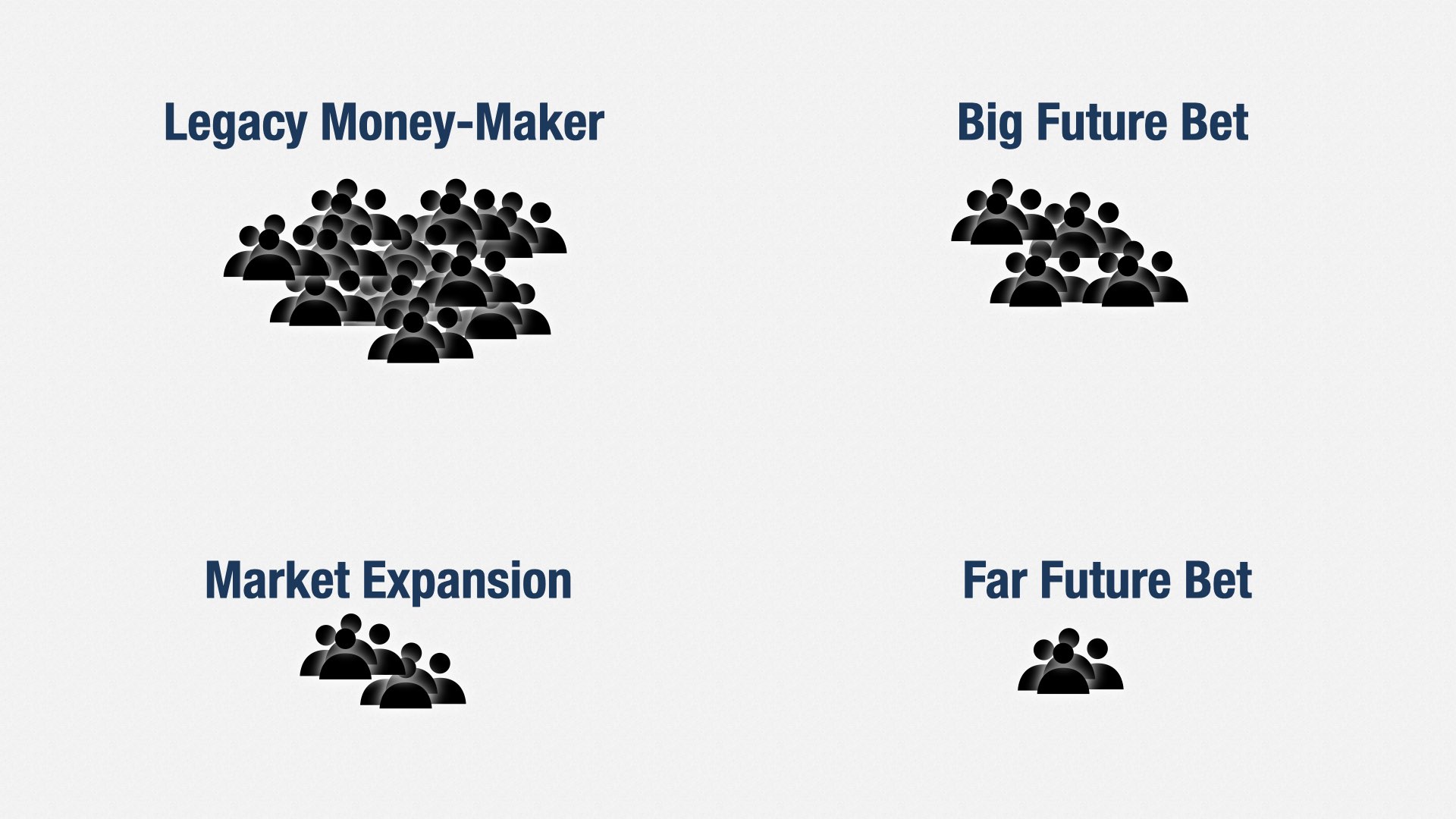

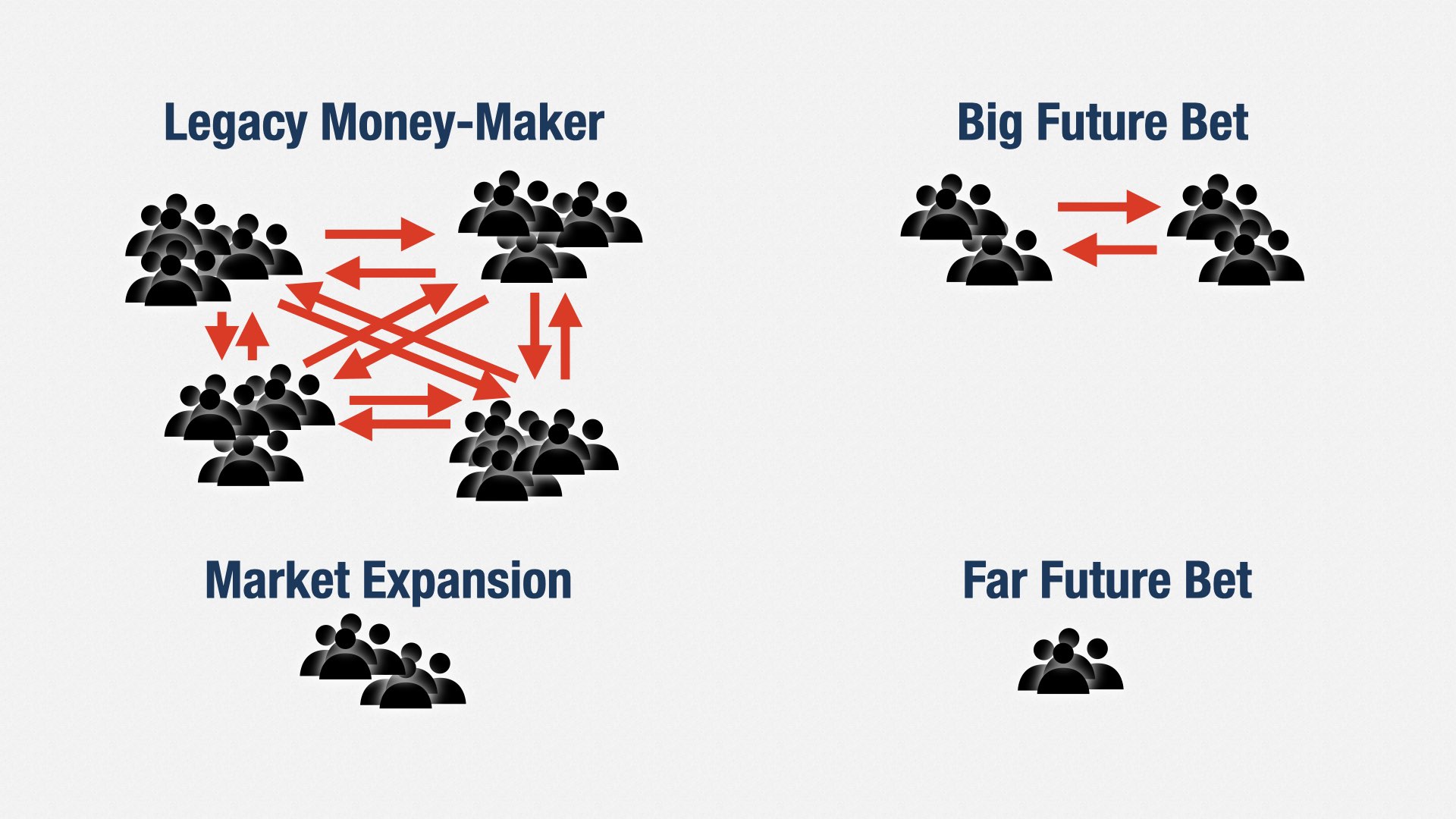

So instead of talking about features, I’ve introduced a way of talking about value. At OpenSesame, we’re calling them “Product Bets.”

Before we go further, a quick disclaimer. The term “bet” is common among startups and other entrepreneurial organizations, so you’ll hear the phrase “product bet” from a lot of different people. Each of us is using it in our own way. So my use of “product bets” isn’t the same as what you might have seen from somewhere else.

Okay, so what do we mean when we say product bet?

Ultimately, it’s a strategic investment in a business result. It’s summarized with a single sentence that has two parts:

First, the business outcome: Strike fear into the hearts of Roman infantry!

Second, the means by which we do so: ...by fielding a battalion of war-capable elephants.

The result always comes first: strike fear. The mechanism comes second: war elephants. And even then, it’s high level. We need a stable, we need animal breeders and trainers, we need to train soldiers, we need a supply line. We need so many things, and not just software. Those are features. We don’t talk about features in our product bet. We keep it high level. Just the headline.

Next, we need a sponsor. Who amongst our leadership team is going advocate for this result? At OpenSesame, it’s usually our Chief Product Officer. But sometimes it’s our Chief Customer Officer, who’s in charge of sales and retention.

For the Carthaginians, of course, the sponsor is General Hannibal.

Next we talk about estimated present value. This is a core innovation. As I said, it’s nearly impossible to measure the impact of any feature, or even set of features. There’s too many confounding factors.

So we don’t measure the impact. We estimate the impact.

My software department takes accountability for delivering estimated value, not measured value.

Now, that’s not to say that we don’t want to validate results. Jeff Patton talks about using Dave McClure’s Pirate Metrics to do so. I welcome and encourage that kind of validation. Ultimately, you have to decide if the bet was successful.

(Spoilers: Hannibal’s bet isn’t going to be as successful as he was hoping.)

But the key idea of these product bets is that you don’t have to measure value. You only have to decide if the bet was successful. If it is, we get credit for the estimated value, not the actual value, which saves us a lot of time and trouble.

Estimating value allows us to be accountable without predicting specific dates and features.

Remember that the head of Sales is accountable for delivering a certain amount of new business every year. Let’s say it’s 10 million dollars. They’re going to deploy a certain number of sales people towards small-to-medium businesses, some towards mid-market, some towards enterprise. They’re going to conduct training and organize incentive programs. They’re going to get everybody fired up about how they need to sell, sell, sell! They’re going to monitor calls, check SalesForce, make sure people are following up.

But they’re not going to say, “Enterprise X is going to sign on date Y.” Because they can’t. The buyer’s going to go on vacation. Legal’s going to demand redlines. A year in advance, nobody knows when the contract will be signed, or if it will even be signed at all. But overall, they’ve got enough going on that they can say, “yes, we’re going to close $10mm in sales this year.”

The same is true of us. A year in advance, we don’t know which bets we’re going to do. We don’t know how much it’s going to cost to build them. We don’t know which ones are going to be successful and which ones are going to fail. But overall, we can say, “Yes, we’re going to deliver bets that are worth $10mm in estimated value this year.”

And that’s accountability.

[beat]

Wait a moment. “We don’t know how much it’s going to cost to build a bet?” How can we decide what to do if we don’t know how much it’s going to cost?

At this point, all we have is a headline. There’s no way for us to know how much it will cost, because we don’t know exactly what we’re going to build.

And if we’re doing Agile right, we will never know exactly what we’re going to build until after it’s done. As you all know, Agile software development is iterative and incremental. It’s a process of discovery.

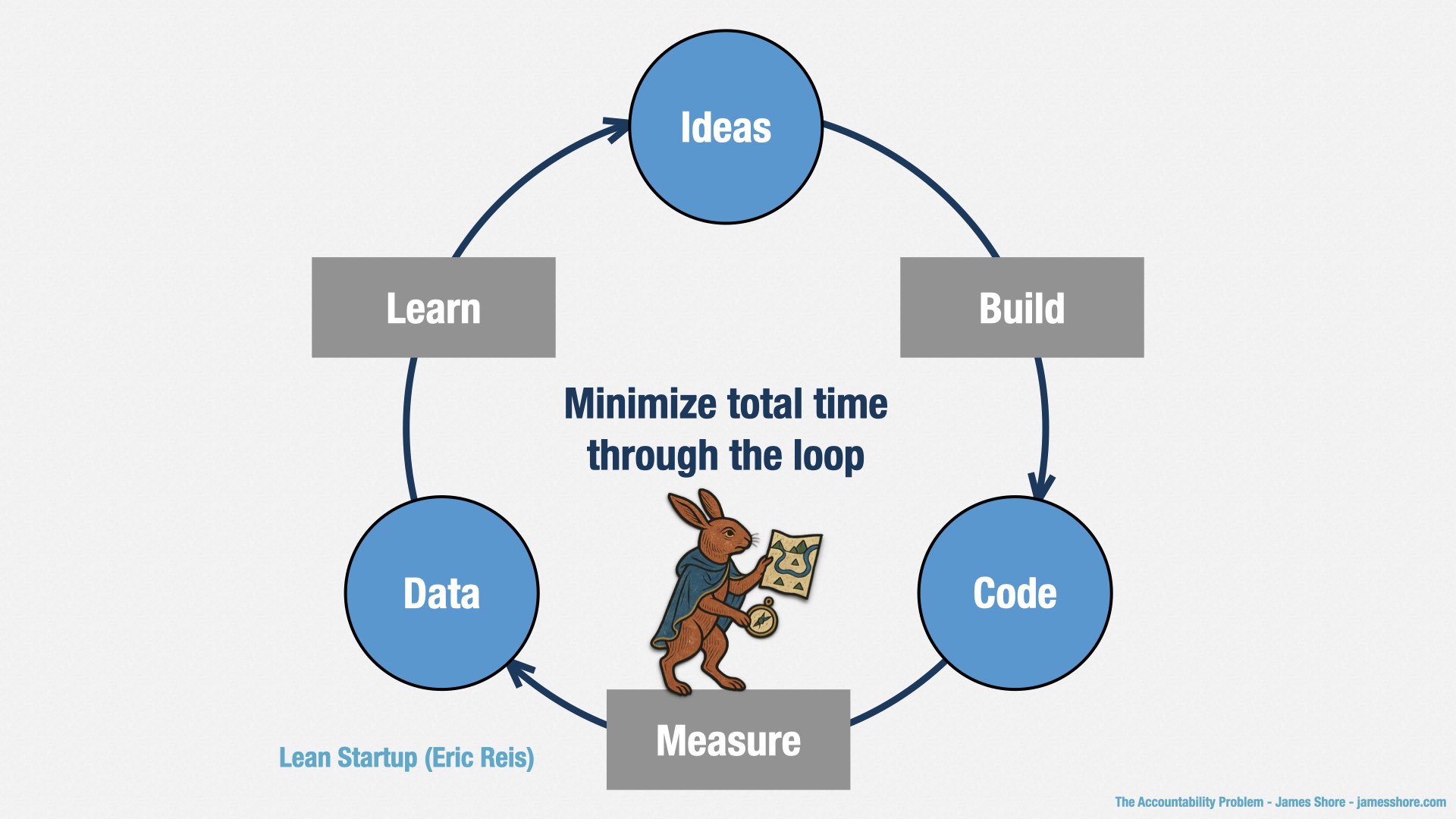

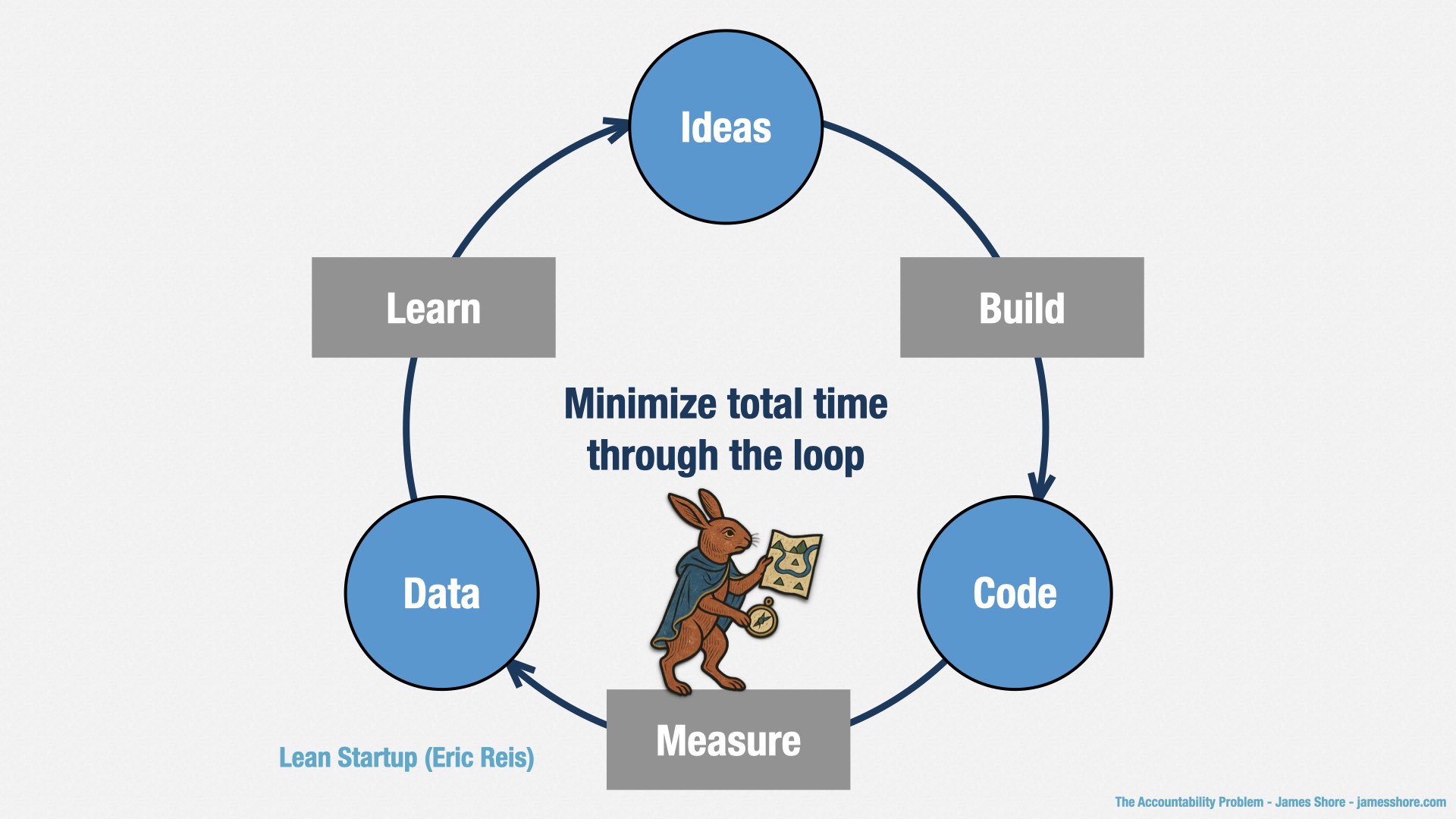

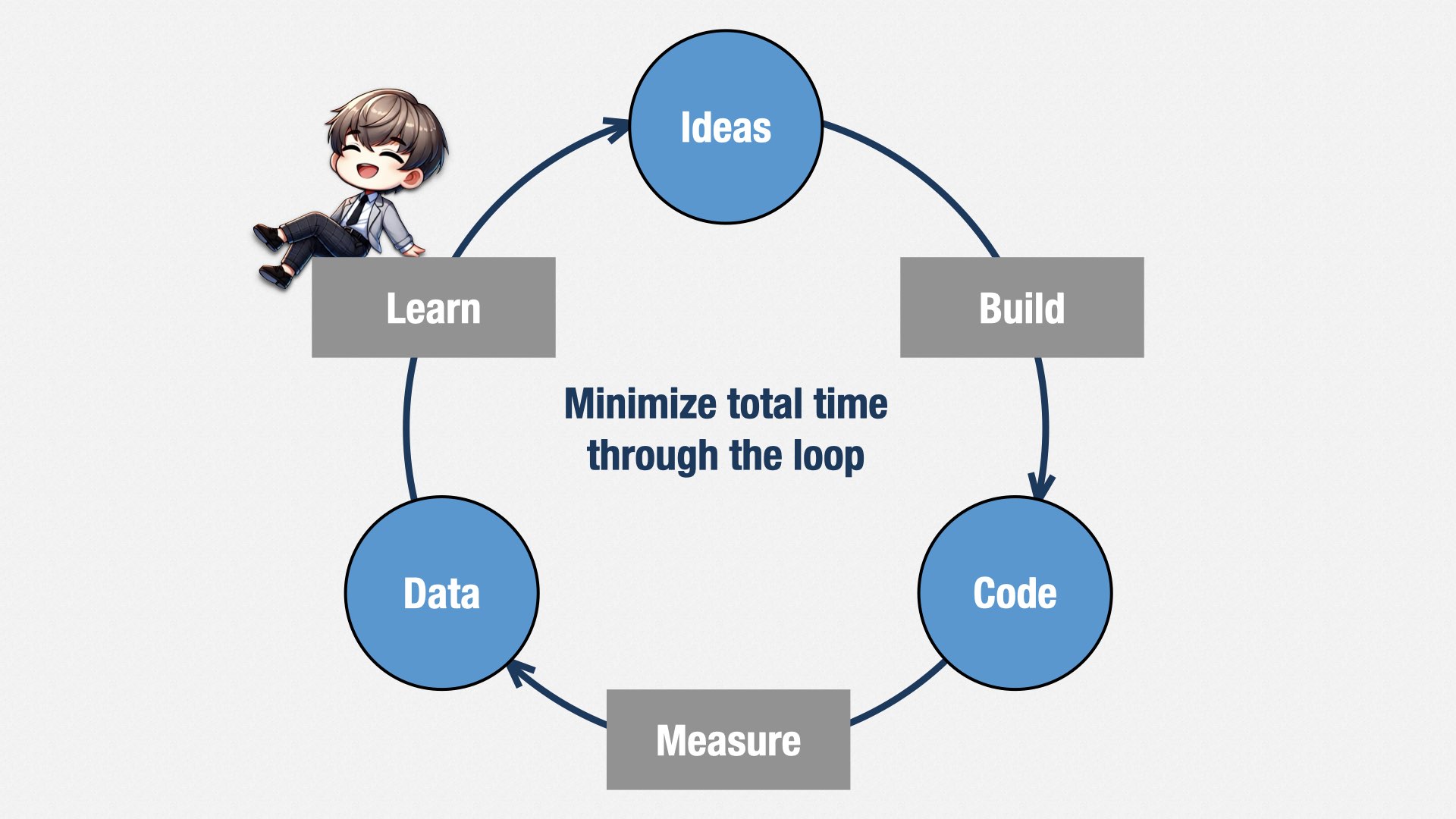

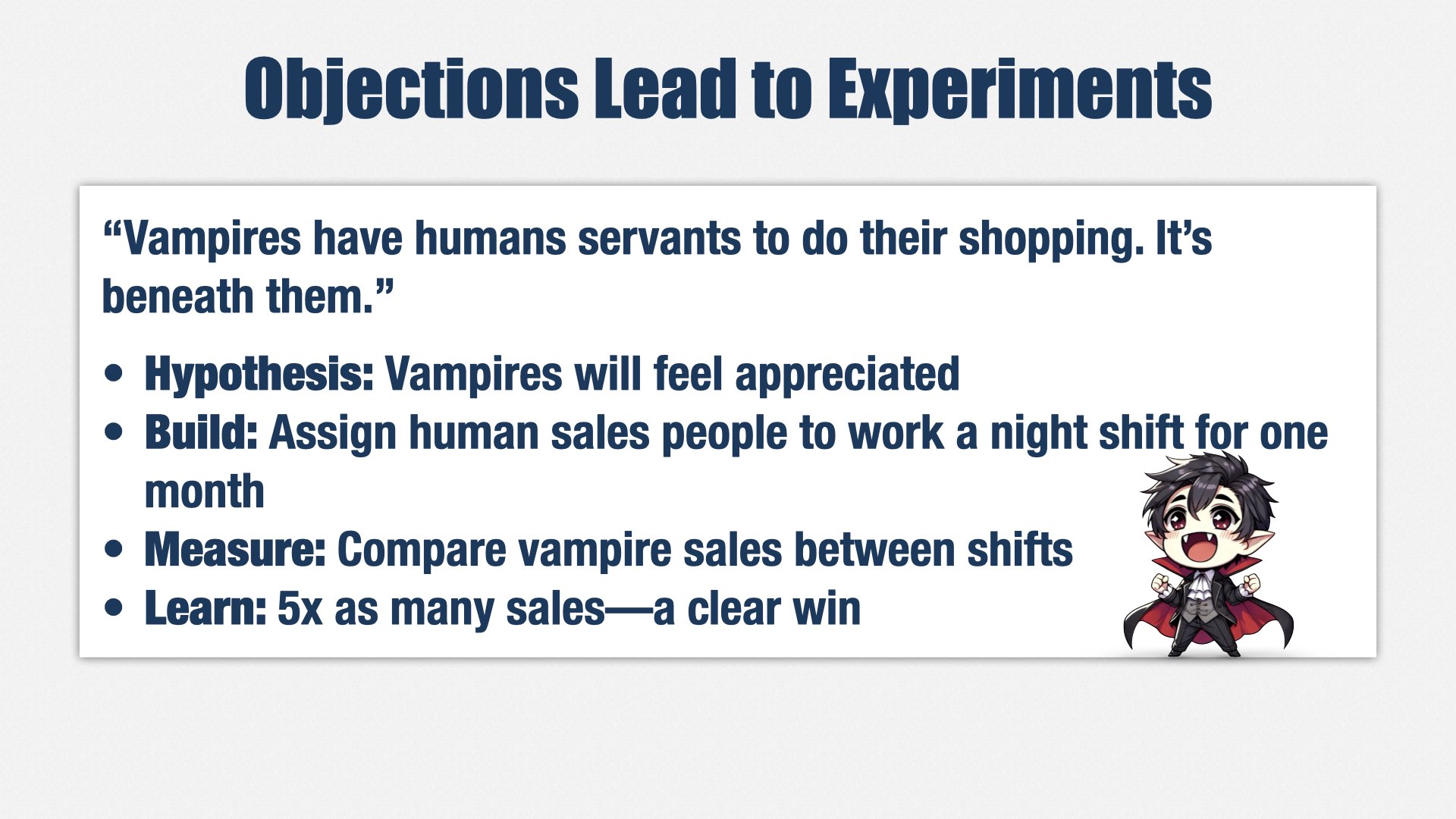

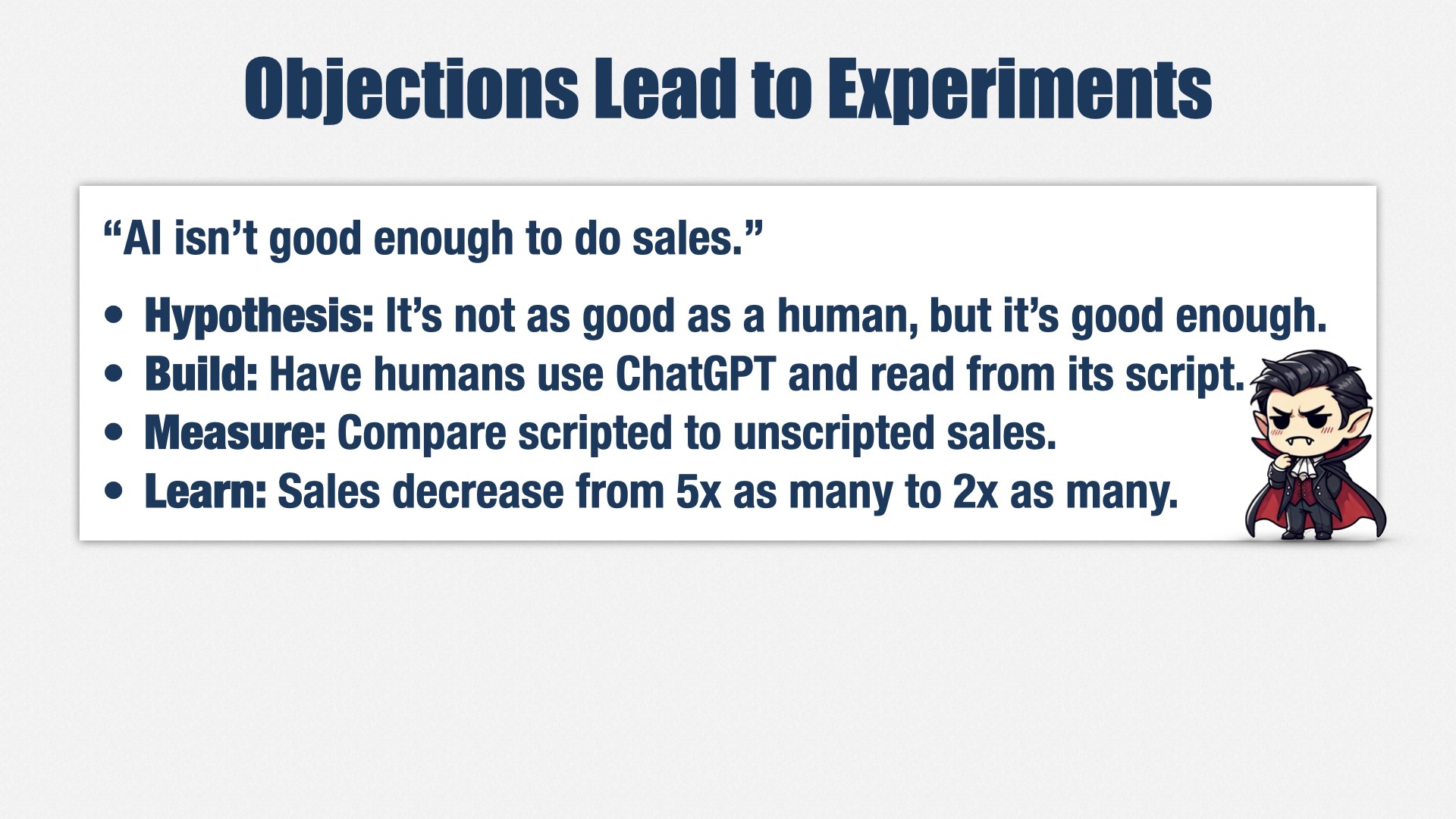

I like Eric Ries’ characterization of this idea: we build, we measure, we learn, over and over again. And we don’t know what we’re going to do here [points at “build” step] until we know what happened here [points at “learn” step]. As long as we’re genuinely learning, we can’t know our costs in advance.

What we can do, though, is put a maximum limit on how much we’ll spend. I call it the “maximum wager,” to continue with the betting theme. We track our spending, and if we’re not successful by the time we hit the limit, the bet has failed. We shut it down and move on to the next one. Or, at the very least, take a hard look at where things are at and decide on a new wager. As long as the total spending is less than the present value, it could still be a good investment.

The amount of the maximum wager is for your leadership team to decide. It’s not an estimate of cost. It’s a gut check about risk and value. The higher the value of the bet, the more you can wager. But you don’t want to wager so much that it would be crippling if the bet failed. Some bets will fail, and you’ll get nothing for your efforts. Success doesn’t mean fielding elephants. Success means winning a war with our elephants, and those Romans can be tricky.

The maximum wager is based on your leadership team’s gut feel of the risk and value involved. It’s not based on how much we think the bet will cost; it’s based on how much we’re willing to lose.

And then we do our best to make sure that potential loss is minimized. We use the "Build, Measure, Learn" loop to validate whether the bet is going to be successful early on. Maybe one loop is focused on taking elephants up into the mountains to see how they handle the harsh conditions, and another loop dedicates a “red team” to see if they can be spooked into fleeing during battle.

It turns out they can. It would be nice to discover that early, not in the middle of battle with the Romans.

Although we in software are accountable for estimated value, not actual value, we only get to take credit for successful bets. It’s in our interest, and everyone’s interest, to weed out the unsuccessful bets early, so we can spend more time focusing on the successful ones. And so, we should design our build-measure-learn loops to test for failure as early as possible.

With value and a maximum cost, we can perform an apples-to-apples comparison between bets and choose the one that seems like the best one to do next. Often, that will be the one with the highest value.

But don’t be fooled by all these numbers! They’re just estimates and guesses. A smart leadership team will go with their gut, not just follow the numbers like robots. The numbers are there to feed a conversation: to get people thinking. They’re not there to substitute for experience and judgment.

The big question: Does this work?

For me, so far, yes. It took me nearly two years to get my leadership team to really engage with this approach, and I needed the strong support of my CEO and CPO to get there. My CEO, in particular, had to get pretty insistent before people would engage.

The fact is, putting together bets, even such high-level ones, takes work. It also makes people accountable, by putting concrete numbers on previously-vague statements about value, and despite everybody’s desire for other people to be accountable, most leadership teams I’ve worked with aren’t really looking to take on more accountability themselves.

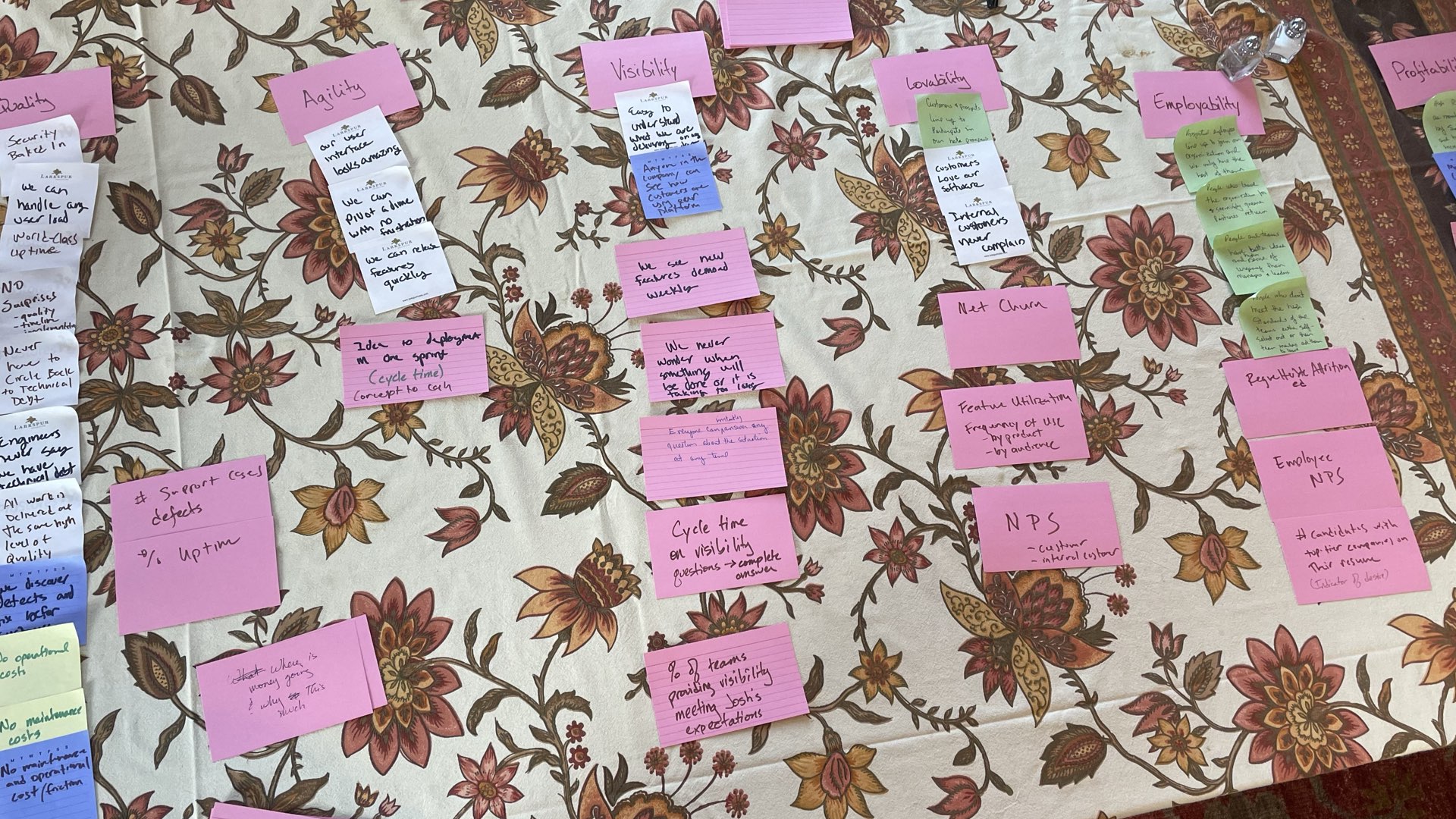

But, thanks to my CPO and CEO’s support, I can say that we are building software using product bets. We identified a handful to take to the leadership team earlier this year. They estimated the value, then chose a specific set of bets for us to pursue based on our capacity. It’s definitely elevated our conversation around product strategy, and I can see it getting even better as we gain familiarity with the approach.

What we haven’t done yet is finish any bets. We just started our first formal bets this year. So I can’t yet tell you how it will turn out.

What I can tell you is that I’m getting a lot less pushback than I used to about features and dates. The conversation is focused on bets, not features and dates, and when we talk about what folks want from Engineering, it’s less about, "tell me when you’re going to be done," and more about how we can take on more bets.

So, even though I haven’t yet used product bets to truly demonstrate accountability, they already seem to be helping.

Does it work? For me, so far, yes.

To summarize, we’re working on demonstrating accountability with product bets.

Specifically, we’re going to commit to delivering a certain amount of estimated value each year.

That estimated value comes from product bets. Each product bet is summarized by headline that focuses on a business result with a high level description of how we’ll achieve that result.

The bets to pursue are decided by the leadership team, and each bet has a leadership sponsor who champions it within that team.

Bets have an estimated value, and we focus on the estimate rather than trying to prove out actual value.

The leadership team also defines a maximum wager for each bet, which is based on a gut feel of risk and benefits, not costs, and together with the present value allows us to perform apples-to-apples comparisons of the bets.

At this point, you might be wondering: where does that "present value" number come from?

The answer, like all things in business, is spreadsheets. Magical spreadsheets filled with arbitrary guesses.

The secret to spreadsheets is that they make our guesses look official. Professional. Good Business-y.

But seriously, yeah, spreadsheets. Let me show you.

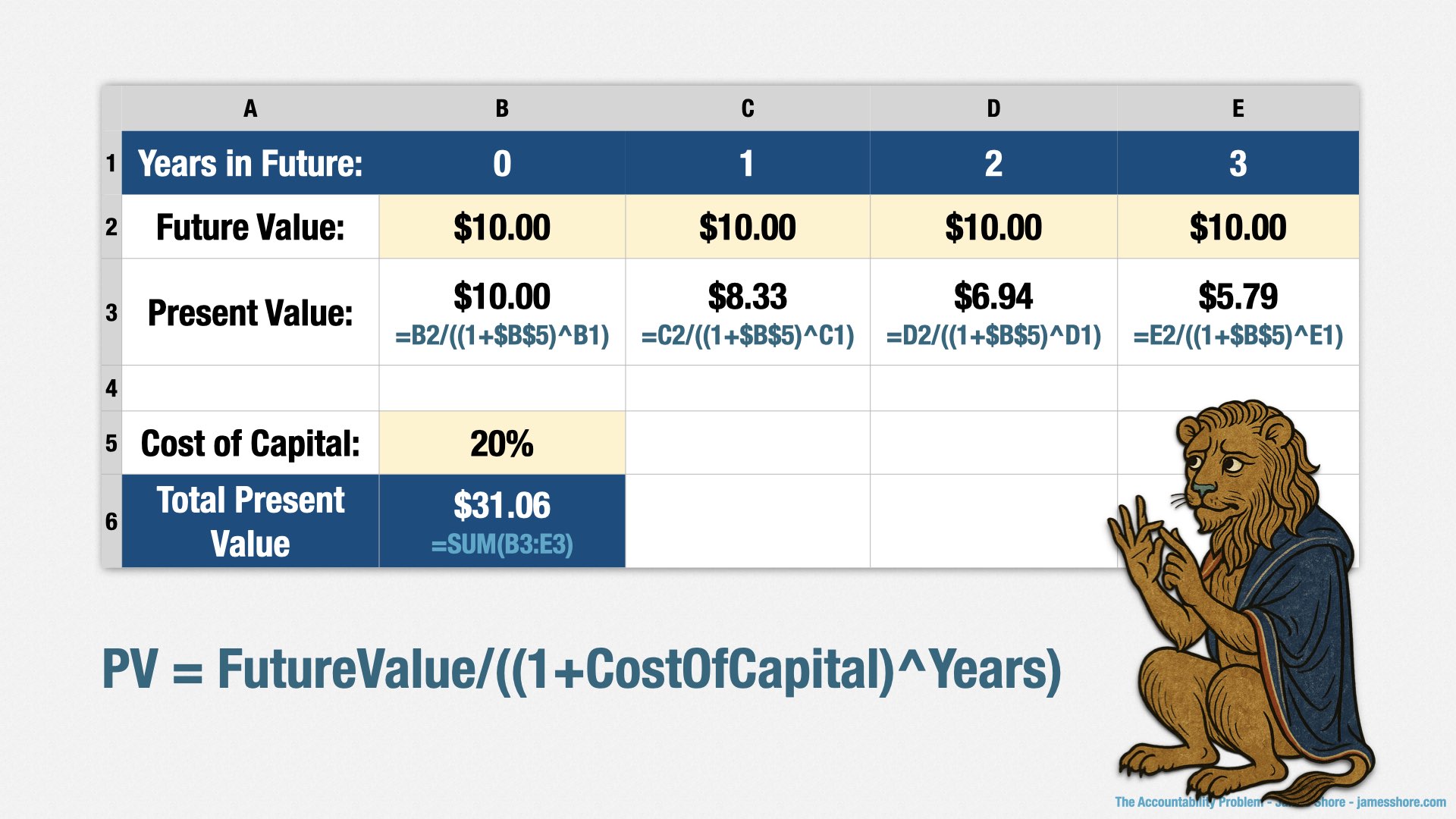

Let me start out by explaining what “present value” is, just in case some of you aren’t familiar with it.

The core idea of “present value” is that money—let’s say $10—is worth more today than it is tomorrow. Today, I can buy a couple of candy bars with $10. In a few decades, I’ll only be able to buy half a candy bar due to inflation.

This is called “the time value of money,” but it’s very simple: money today is worth more than money tomorrow.

What this means is that earning $10 today is better than earning $10 next year, and even better still than earning $10 in two years. If inflation was 20%, $10 in future value next year would be equivalent to $8.33 in present value today. $10 in future value two years from now would be equivalent to $6.94 today. And so forth.

Of course, inflation isn’t 20%, thank goodness. But when your company makes an investment, they expect a certain return on that investment. The return they expect is called “cost of capital.” Your leadership team will tell you the cost of capital to use. It’s based on their judgment of how much they could get from using the money on other investments along with an adjustment for risk. For these examples, I’m arbitrarily choosing a 20% cost of capital.

The neat thing about cost of capital is that you can wager your entire present value and still get a good return on investment. As long as the bet is successful, even if you spend all of the present value, you’re still making money.

If you ask me for an investment and promise to return $10 to me today, $10 next year, and so on for the next three years, you’ll return $40 total. If my cost of capital is 20%, then I can look at the present value of each of those returns. It’s $10 today, $8.33 next year, $6.94 the following year, and so forth. Adding up those future returns gives me the total present value, which is $31.06, which means that I can invest up to $31.06 and still get at least a 20% return on my investment.

Okay, so that’s what present value is. Now, how do we determine what numbers to use?

As I said before—spreadsheets and guesses. You build a financial model that makes guesses about the future.

I’m going to share the model I used, but I have to be honest: I had a lot of trouble getting my leadership team to engage with product bets at first. In order to get this off the ground, I had to provide the financial model myself... and honestly, I think it could be a lot better.

We have a new CFO at OpenSesame, so I showed him about the model I’m about to show you. He said—this is a direct quote—“it’s an okay framework to start.” He also said, “come talk to me early when you start on the next set of bets.”

So, yeah. Thank you for coming to my okay talk. I’m sure it will be better next year.

In all seriousness, our CFO liked the general idea of product bets, and the categories I was using. He just thinks he can make the specifics more rigorous, which is great, and I’m looking forward to his help.

The fact is, it doesn’t really matter if the model is accurate or not. The important thing is to get people to engage with value rather than cost and dates being the primary driver of decision-making. You can use a rough, back-of-the-envelope model to get started. That’s what I did. As long as you’re consistent with your approach across bets, it’s still useful.

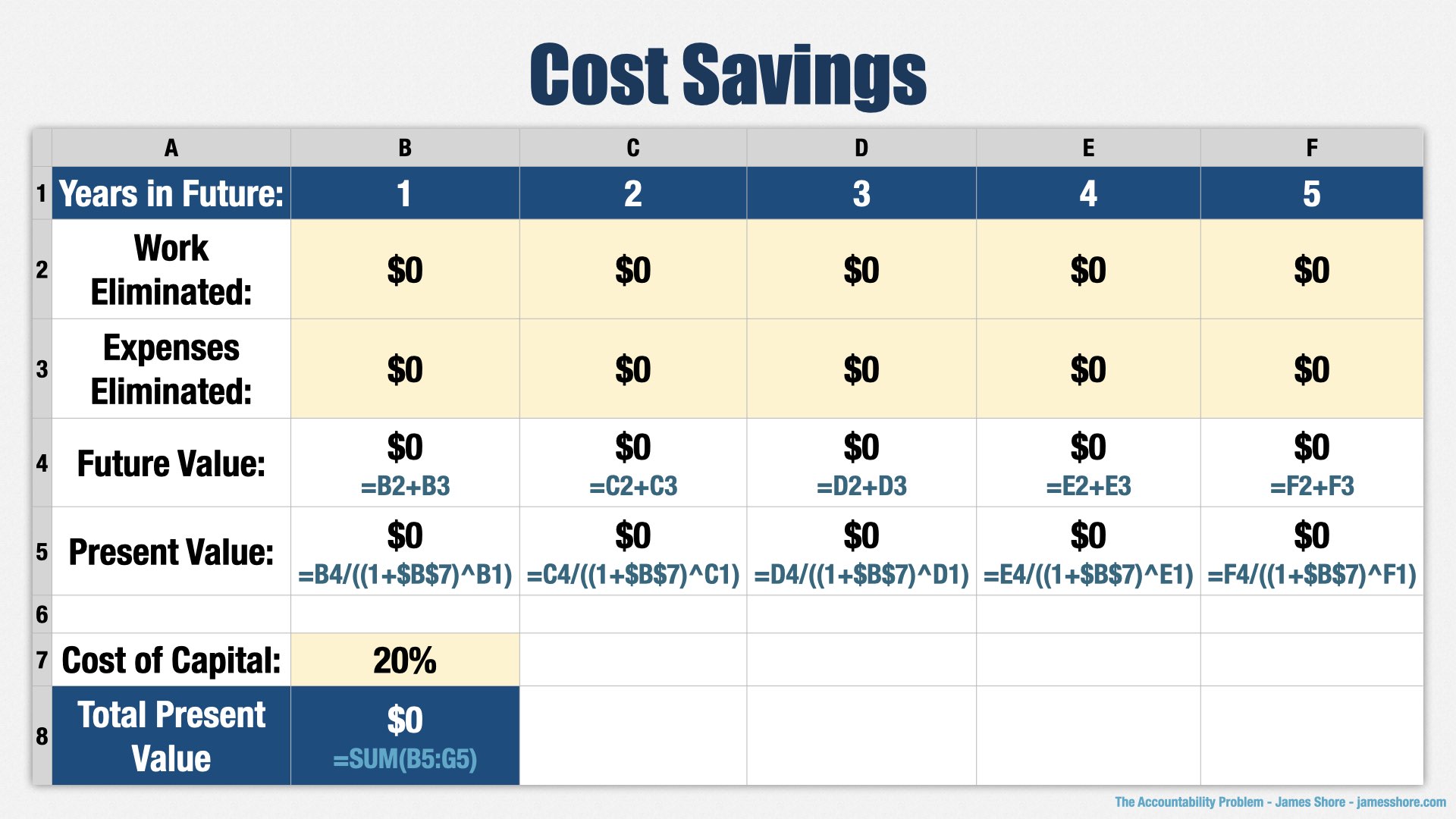

With that said, our product bets are broken down into five sections. Each one has its own little present value calculation.

There’s Sales, which represents the money we make from new customers as a result of the bet.

Upsell, which is the money we make from existing customers as a result of the bet.

Retention, which has to do with the fact that we sell subscriptions. Once we make a sale, we keep making money from that customer every year, so long as we can retain them. This is typical in the modern software-as-a-service world. So retention is a very important number.

Cost savings is reduction in spending, which counts as value, because spending $5 less on candy each year means I have $5 more in my pocket.

And then expenditures, which is additional spending we’ll incur as a consequence of the bet. For example, maybe I spend $5 less on candy each year, but I have to spend $1 every year on a budget tracking app that reminds me not to waste money on candy.

To illustrate these ideas, let me introduce you to my new employer: War Elephants as a Service.

We’re your one stop shop for all elephant-related warfare. We take care of the elephants, so you can take care of the invasion! Look at our glowing testimonials from top customers: Carthage... and Rome! Business is good. Or at least, it was. There’s not much demand for war elephants these days.

PS: Apologies for the mutant two-trunked elephant in the logo. Our ex-CEO tried to solve our financial problems with cost-cutting, so he replaced all of our graphic designers with AI. His last words as he was escorted out the building were, “I’ve made a terrible mistake.”

But we have new CEO now! Babar is our new “Chief Elephant Officer,” and he has an idea for keeping our business relevant in today’s fast-paced world. Since nobody seems to want war elephants any more, we’re going to switch from “war elephants” to “more elephants!” Elephant parades! Elephant-themed merchandise! And especially, cute baby elephants! Nothing says “more elephants” like an adorable fuzzy pachyderm.

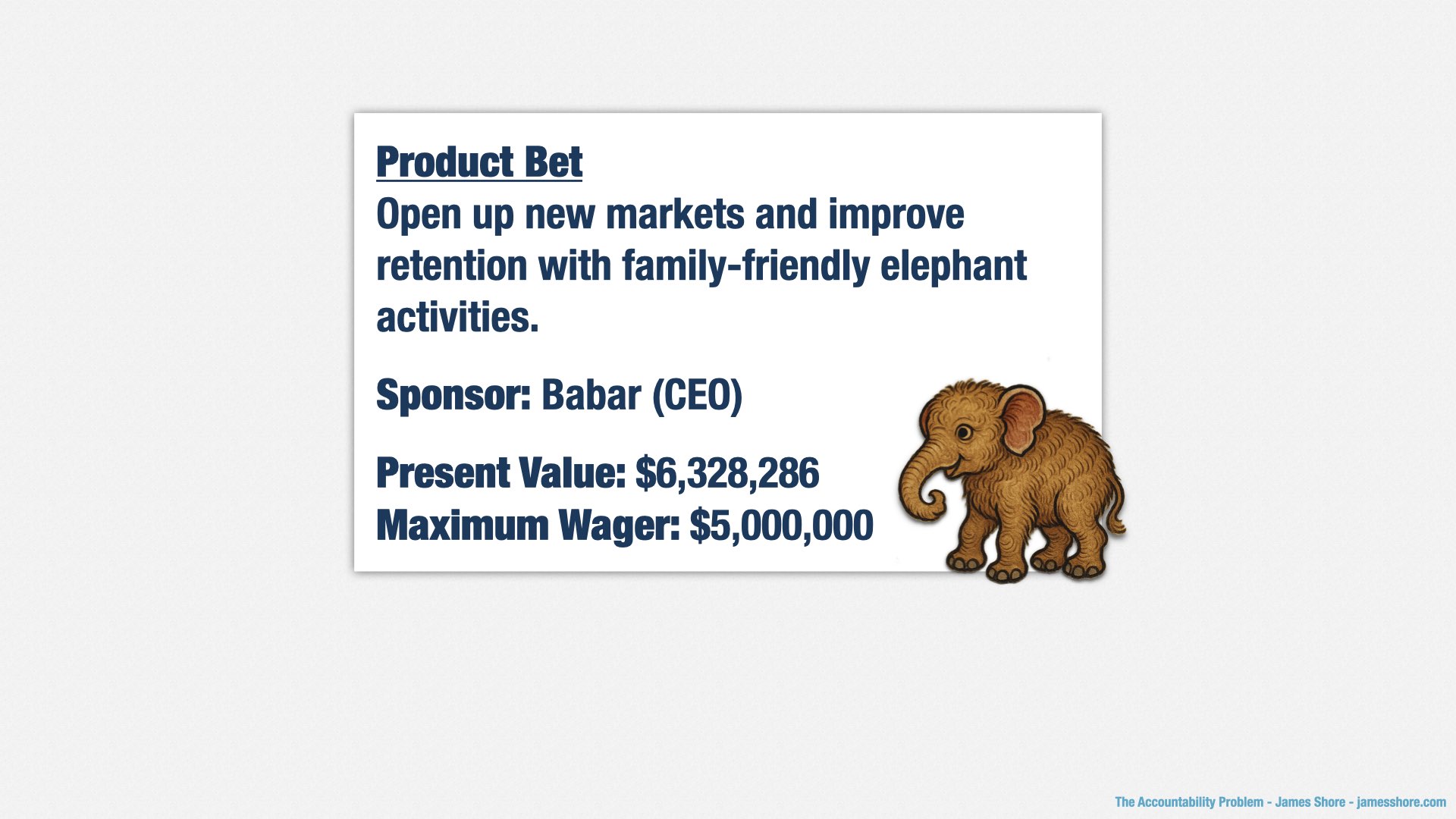

Specifically, we’re going to open up new markets and improve retention by introducing family-friendly elephant activities. That’s our bet.

To quantify this bet, we’re going to look at the five categories I mentioned before: Sales to new customers, upsells to existing customers, retention, cost savings, and expenditures.

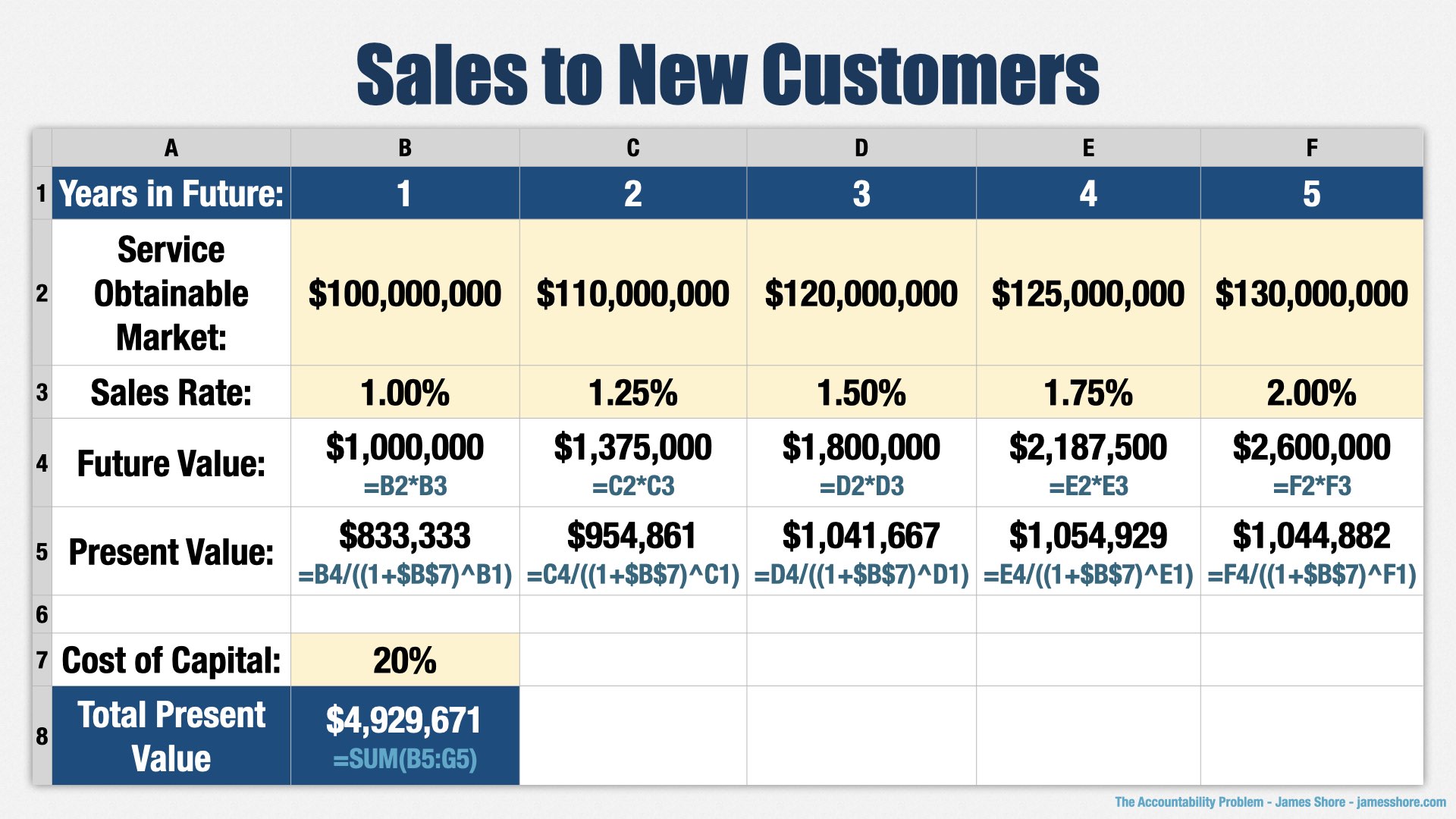

[points to “Service Obtainable Market” row] For new sales, we’re going to look at the “service obtainable market,” which is the total size of the market that we can reach for family-friendly elephant activities. Let’s say it’s 100 million dollars at the end of the first year, and grows over time as word gets out.

[points to “Sales Rate” row] Next, we’re going to estimate how much of that market we can capture. We face competition from zoos, but nobody has quite the expertise deploying large numbers of elephants that we do, so we’re going to say we can sell into 1% of the market, and that will also grow over time.

[points to “Future Value” row] Multiplying the service obtainable market by our sales rate of 1% gives us the amount we expect to make each year in future dollars. [points to “Present Value” row] Then we apply our present value formula at a 20% cost of capital and [points to “Total Present Value”] add it all up to get a total present value of nearly $5 million from new sales.

It all looks very official, doesn’t it? But how do we know it’s a 100 million dollar market? How do we know we can sell into 1% of it?

We don’t! It’s guesses. Educated guesses, maybe, but ultimately... guesses. That’s how these things work, and that’s why you need your leadership team to get involved. You can make your models more and more rigorous, but at the end of the day, somebody’s making their best guess, and those guesses should be overseen by the people in charge of those departments.

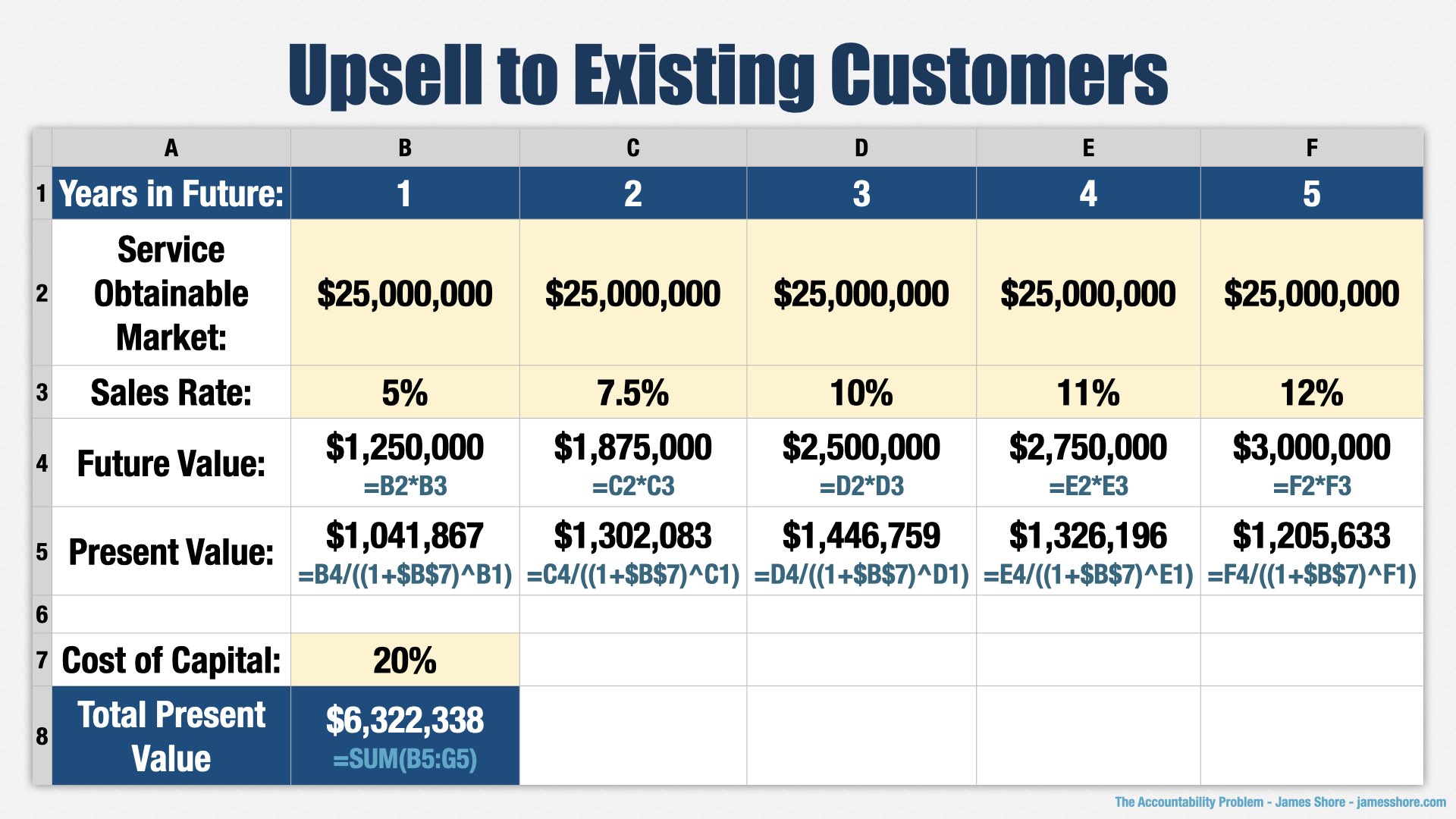

Next, we look at upsell. How many of our existing customers can we convince to try our new family-friendly elephant activities?

[points to “Service Obtainable Market” row] As before, we start with the total market that we can reasonably reach. This is the amount we think that our existing customers would be willing to spend on our new offering. In our case, it turns out our customers aren’t actually using their war elephants for war, but for things like parades. We think there’s a good $25 million to be made from our existing customers, and we don’t expect that to change much over time. To be clear, that’s not what we make from our existing customers, it’s the extra amount we think they’d pay for our new service.

[points to “Sales Rate” row] Then we look at our sales rate for that market. Given that our customers are already using their elephants for parades, we think they’re going to be pretty receptive to us providing services to support them. We estimate that we’ll be able to convert 5% of the upsell market, and that number will also grow over time.

[points to “Total Present Value”] Multiply the numbers, apply present value formula, and we have the total upsell value of $6.3mm.

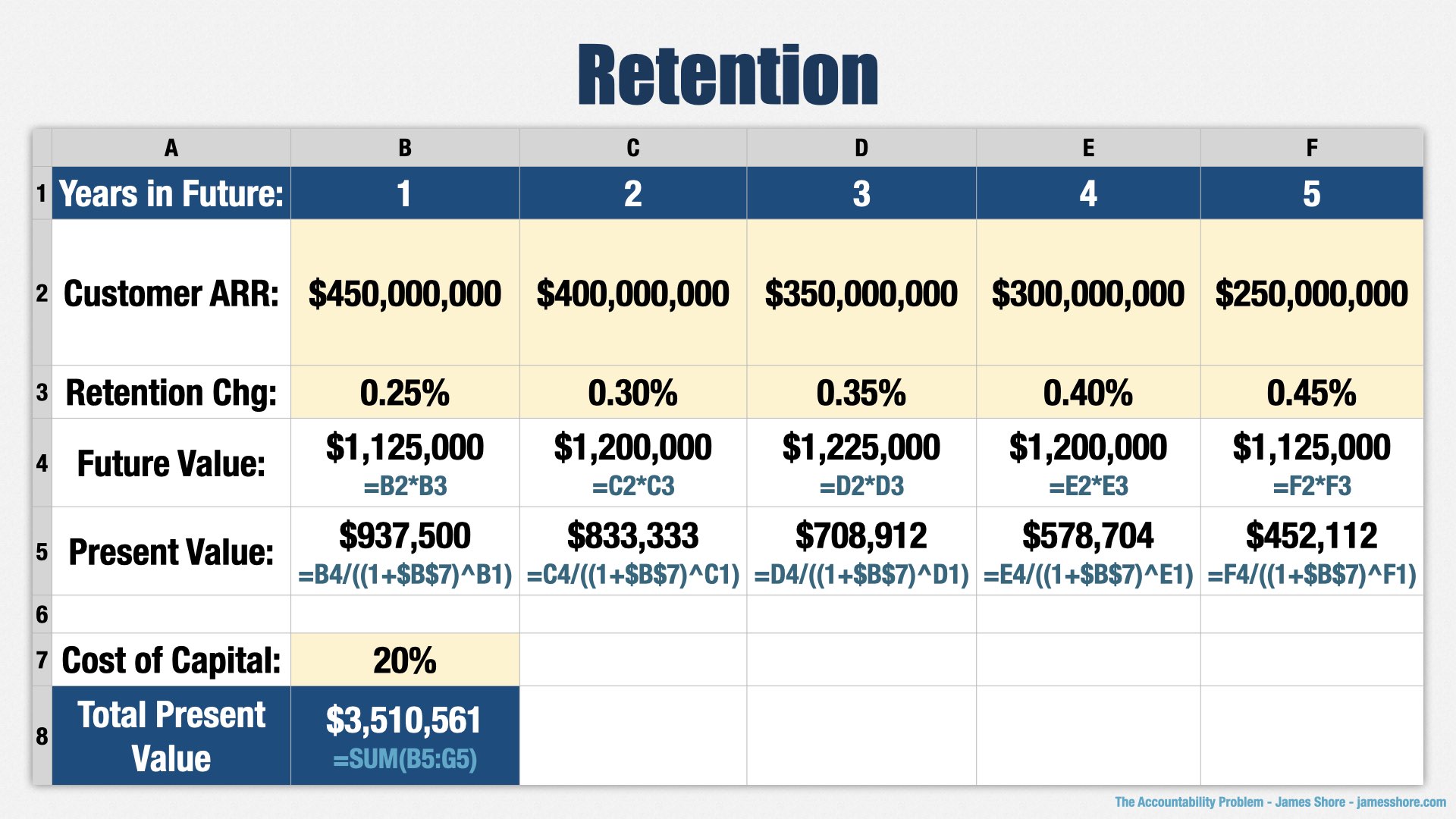

Now let’s talk about retention. Our retention numbers have been pretty bad—as I said, countries don’t really need war elephants any more. [points to “Service Obtainable Market” row] But we still have a hundreds of millions of recurring revenue, even though it’s going down each year. That’s the ARR line—annual recurring revenue.

[points to “Retention Change” row] By pivoting from a focus on war to a focus on the military parades our clients are actually using elephants for, we think we can stem the bleeding a bit. Not much... about a quarter of a percent each year, going up slightly over time.

[points to “Total Present Value”] Multiply, present value, and there you have it. Three and a half million.

What about cost savings? [points to “Work Eliminated” row] Is this bet going to eliminate any of the existing work our employees do? Not really. [points to “Expenses Eliminated” row] Is it going to eliminate any expensive software subscriptions or other expenses? No, probably not.

[points to “Total Present Value”] Normally, we’d add up the cost savings and apply the present value calculation, but the numbers total out to zero in this case.

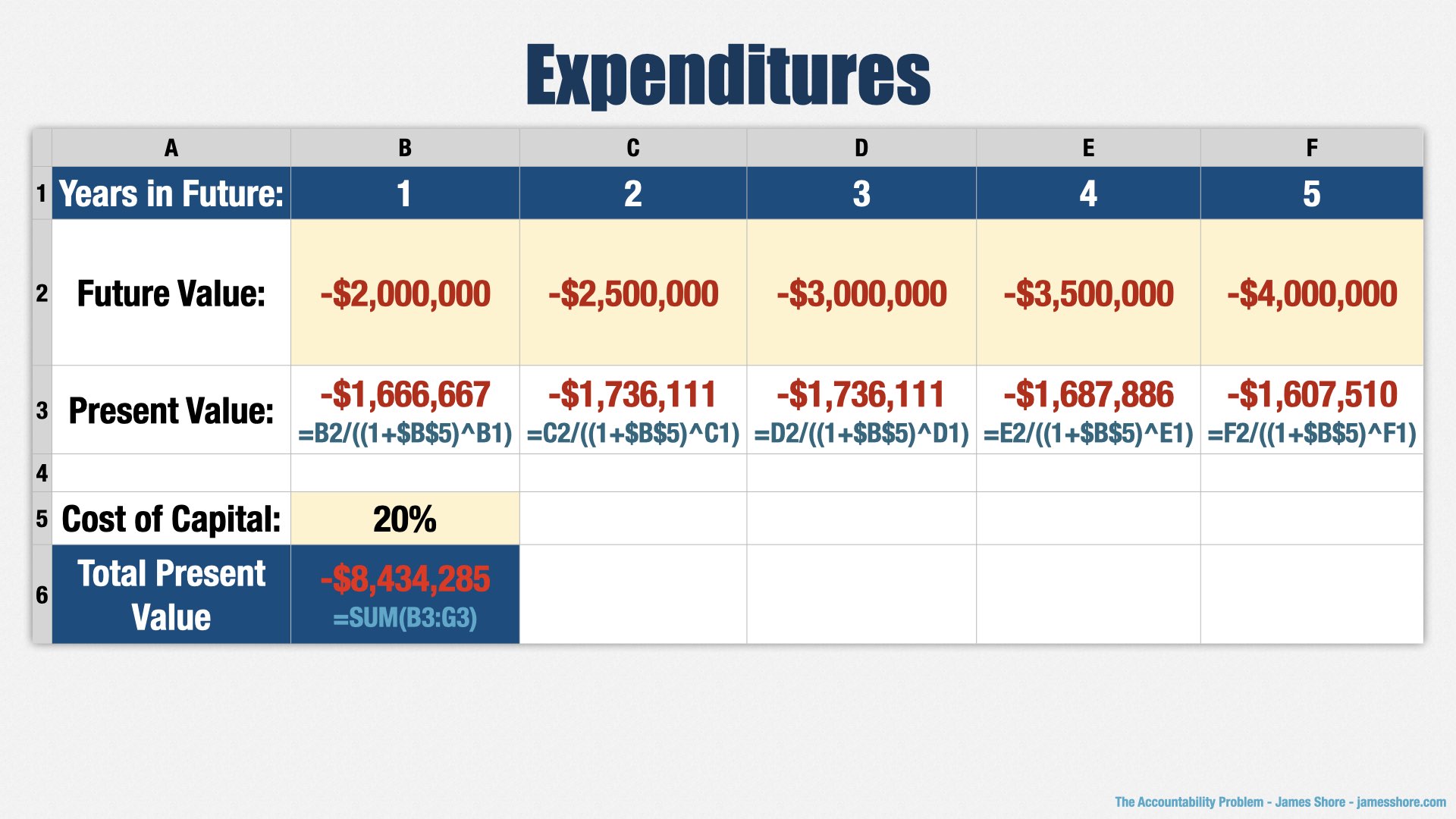

And finally, expenditures. How much more are we going to spend as a result of this bet?

Well, there’s the cost of developing the bet itself, which is our wager, but we’ll bring that in later. In this section, we’re looking at the ongoing costs of running the program. [points to “Future Value” row] I’m going to hand-wave that a bit—you might have multiple line items here normally—but let’s just say it’s $2mm per year, going up as the program becomes more popular. Elephants aren’t cheap.

[points to “Total Present Value”] Present value, etc., gives us a total of $8.5mm in expenditures.

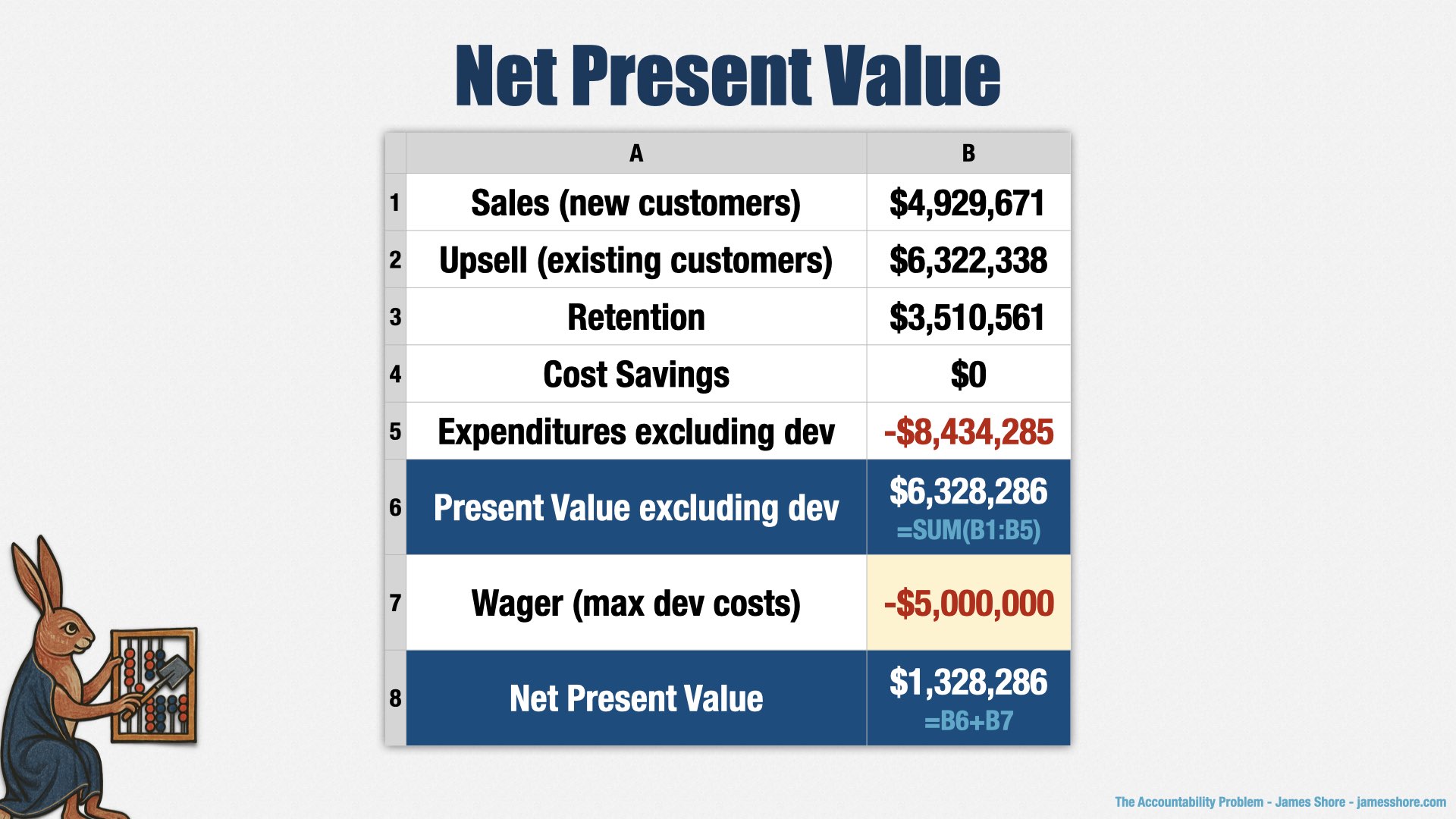

Bringing it all together, we have $5mm in new sales, $6.3mm in upsell, $3.5mm in improved retention, $0 in cost savings, and $8.5mm in expenditures. That comes to a total present value of $6.3mm before our development costs.

Now, how much do we want to wager on development? The leadership team thinks this is a slam dunk, and a way to save the business, so they’re going to wager nearly all of the value. Five million dollars. Remember, using cost of capital to determine present value means that we could wager the entire present value and still come out ahead... if the bet is successful.

That said, bets still have a risk of failure. Our leadership team is making some assumptions about how much people will be excited about baby elephants, so we’ll want to work incrementally and iteratively to test their assumptions early.

To summarize, the present value of the bet is based on sales to new customers, upsell to existing customers, change in retention, cost savings, and non-development expenditures related to those benefits.

And that’s how we come up with the numbers in the product bet. To bring it back around, we’re betting that we can open up new markets and improve retention with family-friendly elephant activities. Babar is the sponsor for this bet and he thinks it’s worth $6mm in present value, and he’s willing to spend up to $5mm to try to make it work.

To calculate the value of those categories, we took a back-of-the-napkin approach where we estimated the size of the market and our ability to sell into that market. There’s certainly room for more rigor, and I encourage you to talk to your finance team about how to improve the model.

But do remember that it’s all still guesses at the end of the day. It’s better to have some model than a perfect model. The real benefit is in shifting the conversation from features and dates to about being accountable for value.

We may be a foreign country, but we can still speak our business partners’ language.

[beat]

But how do we get them to talk to us?

A leader I respect once told me, “You have 18-24 months after becoming VP of Engineering to make a difference. After that, the organization’s problems become your problems.”

I think he was right on target. As a leader, your colleagues in other departments will reserve judgement for the first six months or so. They’ll get impatient over the course of the next year. By the end of two years, they’ll be holding you accountable. If you don’t define what that looks like, they’ll define it for you, and they’re going to default to features and dates.

The problem with product bets, as an idea, is that they require leadership participation. You can’t create these spreadsheets on your own. Even if you did, nobody’s going to pay attention if you don’t have their buy-in. I’ve tried variants of the product bet idea many times over the years and getting that participation has been extraordinarily difficult. I’m a little surprised we’re able to do it at OpenSesame, to be honest.

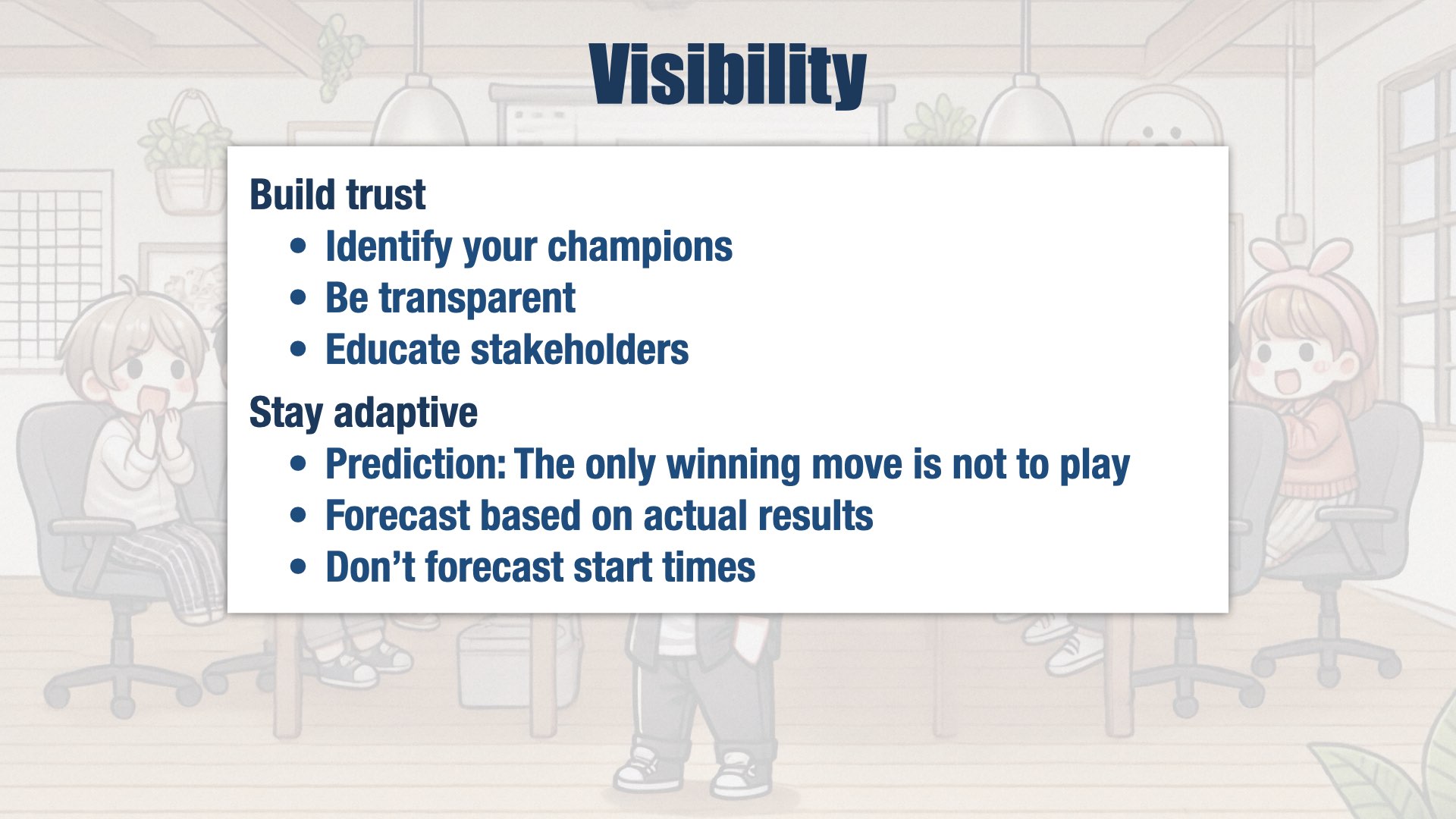

Before you can get people to buy in to your definition of accountability, you need them to trust you. And in order for them to trust you, you need to be accountable.

I’m not sure how to solve this chicken-and-egg problem for your organization. I can tell you how I solved it for mine. Any change you introduce has to be in the context of your specific situation, so I’m not saying that you should do it my way. Some of my changes were pretty radical, and they’re not going to be a good idea for every situation.

We don’t have time to go into every detail, so this is going to be more of an overview than a how-to guide. I’ll provide resources for further investigation.

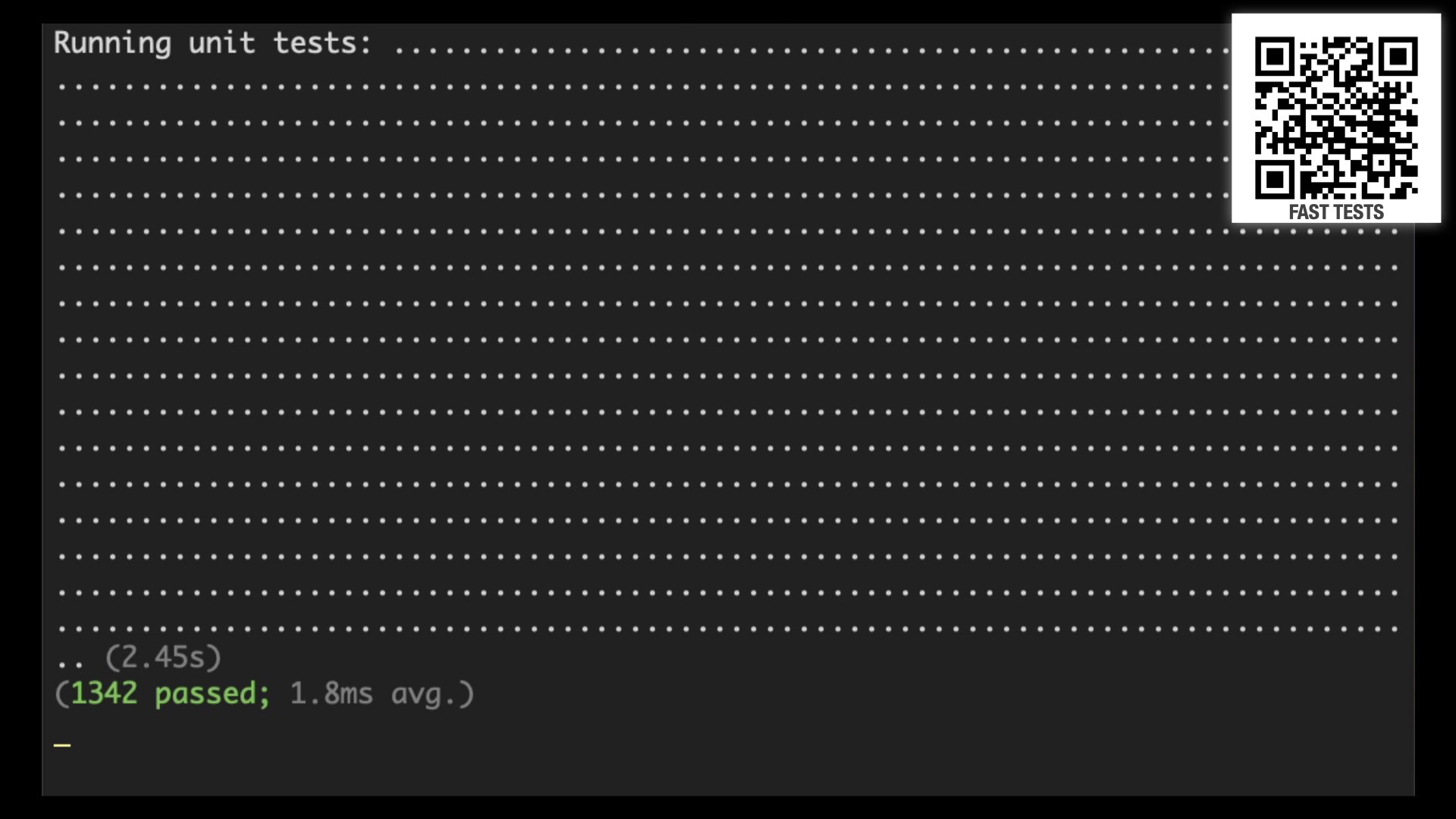

QR Code: FaST: An Innovative Way to Scale

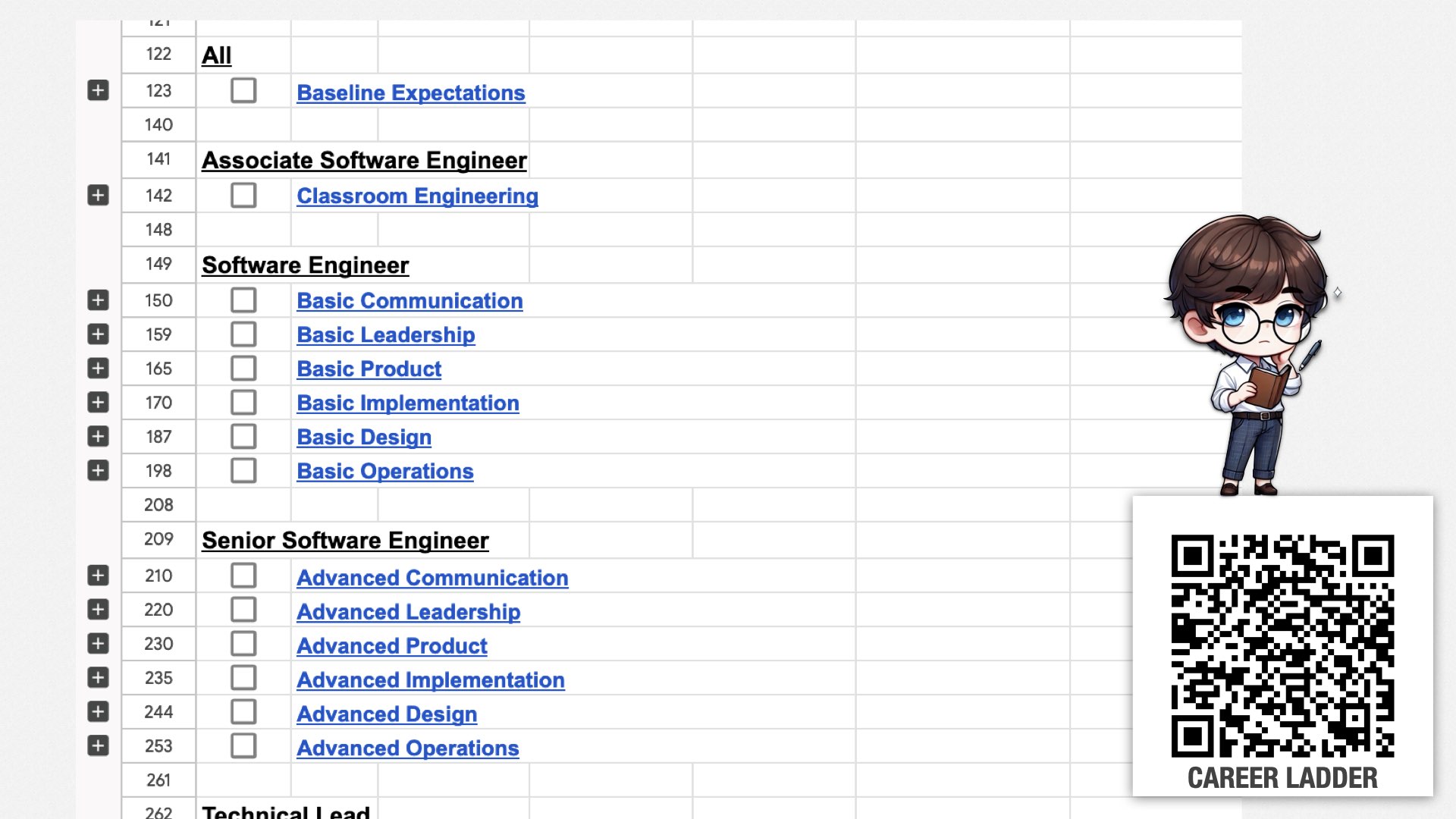

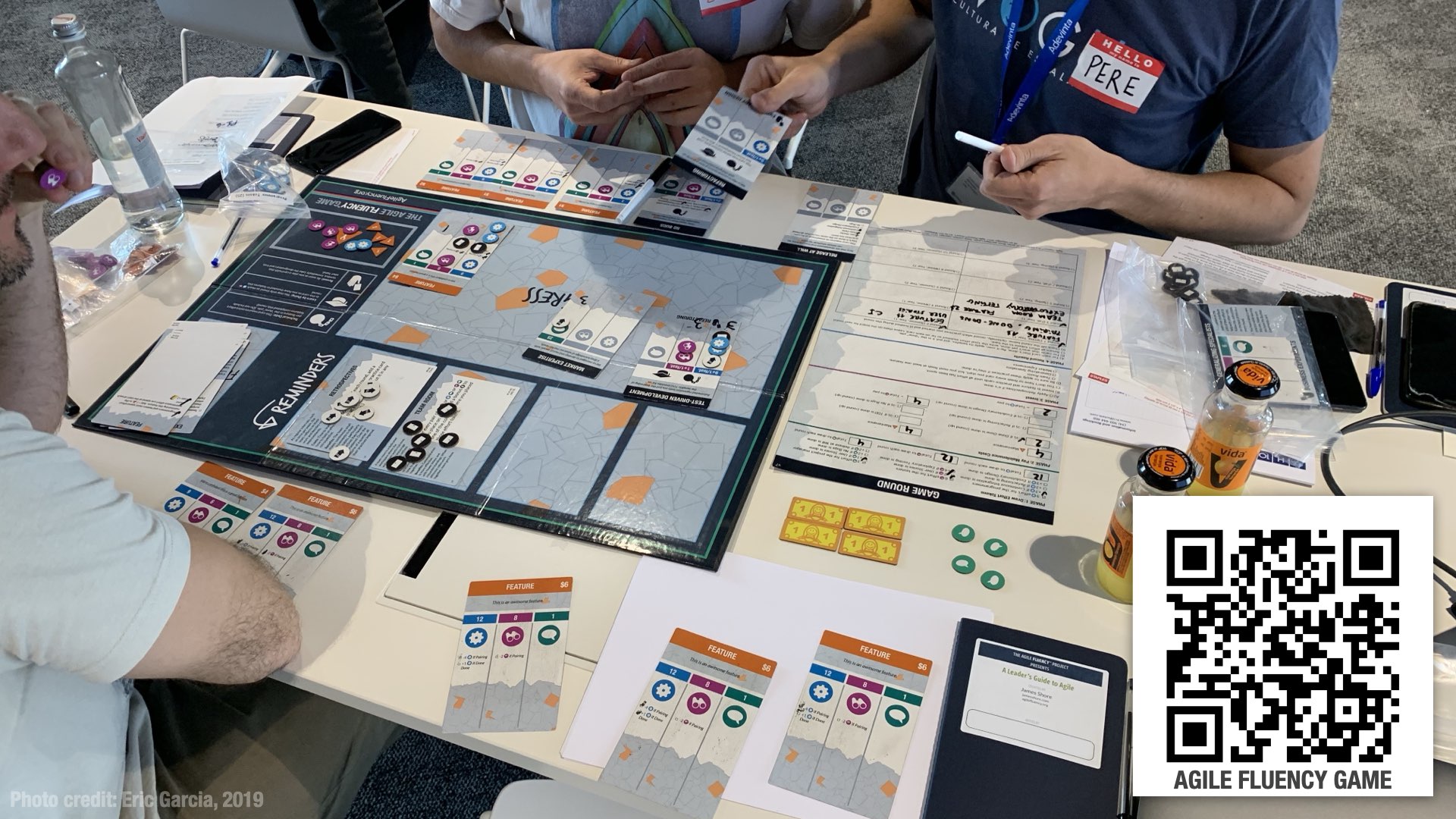

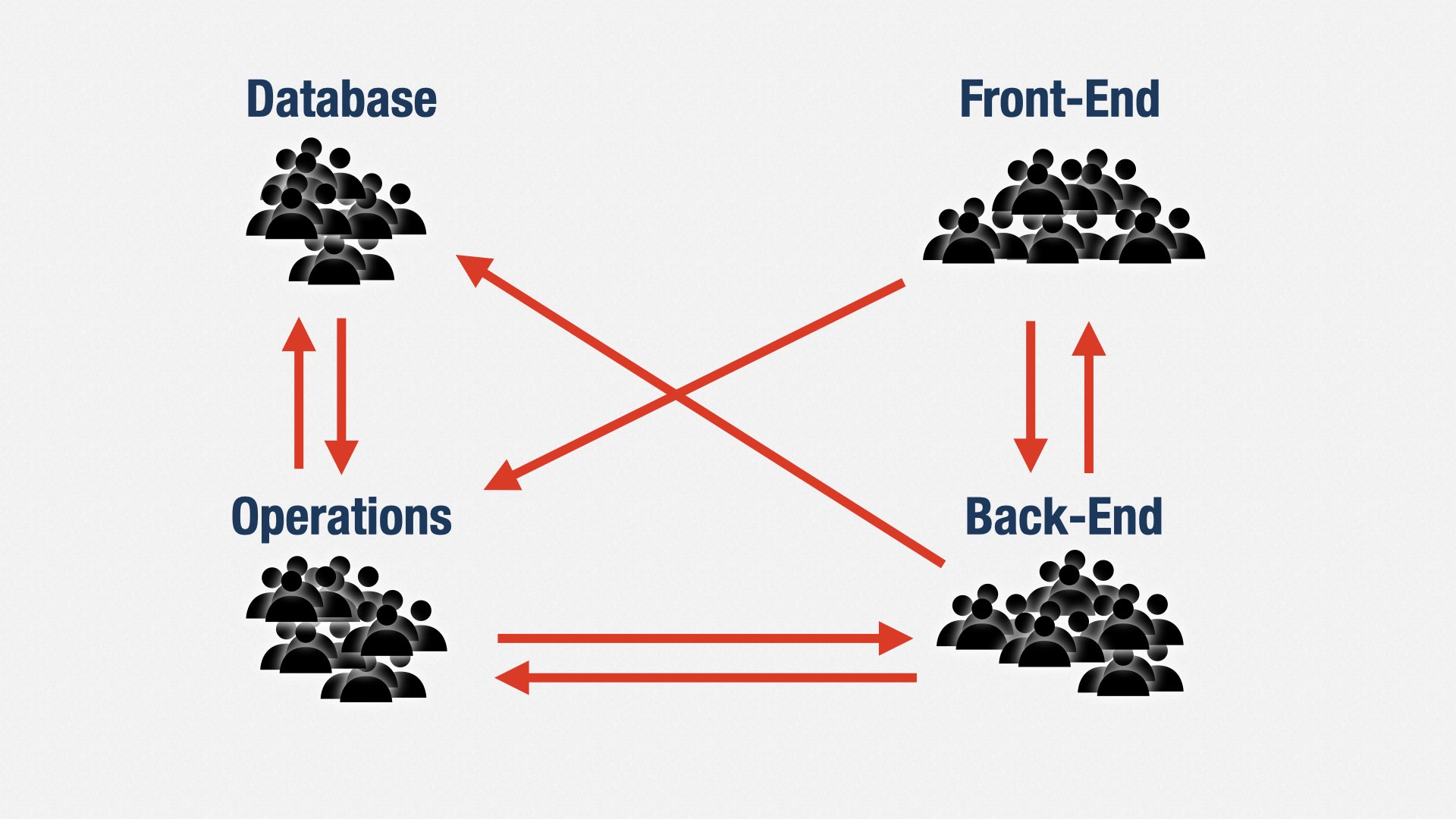

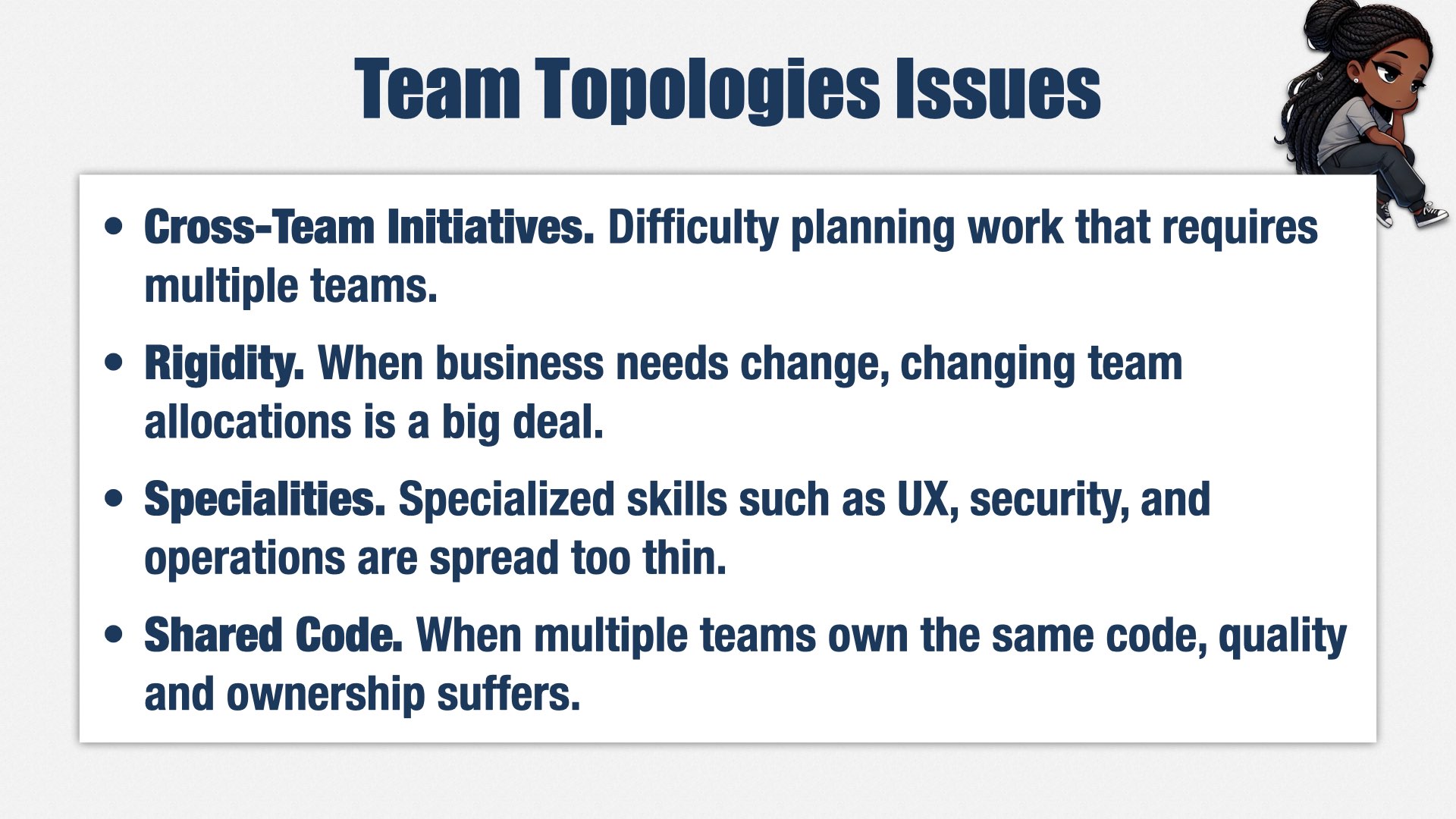

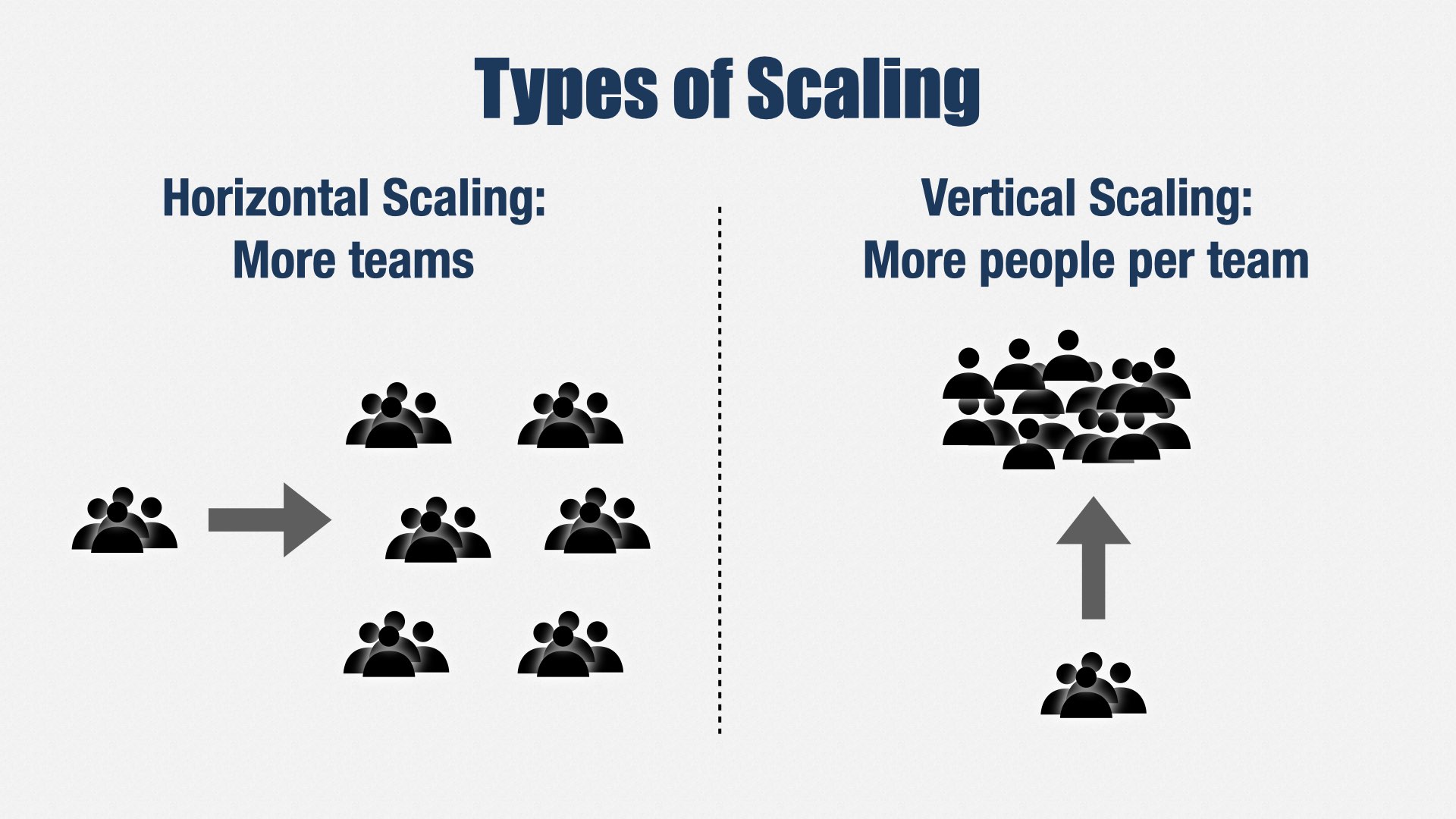

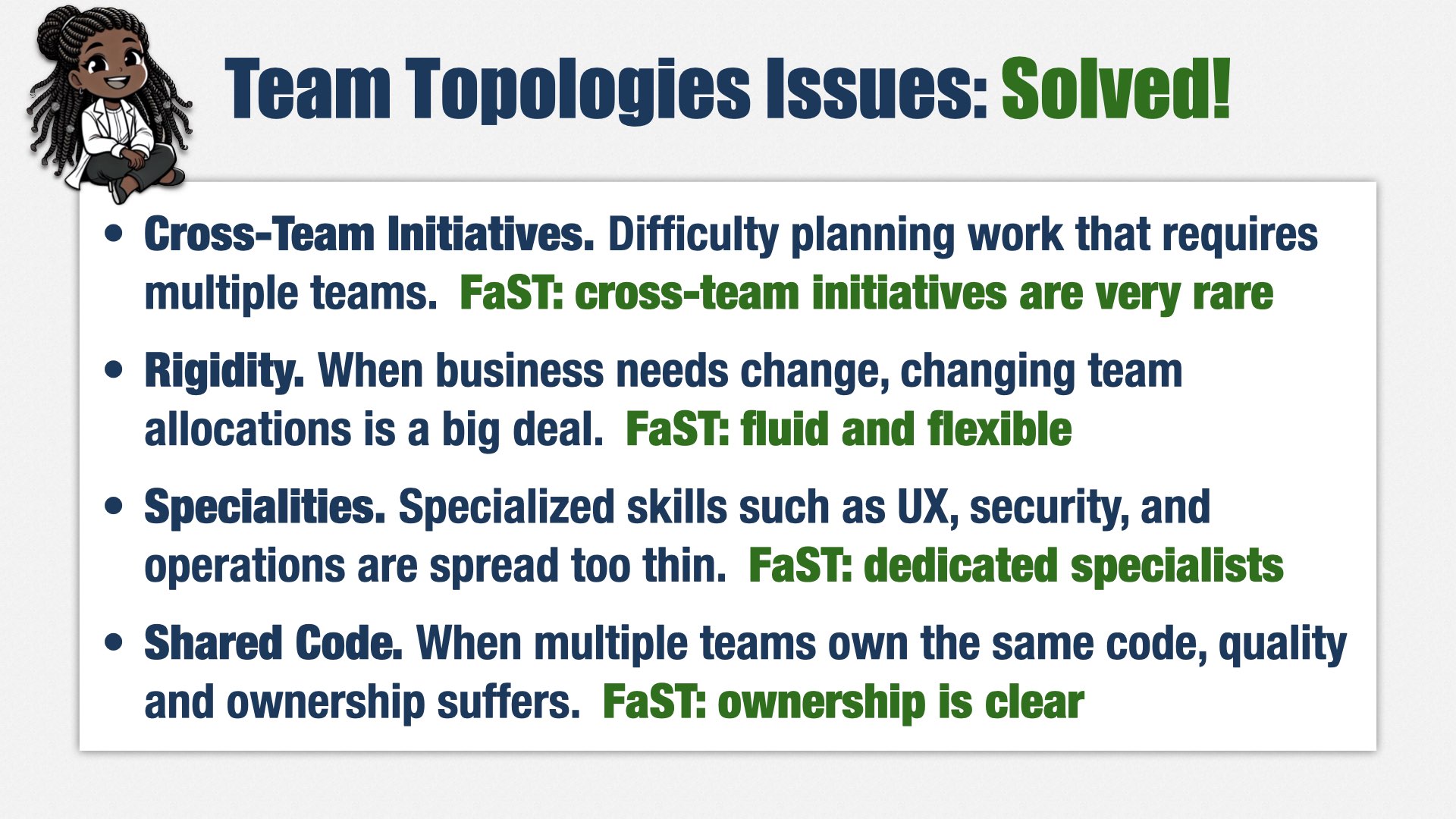

When I joined OpenSesame, I started by getting the lay of the land and deciding what to do. One of the things I saw was that the teams were heavily siloed by technology area, rather than by product line. Cross-team delays weren’t too bad, although they often can be in this situation, but it did mean that teams’ work didn’t line up to our business needs. So the first thing I did was to introduce Quentin Quartel’s Fluid Scaling Technology, or FaST.

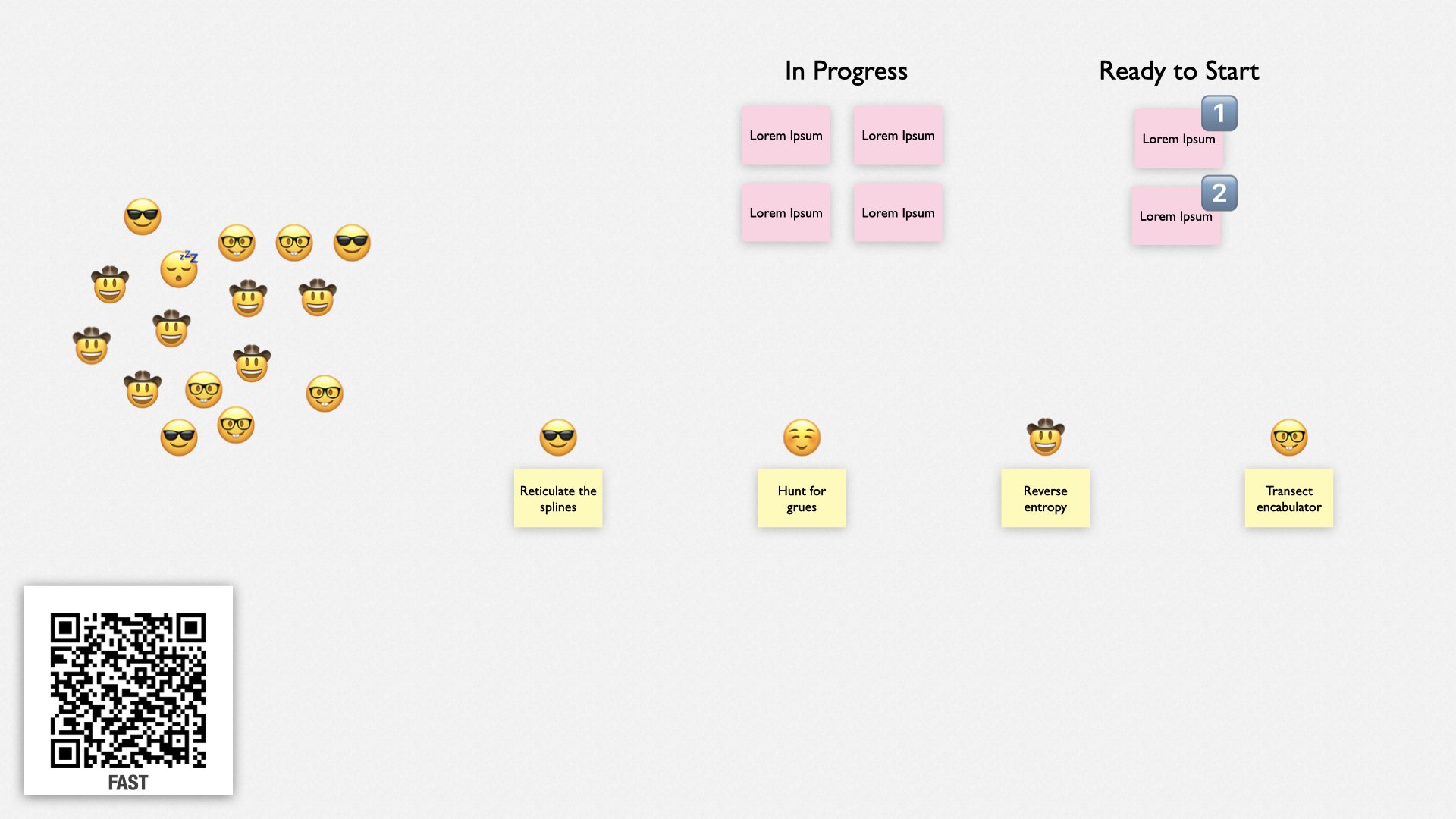

We don’t have time to discuss FaST today, but you can learn more about my approach to it by following this QR code. The short version is that we combined teams into product-centric “collectives” and created a single queue of work for each collective. Each product has a dedicated collective and work queue. Those collectives self-organize into teams as needed to tackle the highest priority work.

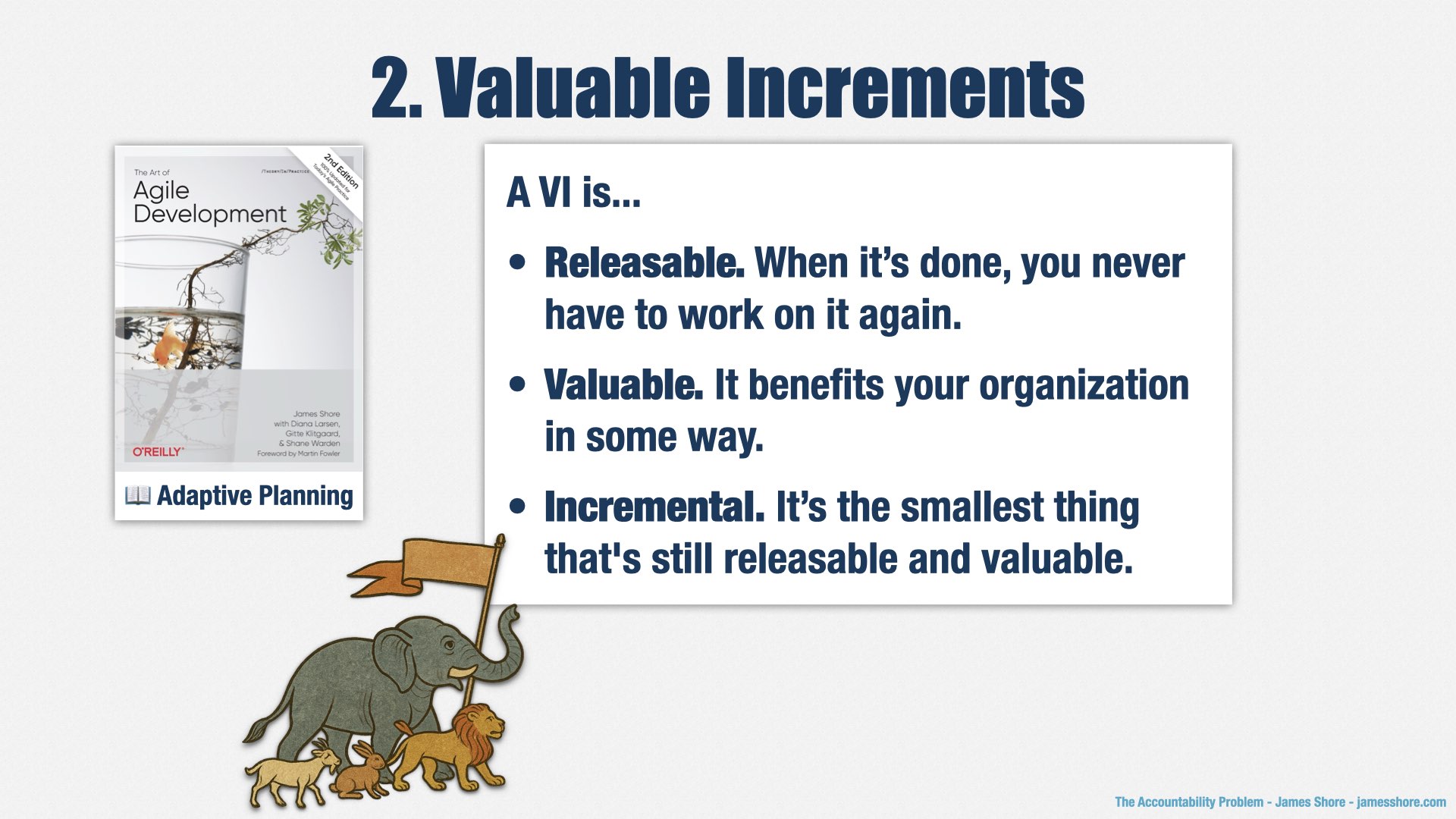

FaST solved the problem of teams not matching business needs. A related problem was the teams planned their work in terms of technical priorities rather than business results. They called them “stories,” and “epics,” and recorded them in Jira, but they were more like technical tasks. At the same time that I introduced FaST, I also introduced the idea of “Valuable Increments” from my book. (In case it’s not clear on the slide, my book is The Art of Agile Development, and it’s now available in a second edition. You can find this material in the “Adaptive Planning” section.)

A valuable increment is a similar idea to an epic, in that it groups together multiple stories, but an “epic” is literally a “big story.” A valuable increment isn’t focused on size; it’s focused on value. Each VI is something that stands alone. When it’s done, you can release it, and you’ll have gotten value out of it even if you never work on anything related to it ever again.

Introducing FaST and VIs allowed me to talk in terms of the business results my teams were creating for each product line, not just their technical accomplishments.

I also knew, from experience, that one of my biggest battles was going to be around estimates and forecasting. Before I could gain the trust of the organization, I needed to be able to demonstrate that I could do what I said I would. Up to this point, their experience of software development was that we never delivered on time. At the same time, I didn’t want people to over focus on features and dates.

So I played a game that, to this day, I’m not sure was the right approach. I had my engineering managers start collecting data so we could provide more accurate forecasts. While they did that, I told teams to stop providing estimates to stakeholders.

This caused a lot of anger in my stakeholders. They didn’t like hearing that they couldn’t have estimates. I told them that our estimates weren’t accurate, and we were working on getting better information, but they still didn’t like it. I think I only got away with it because there had been high-profile failures with the old approach, and I was still in my honeymoon period, but it still caused a lot of friction.

It worked out in the end, I think, because the new forecasts really are much more reliable, but I had to collect data for about six months before I could provide the new forecasts. That was an uncomfortable period. I could have kept the old approach to forecasting, but it definitely didn’t work. I’m not sure if “wrong estimates” would have been better than “no estimates.” On the one hand, a clean break meant that it was obvious that I had switched to a new approach, and—as I said—it really works. On the other hand, I made some important members of the leadership team angry in the meantime.

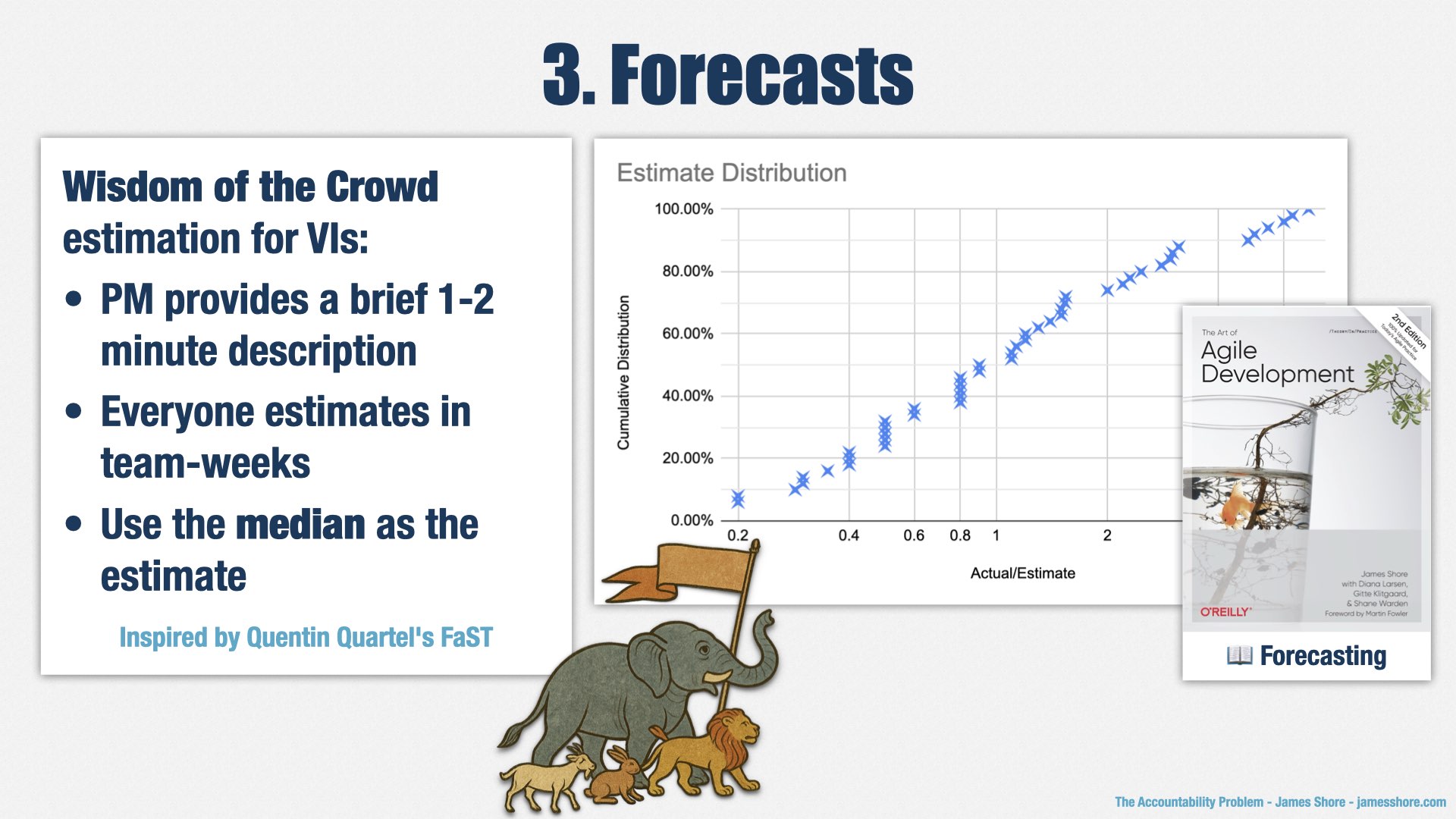

Anyway, the way it works is that we get a “wisdom of the crowd” estimate for each VI before works starts. That involves a product manager providing a very brief description of what the VI involves—just a minute or two of verbal explanation. People can ask clarifying questions, but there usually aren’t many. Then everyone provides their gut feel of how long the work will take a team to accomplish, in weeks. We collect the answers without discussing them and record the median response. That’s the estimate. It only takes a few minutes per VI. Since our collectives have between 12 and 25 people, including managers, product managers, and designers, there’s enough people to make the “crowd” part of “wisdom of the crowd” work.

Our Wisdom of the Crowd estimates are stunningly accurate. The median estimate for a VI actually matches the median reality. It’s amazing. The approach comes from Quentin Quartel and his FaST method, and I’ve never seen anything so good. It’s easy and it’s accurate.

However, although Wisdom of the Crowd estimates are accurate, in aggregate, they’re not very precise. We graph estimates versus actuals—you can see it on the right there. About 30% of VIs take twice as long as estimated, and about 30% take half as long as estimated. That’s a pretty big range.

So we don’t present the raw estimates to stakeholders. If we did, we’d be late half the time. Instead, we increase the estimate so we’re early more often than we’re late.

Doing this requires me to play a political balancing act. According to our data, never being late would require us to multiply our estimates by six or seven, and that wouldn’t fly. We can’t tell them that a small, two-week VI is going to take 3-4 months. On the other hand, it’s also not acceptable to be late half the time.

Right now, I’ve chosen to be 75% accurate. In other words, we’re early 75% of the time and late 25% of the time. For us, that’s about a 2x multiplier, depending on the team. I’ve also told stakeholders to expect about 1 in 4 VIs to go longer than expected. So far, it’s working well.

If you’d like to know more about the analysis behind this technique, it’s in my book in the “Forecasting” section.

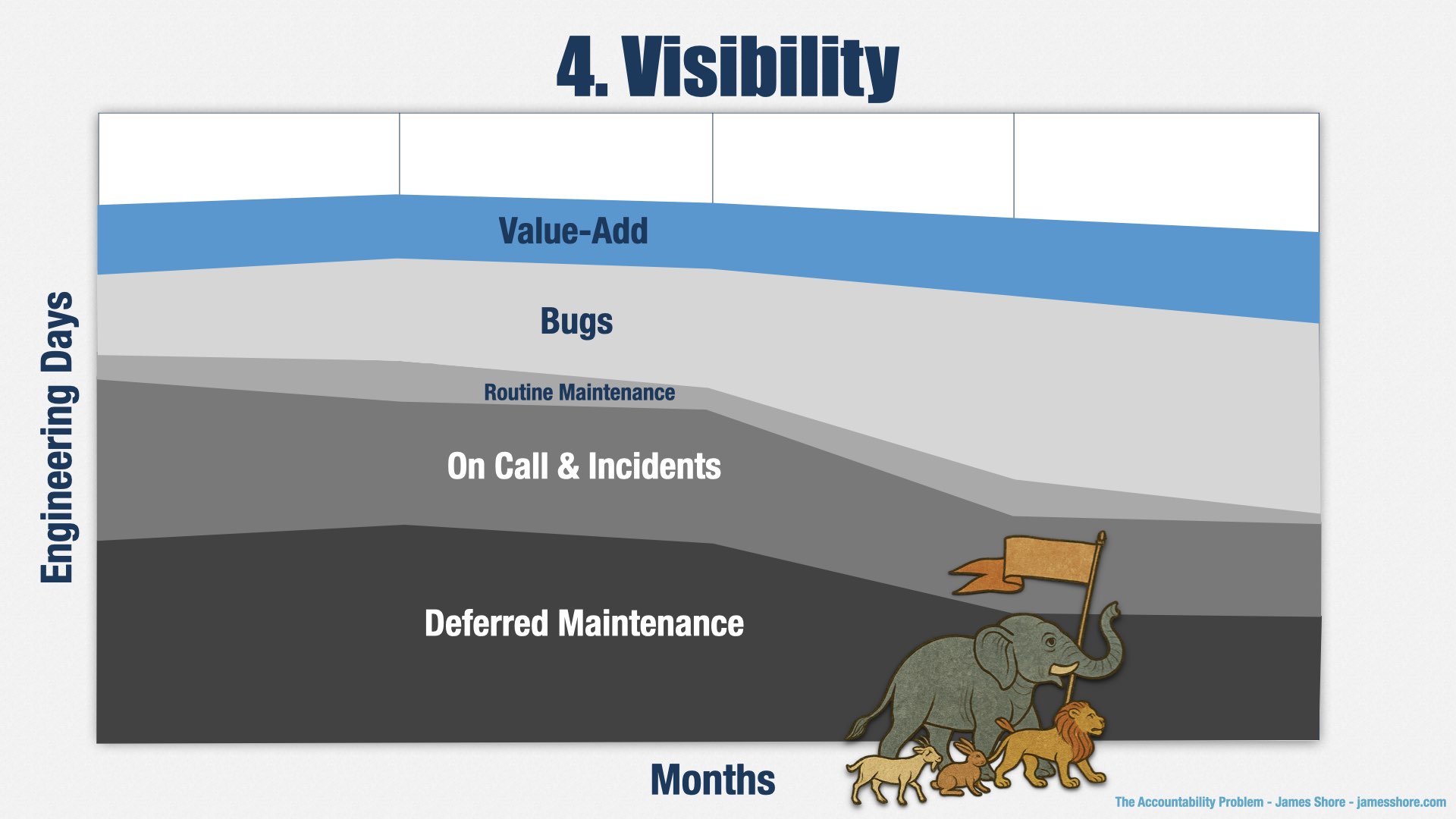

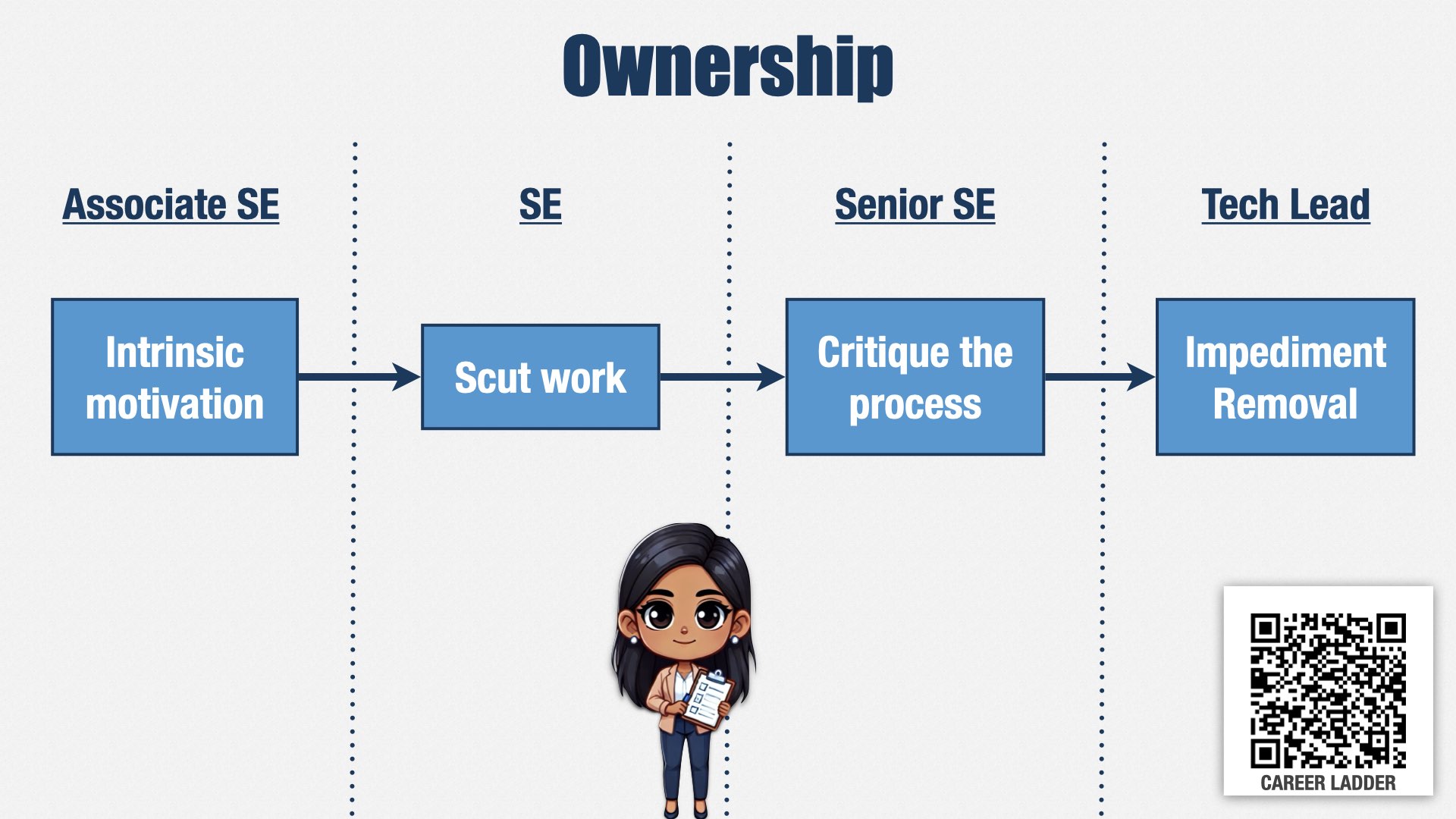

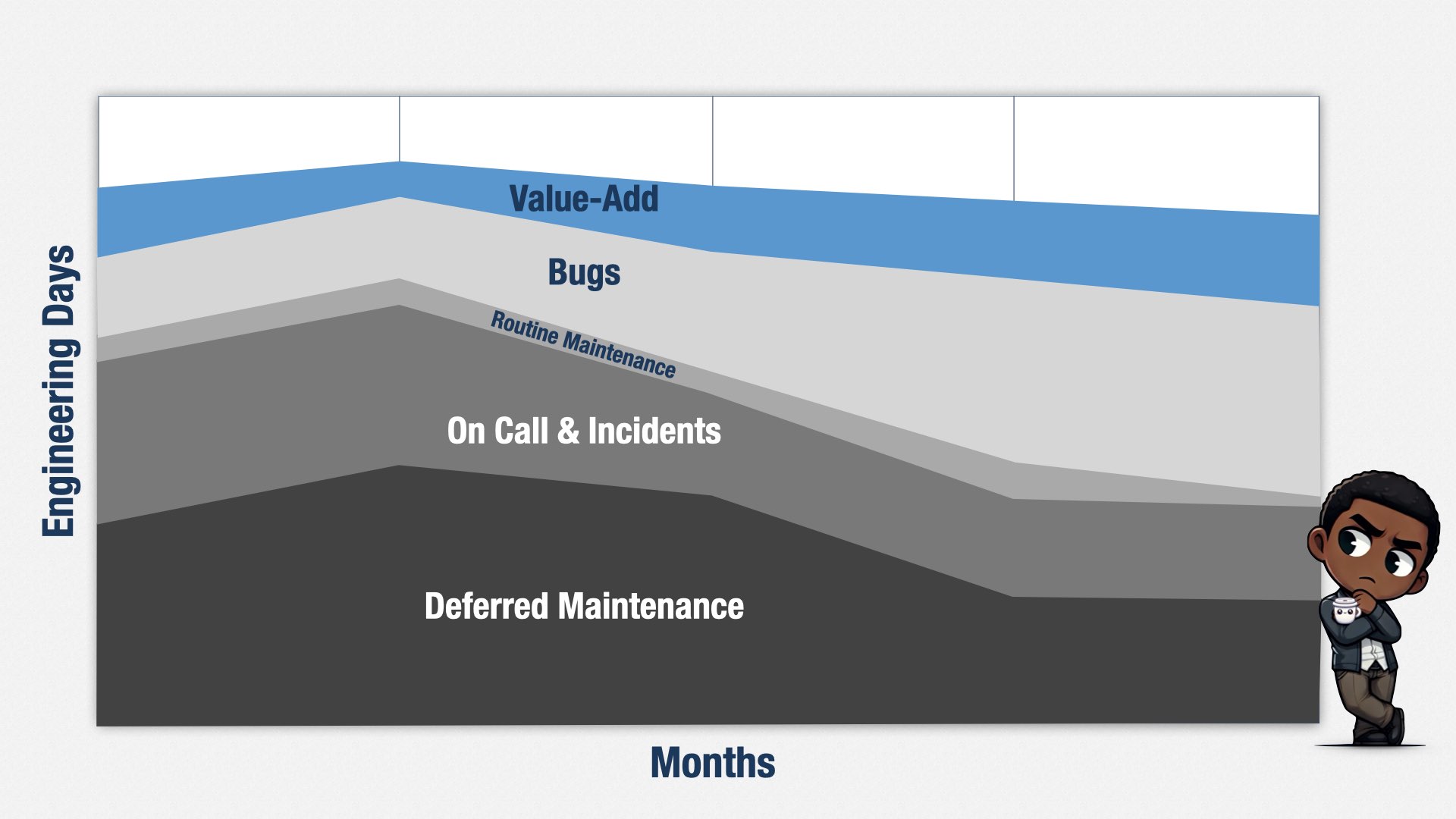

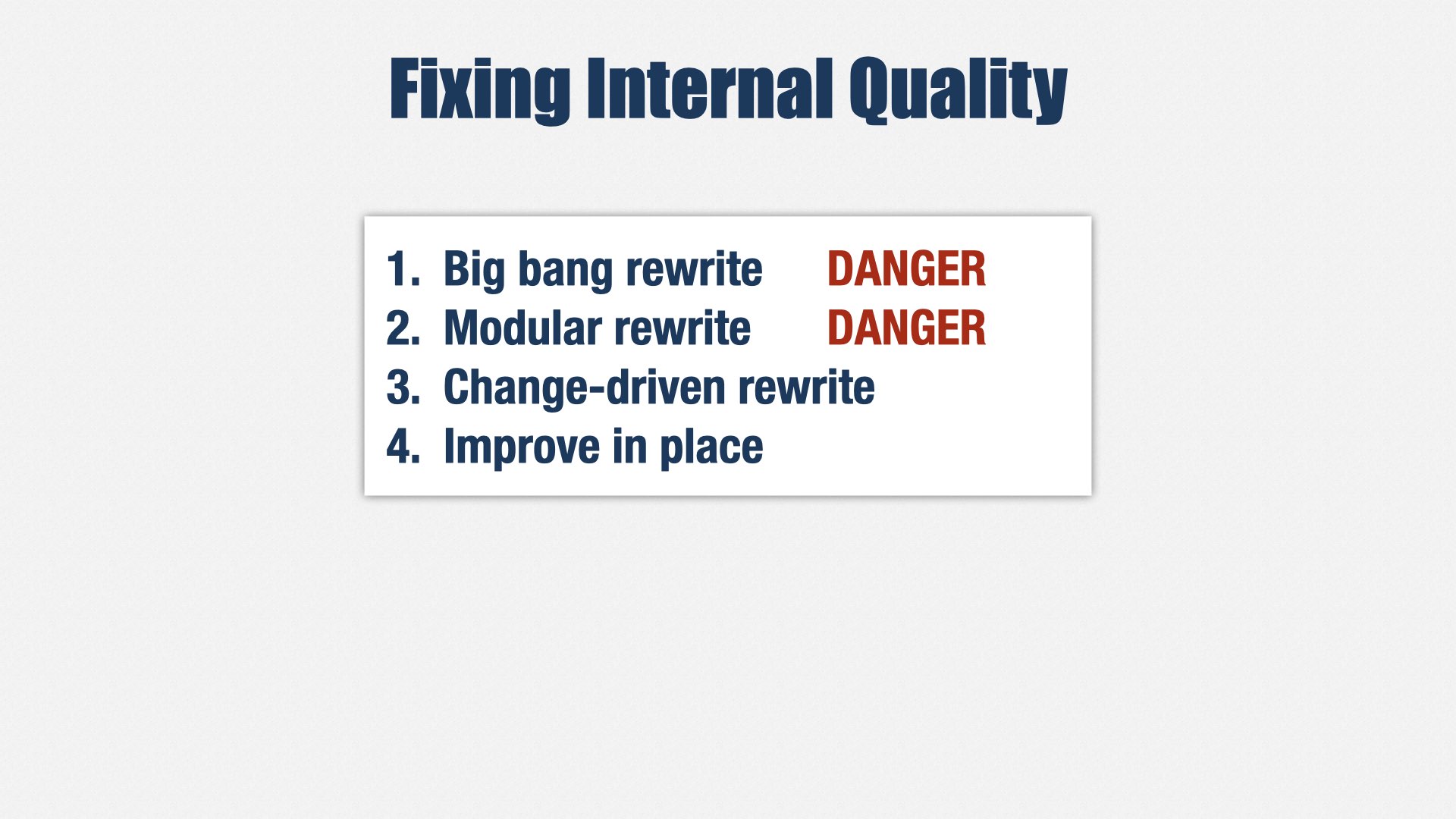

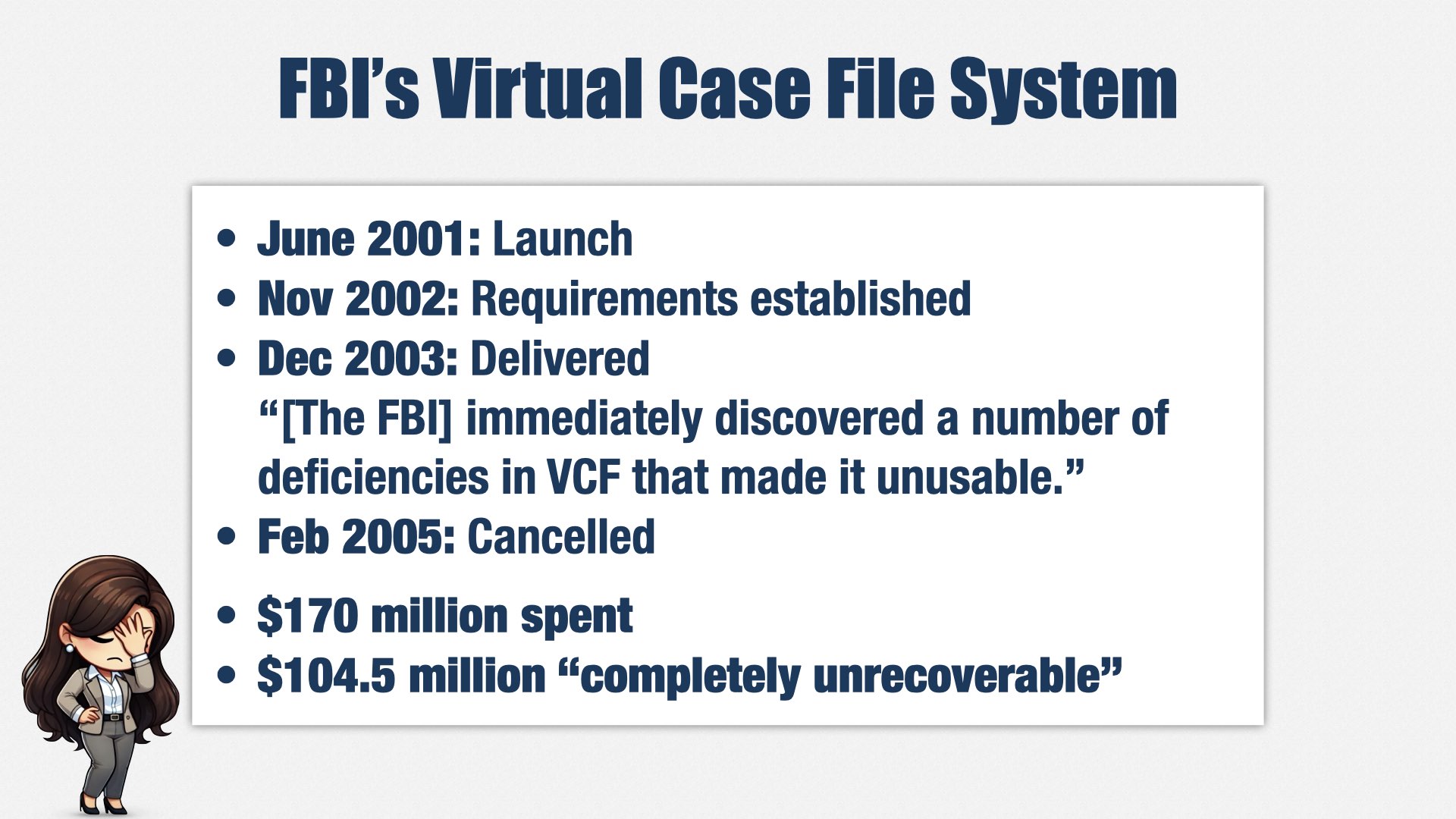

Collecting all that data for forecasting had a side benefit. My CEO pushed me to report productivity—that’s a whole ’nother story—and I decided to do it by reporting the percentage of time spent on muda versus the percentage of time spent on adding value to the business. Muda is activity that doesn’t add value. It’s the grey sections in the graph: maintenance, bugs, and on call.

This isn’t the real graph, for confidentiality reasons, but the story it tells is all too familiar: lots of time spent on deferred maintenance, lots of time spent on incidents, lots of bugs. And then just a fraction of time left over for doing valuable work.

I shared the real version of this graph with my leadership team and it was eye opening. All of the sudden, they understood exactly why things took so long, and why they didn’t ever get what they wanted. They had thought we had way more capacity than we actually did.

I told them that my responsibility was to reduce muda—the grey part—and make more room for valuable work—the blue part. That was an act of deliberate accountability, and it flipped the script. Yes, people still wanted me to be accountable for making teams deliver feature X on date Y, with all the fighting about deadlines that involves, but even more importantly, and primarily, I was accountable for decreasing muda. That’s precisely what I needed to be focused on, because that was our biggest problem.

And, over the past two years, that’s exactly what I’ve done. I report on my progress every quarter, and every quarter it’s a little bit better than it was before. And every quarter, I get a little bit less pushback on predicting dates.

And then, finally, I just kept pushing. These two books are excellent resources on how to do so.

I introduced the original variant of the product bet idea in January 2024, or maybe even earlier. It didn’t go anywhere. I brought it up again in March 2024. We sort of tried it, without leadership buy-in, and it sort of fizzled. I brought it up again, and again. I worked with my colleague, the VP of Product. I talked to the Chief Product Officer. I included it in a presentation to leadership about how Agile works. I piggybacked on the CEO’s passion for quantifying results. I stopped asking Leadership to create financial models and just created my own, then asked them to fill in the values. (That’s why they’re not very rigorous.)

And then finally, in March of 2025, the stars aligned. The CPO started pushing the rest of the leadership team to get involved. We created five product bets, the leadership team filled in my spreadsheet, and we started working on the first bet. And now we’re off to the races. We just started our second bet a few months ago, and we’re talking about how to increase capacity for more bets.

There’s lot more to do, and lots more to learn, but now that the logjam has broken, I think it’s going to stick. Our new CFO is intrigued and I’m able to show steady progress with my VIs and forecasting techniques. I’m well on my way to erasing the stigma that engineering can’t be trusted to deliver. I had 18-24 months to make a difference. I’ve just passed my 2nd year at OpenSesame, and I’m still here. I think it’s going to work out.

Software development may be a foreign country to the rest of the business, but we can still be a trusted part of their empire.

To do so, we have to take accountability, rather than allowing it to be forced upon us. Rather than falling into the habit of delivering X features on Y date, we can be accountable for what really matters: results, just like our colleagues in sales, marketing, and other parts of the business. And the results we create are new opportunities. Enabling more prospects. New partners. More leads. Better retention.

Product bets allow us to be accountable for the estimated value of those results. So far, they’ve been working for me. I hope they work for you, too.

![Book cover for the Korean translation of “The Art of Agile Development, Second Edition” by James Shore. The title reads, “[국내도서] 애자일 개발의 기술 2/e”. It’s translated by 김모세 and published by O’Reilly. Other than translated text, the cover is the same as the English edition, showing a water glass containing a goldfish and a small sapling with green leaves.](/images/aoad2/cover/aoad2-korean-cover-959x1200.jpg)